Is it true that the Apple Vision Pro has lower optical performance than the Meta Quest 3?

A headset's display has a physical resolution, but because of the rendering method and various display processes, the resolution that finally reaches the eye differs from the spec sheet. The resolution the user actually sees is called the 'effective resolution.' VR engineer Thomas examines how this plays out on Apple Vision Pro in a post on his blog, Segmentation Fault:

Apple Vision Pro has the same effective resolution as Quest 3…Sometimes? And there's not much app devs can do about it, yet.

https://douevenknow.us/post/750217547284086784/apple-vision-pro-has-the-same-effective-resolution

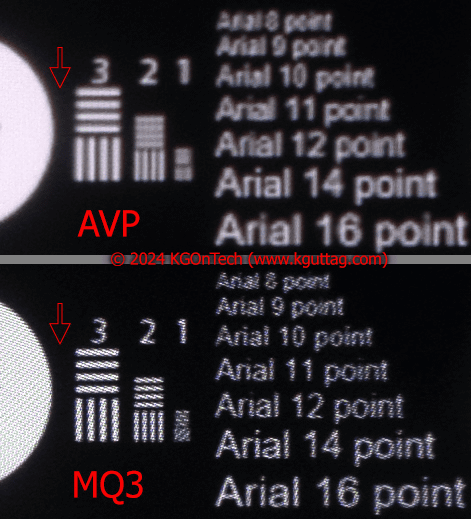

KGOnTech, a blog covering VR-related technology, claims that 'Apple Vision Pro's optics are blurrier and have lower contrast than Meta Quest 3.' KGOnTech ran a test using a 1080p black-and-white test pattern and argued that Meta Quest 3 can display clearer, higher-contrast images than Apple Vision Pro. Because the test pattern looked blurrier on Apple Vision Pro, KGOnTech concluded that its optics and display performance fall short of Meta Quest 3's.
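As a toy illustration only (this is not KGOnTech's measurement procedure), the sketch below shows why blur shows up as lower contrast on a fine black-and-white pattern. It applies a simple box blur to a row of alternating 1-pixel lines and compares the Michelson contrast, (I_max - I_min) / (I_max + I_min), before and after. The function names, blur radius, and pattern length are assumptions chosen for the example.

```python
def box_blur(row, radius):
    """Average each sample with its neighbors within `radius` (edges use a shorter window)."""
    n = len(row)
    out = []
    for i in range(n):
        window = row[max(0, i - radius):min(n, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out


def michelson_contrast(row):
    """Michelson contrast (I_max - I_min) / (I_max + I_min) of a luminance row."""
    i_max, i_min = max(row), min(row)
    return (i_max - i_min) / (i_max + i_min)


pattern = [1.0, 0.0] * 8  # alternating 1-pixel white and black lines
sharp = michelson_contrast(pattern)
blurred = michelson_contrast(box_blur(pattern, radius=1))
print(sharp, round(blurred, 3))  # 1.0 0.333
```

Even a 3-tap blur cuts the contrast of 1-pixel lines from 1.0 to 1/3, which is why fine test patterns are sensitive to both optical blur and any softening introduced earlier in the rendering pipeline.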

However, while Thomas agrees with KGOnTech's argument, he emphasizes that factors other than optics play a major role in Apple Vision Pro's visual quality.

Apple Vision Pro uses a technique called 'Variable Rasterization Rate (VRR)' to optimize rendering performance. Normally, a 3D scene is rasterized at a uniform resolution across the entire frame. VRR instead enables foveated rendering, which renders the area the user is looking at in high resolution and the periphery in low resolution.
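A minimal one-axis sketch of the idea, assuming a made-up layout of three equal-width zones: each zone has a rasterization rate, and a screen-space coordinate maps into a smaller render target by accumulating zone widths scaled by their rates. The function name, zone count, and rates are hypothetical and are not Apple's API.

```python
def screen_to_physical(x, zone_width, rates):
    """Map screen-space x to the x coordinate in the compressed render target.

    rates[i] is the rasterization rate of zone i (1.0 = full resolution,
    0.5 = half). Hypothetical 1D model of a variable rasterization rate map.
    """
    physical = 0.0
    for i, rate in enumerate(rates):
        start = i * zone_width
        if x <= start + zone_width:
            # x falls inside zone i: add the scaled distance into this zone.
            return physical + (x - start) * rate
        # Skip the whole zone, which occupies zone_width * rate render-target pixels.
        physical += zone_width * rate
    return physical  # x past the last zone clamps to the render-target width


RATES = [0.5, 1.0, 0.5]  # periphery, center, periphery
ZONE = 640               # three zones span a 1920-pixel-wide screen
render_width = screen_to_physical(1920, ZONE, RATES)
print(render_width)                          # 1280.0
print(screen_to_physical(960, ZONE, RATES))  # 640.0: screen center maps to render-target center
```

Under these assumptions, a 1920-pixel-wide view fits into a 1280-pixel-wide render target while the central zone keeps a 1:1 mapping; the periphery is stretched back out when the frame is displayed.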

The main benefits of VRR are lower computational cost, lower memory bandwidth, and faster rendering, while largely preserving visual quality. Because the human eye sees sharply only near the center of its gaze, lowering the resolution in the periphery barely reduces perceived visual quality. Rendering part of the frame at lower resolution means the GPU shades fewer pixels and moves less data through memory, so the frame finishes sooner than if the whole scene were rendered at full resolution.
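To put rough numbers on the savings, the sketch below counts shaded pixels with and without foveation, assuming a hypothetical 3x3 grid of equal zones in which the center zone is rendered at full rate and the outer zones at half rate along each axis. The 1920x1824 frame size is borrowed from the rendering resolution Thomas cites; the zone layout, rates, and function name are made up for illustration.

```python
def shaded_pixels(width, height, col_rates, row_rates):
    """Count pixels actually shaded for a separable (column x row) rate map.

    Zones are equal-sized; a zone with column rate cr and row rate rr is
    shaded at (zone_w * cr) x (zone_h * rr) pixels. Illustrative model only.
    """
    zone_w = width / len(col_rates)
    zone_h = height / len(row_rates)
    total = 0.0
    for cr in col_rates:
        for rr in row_rates:
            total += (zone_w * cr) * (zone_h * rr)
    return round(total)


W, H = 1920, 1824  # example frame size
full = shaded_pixels(W, H, [1.0] * 3, [1.0] * 3)
foveated = shaded_pixels(W, H, [0.5, 1.0, 0.5], [0.5, 1.0, 0.5])
print(full, foveated, f"{foveated / full:.0%}")  # 3502080 1556480 44%
```

With this layout the GPU shades about 44% as many pixels, and color-buffer writes shrink roughly in proportion; real savings depend on the actual rate map and scene.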

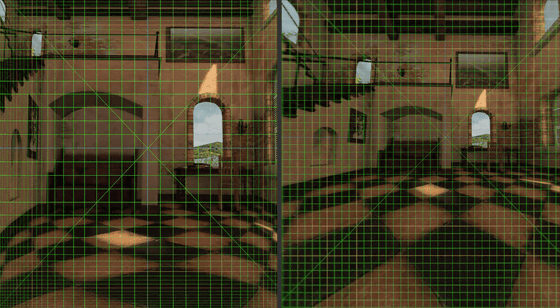

In the image above, the left side shows the center of the field of view and the right side shows the entire field of view. With VRR, only the region shown on the left is rendered at high resolution and the rest at low resolution, so the effective resolution where the user is looking stays high while the processing load drops.

Thomas developed a benchmark tool with RealityKit to test visual quality. The following video shows the tool running on Apple Vision Pro.

Tuff Test Tracked Mipmaps (YouTube)

After re-examining the issue with this tool, Thomas speculates that KGOnTech's results reflect fixed foveated rendering (FFR) rather than dynamic foveated rendering (DFR). Apple Vision Pro has eye tracking and can perform DFR, which follows the user's gaze and moves the high-resolution region along with it. However, the images KGOnTech showed looked almost exactly like what FFR produces, so what users actually see on Apple Vision Pro may differ from those results.
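The difference between FFR and DFR comes down to where the high-resolution region is centered. The sketch below assigns a shading rate from the distance to the foveal center in normalized screen coordinates: with FFR that center is pinned to the middle of the view, with DFR it follows the gaze. The radii, rates, and function name are hypothetical values chosen for illustration.

```python
import math


def shading_rate(point, fovea, inner=0.15, outer=0.35):
    """Return a shading rate for a point in normalized [0, 1] screen coords.

    Full rate within `inner` of the foveal center, half rate out to `outer`,
    quarter rate beyond. Thresholds are illustrative assumptions.
    """
    d = math.dist(point, fovea)
    if d <= inner:
        return 1.0
    if d <= outer:
        return 0.5
    return 0.25


SCREEN_CENTER = (0.5, 0.5)
text = (0.9, 0.5)  # text the user is reading, well right of center

ffr_rate = shading_rate(text, fovea=SCREEN_CENTER)  # fixed: fovea stays centered
dfr_rate = shading_rate(text, fovea=text)           # dynamic: fovea follows the gaze
print(ffr_rate, dfr_rate)  # 0.25 1.0
```

Under FFR, off-center text is always rendered coarsely; under DFR it is rendered at full rate whenever the user looks at it, so the outcome depends on where the eyes are pointed.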

According to Thomas, when viewing a virtual 28-inch 1080p panel from a distance of 30 inches (about 76 cm), Apple Vision Pro and Meta Quest 3 delivered the same effective resolution. He added that because Apple Vision Pro supports DFR, text could sometimes be read more clearly on it, depending on how the eyes and head move. Thomas argued that this shows Apple Vision Pro's hardware is not inferior to Meta Quest 3's.
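For a sense of scale, the sketch below estimates how many pixels per degree a headset must deliver to resolve every pixel of that virtual panel. It assumes a flat 16:9 panel centered in view and averages across its width, so the true requirement is somewhat lower near the panel's center and higher toward its edges. The function name is hypothetical.

```python
import math


def average_ppd(diagonal_in, distance_in, h_pixels=1920, aspect=(16, 9)):
    """Average horizontal pixels per degree for a flat, centered panel."""
    aw, ah = aspect
    width_in = diagonal_in * aw / math.hypot(aw, ah)  # panel width from its diagonal
    h_fov_deg = math.degrees(2 * math.atan((width_in / 2) / distance_in))
    return h_pixels / h_fov_deg, h_fov_deg


ppd, fov = average_ppd(28, 30)
print(f"{fov:.1f} deg wide, {ppd:.1f} px/deg")  # 44.3 deg wide, 43.4 px/deg
```

If the effective resolution falls below roughly 43 pixels per degree, neighboring pixels of the 1080p panel can no longer be fully resolved, which makes a full-screen 1080p pattern a demanding test.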

Thomas argues that properly evaluating Apple Vision Pro's visual quality requires considering not only the optical system but also factors such as the rendering method and mipmapping. According to Thomas, Apple Vision Pro's rendering resolution is low, at 1920x1824 pixels, which makes it more susceptible to the loss of legibility that mipmapping can cause.
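Mipmapping picks a pre-shrunk copy of a texture based on how many texels land on each rendered pixel; a common formulation takes the level as log2 of the texels per pixel. The simplified one-axis sketch below (real GPUs derive this per pixel from screen-space derivatives) shows why a lower rendering resolution matters: the same virtual panel covers fewer rendered pixels, so a coarser, blurrier mip level is selected. The texture size, coverage widths, and function name are hypothetical.

```python
import math


def mip_level(texture_px, covered_render_px):
    """Continuous mip level (LOD) for a texture drawn across covered_render_px pixels.

    One-axis simplification: LOD = log2(texels per pixel), clamped to the
    valid range [0, last level] of a power-of-two mip chain.
    """
    last_level = int(math.log2(texture_px))
    texels_per_pixel = texture_px / covered_render_px
    lod = math.log2(texels_per_pixel) if texels_per_pixel > 1 else 0.0
    return min(lod, last_level)


# A 2048-texel-wide window texture drawn into different render-target widths.
for covered in (2048, 1024, 512):
    print(covered, mip_level(2048, covered))
# 2048 0.0
# 1024 1.0
# 512 2.0
```

At level 1, each texel is an average of a 2x2 block of the original, so 1-pixel-wide strokes of text get blended with their background and lose contrast, the kind of legibility loss Thomas attributes to mipmapping.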

Thomas pointed out that Apple Vision Pro's rendering stack is not yet sophisticated in its mipmapping and sampling control. He argued that the loss of legibility on Apple Vision Pro stems from software, and that it is therefore not appropriate to judge visual quality solely on the performance of the optical system. If the cause is indeed software, he added, there is plenty of room for improvement in the future.
