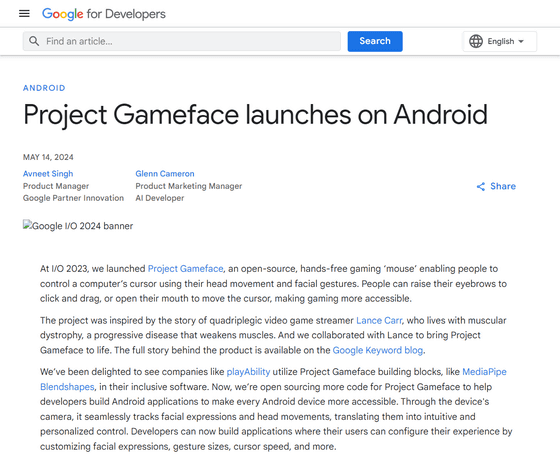

'Project Gameface', a system that lets you control the mouse cursor with your face, is now open source and available for Android

Project Gameface launches on Android - Google Developers Blog

https://developers.googleblog.com/en/project-gameface-launches-on-android/

Android apps coming soon let you use your face to control your cursor - The Verge

https://www.theverge.com/2024/5/14/24156810/google-android-project-gameface-accessibility-io

Project Gameface was inspired by the story of game streamer Lance Carr, who lives with muscular dystrophy, a progressive muscle-wasting disease.

Gaming with muscular dystrophy | Project GameFace featuring Lance Carr | Google - YouTube

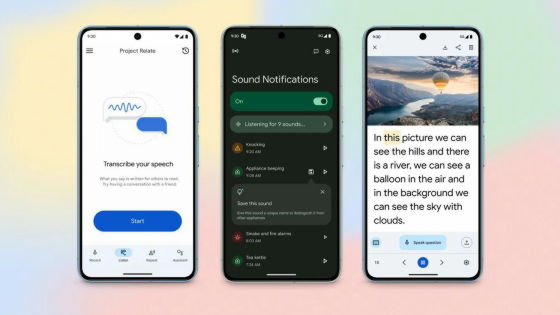

Companies such as playAbility are already developing products with Project Gameface. Google has now announced that it is open-sourcing Project Gameface so that any Android app developer can use it. The software tracks facial expressions and head movements through the camera of an Android device and translates them into intuitive, personalized controls. App developers can also build applications that let users adjust which facial expressions are used, gesture sizes, cursor speed, and other settings to customize how they control the cursor.

Introducing Project Gameface for Android - YouTube

Google says it based its development on three core principles when building Project Gameface for Android:

1. Give people with disabilities an additional way to operate their Android devices.

2. Build a publicly available, cost-effective solution that allows for scalable use.

3. Leverage learnings and guiding principles from the initial launch of Project Gameface to make the product user-friendly and customizable.

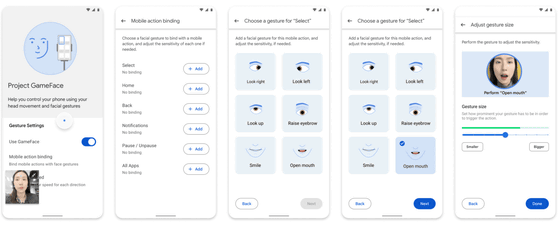

Like the original desktop release, the Android version of Project Gameface introduces a virtual cursor for interacting with the device. The cursor is built on Android's accessibility service and is programmed to follow the user's head movements, which are tracked with MediaPipe's Face Landmarks Detection API.
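As a rough illustration of how head movement could drive a cursor, the sketch below converts the frame-to-frame displacement of a single tracked face point into a cursor offset. It is not taken from the Project Gameface source: the `Cursor` class, the `move_cursor` function, the `speed` parameter, and the choice of one tracked point are all assumptions made for this example. It only relies on the fact that MediaPipe reports landmark positions as normalized coordinates between 0 and 1.

```python
from dataclasses import dataclass


@dataclass
class Cursor:
    """Hypothetical cursor position in screen pixels."""
    x: float
    y: float


def move_cursor(cursor, prev_point, curr_point, speed, screen_w, screen_h):
    """Return a new cursor moved by the displacement of one face point.

    prev_point and curr_point are (x, y) tuples in normalized [0, 1]
    coordinates from two consecutive camera frames. speed is a
    user-adjustable multiplier (an assumption of this sketch).
    """
    # Scale the normalized displacement to pixels, then apply the multiplier.
    dx = (curr_point[0] - prev_point[0]) * screen_w * speed
    dy = (curr_point[1] - prev_point[1]) * screen_h * speed

    # Clamp so the cursor never leaves the screen.
    new_x = min(max(cursor.x + dx, 0.0), screen_w - 1)
    new_y = min(max(cursor.y + dy, 0.0), screen_h - 1)
    return Cursor(new_x, new_y)


if __name__ == "__main__":
    start = Cursor(540.0, 1200.0)
    moved = move_cursor(start, (0.50, 0.50), (0.52, 0.50), 2.0, 1080, 2400)
    print(moved)
```

A real implementation would likely also smooth the signal across frames and account for camera mirroring, both of which are left out here to keep the idea visible.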

The API outputs 52 face blendshape values, each representing a facial gesture such as 'raise left eyebrow' or 'open mouth'. These values can be mapped to a wide range of functions, giving users more options for customization and control. Because each blendshape is reported as a coefficient, developers can also set a separate threshold for each expression to tailor the experience.
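The per-expression threshold idea can be sketched in a few lines. The example below is illustrative only and does not reproduce the Project Gameface code: the `GESTURE_BINDINGS` table, the action names `tap` and `go_home`, and the threshold values are hypothetical. The blendshape names `jawOpen` and `browOuterUpLeft` follow MediaPipe's blendshape naming, and scores are assumed to lie between 0 and 1.

```python
# Hypothetical bindings: blendshape name -> (threshold, action name).
# A lower threshold makes the gesture easier to trigger.
GESTURE_BINDINGS = {
    "jawOpen": (0.4, "tap"),
    "browOuterUpLeft": (0.6, "go_home"),
}


def triggered_actions(blendshape_scores, bindings=GESTURE_BINDINGS):
    """Return the actions whose blendshape score meets its threshold.

    blendshape_scores maps blendshape names to coefficients in [0, 1];
    names missing from the mapping are treated as 0.
    """
    actions = []
    for name, (threshold, action) in bindings.items():
        if blendshape_scores.get(name, 0.0) >= threshold:
            actions.append(action)
    return actions


if __name__ == "__main__":
    frame_scores = {"jawOpen": 0.55, "browOuterUpLeft": 0.20}
    print(triggered_actions(frame_scores))
```

Keeping a separate threshold per gesture means a user who can open their mouth only slightly can lower that one value without making eyebrow gestures fire accidentally.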

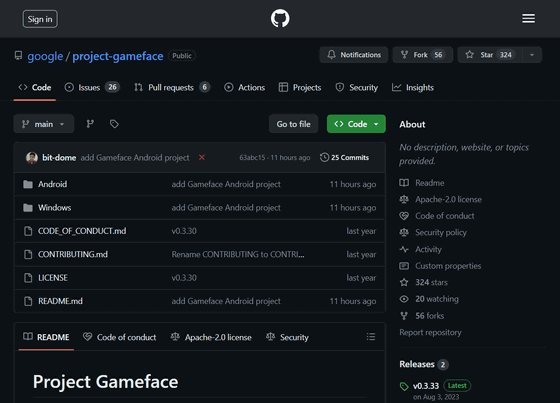

The open source Project Gameface is available on GitHub.

GitHub - google/project-gameface

https://github.com/google/project-gameface

in Video, Software, Smartphone, Posted by logu_ii