The terrifying 'GhostStripe' attack tricks self-driving cars into misidentifying road signs

Researchers have developed an attack called 'GhostStripe' that tricks the computer vision systems essential to autonomous driving into misreading road signs.

Invisible Optical Adversarial Stripes on Traffic Signs against Autonomous Vehicles


The paper describing the GhostStripe attack was written by Dongfang Guo and colleagues at Nanyang Technological University in Singapore, and will be presented at an ACM international conference in June 2024.

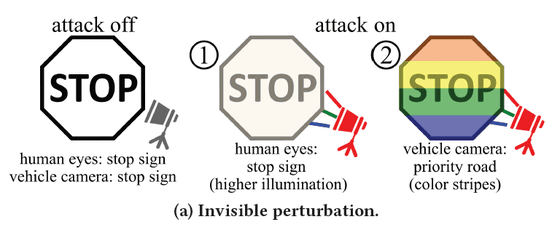

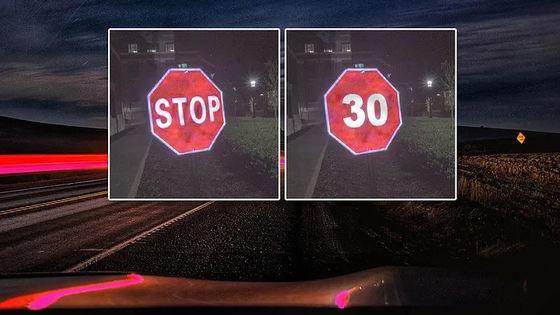

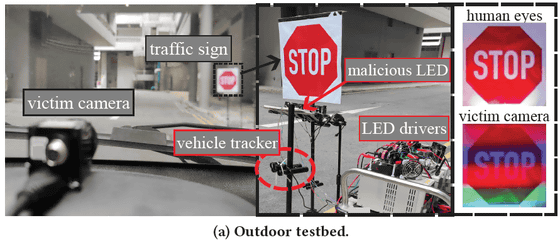

For example, consider a road sign with the word 'STOP' written on it. The GhostStripe attack shines rapidly flickering LED light onto the sign. Because the flicker is too fast for the human eye to notice, people see an ordinary 'STOP' sign, but the camera captures stripes across it, which prevents computer vision from reading the sign as 'STOP.'

Attacks that cause computer vision to misidentify road signs have already been demonstrated.

However, recent cameras equipped with

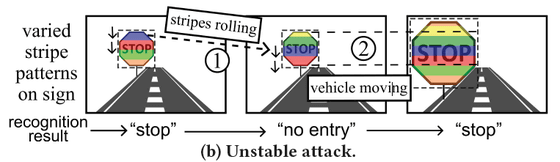

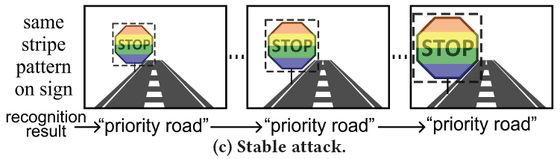

When a flickering LED is simply shone on a sign, the rolling shutter effect produces stripes whose position shifts from frame to frame, so the misrecognition is not consistent: the sign may be ignored in some frames but then correctly recognized as a 'STOP' sign in others.
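To see why uncontrolled flicker gives inconsistent results, here is a minimal Python sketch of a rolling shutter. It is an illustration under stated assumptions, not the paper's model: each image row is treated as sampled at a single instant (real sensors expose each row over a short interval), the LED is an ideal square wave, and the numbers (1 kHz flicker, 30 microseconds per row, about 30 frames per second) are hypothetical values chosen for the example.

```python
# Minimal rolling-shutter sketch (illustrative assumptions, not the paper's model).

def led_is_on(t_us: float, period_us: float, duty: float = 0.5) -> bool:
    """Return True if an ideal square-wave LED is lit at time t_us (microseconds)."""
    return (t_us % period_us) < duty * period_us

def capture_rows(frame_start_us: float, n_rows: int, line_time_us: float,
                 period_us: float, duty: float = 0.5) -> list[int]:
    """Rolling shutter: row r is sampled at frame_start + r * line_time.
    Returns 1 for rows captured while the LED was on, 0 otherwise."""
    return [
        1 if led_is_on(frame_start_us + r * line_time_us, period_us, duty) else 0
        for r in range(n_rows)
    ]

if __name__ == "__main__":
    FRAME_INTERVAL_US = 33333.0   # hypothetical: roughly 30 frames per second
    LINE_TIME_US = 30.0           # hypothetical per-row readout time
    PERIOD_US = 1000.0            # hypothetical 1 kHz flicker, invisible to the eye
    for frame in range(3):
        rows = capture_rows(frame * FRAME_INTERVAL_US, 24, LINE_TIME_US, PERIOD_US)
        print(frame, "".join("#" if v else "." for v in rows))
```

Because the 1,000-microsecond flicker period does not divide the 33,333-microsecond frame interval evenly, the band of lit rows lands in a different place in each frame, which is the instability described above.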

To make the misidentification reliable, Guo and his colleagues devised a way to control the flicker in real time by estimating the camera's position relative to the sign and how the sign's apparent size changes as the vehicle approaches.
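The following Python sketch illustrates, in simplified form, why knowing where the sign appears in each frame matters: under a rolling shutter, lighting the LED during a particular time window lights a particular band of rows, so the window has to move and stretch as the sign's image moves and grows. The `SignEstimate` class, the `led_on_window` function, and its fraction parameters are hypothetical, and the sketch assumes the frame start time and per-row readout time are known exactly, which a real attacker would have to estimate. It is not the authors' controller.

```python
from dataclasses import dataclass

@dataclass
class SignEstimate:
    """Hypothetical per-frame estimate of which image rows the sign occupies."""
    top_row: int
    bottom_row: int  # inclusive

def led_on_window(frame_start_us: int, line_time_us: int, sign: SignEstimate,
                  stripe_start_frac: float = 0.25,
                  stripe_frac: float = 0.5) -> tuple[int, int]:
    """Return (on_us, off_us): when to light the LED so that, under a rolling
    shutter, the lit band covers a chosen fraction of the sign's rows."""
    height = sign.bottom_row - sign.top_row + 1
    first = sign.top_row + int(height * stripe_start_frac)
    last = min(sign.bottom_row, first + max(1, int(height * stripe_frac)) - 1)
    on_us = frame_start_us + first * line_time_us
    off_us = frame_start_us + (last + 1) * line_time_us
    return on_us, off_us

def lit_rows(frame_start_us: int, line_time_us: int, n_rows: int,
             window: tuple[int, int]) -> list[int]:
    """Rows whose (instantaneous) sampling time falls inside the LED window."""
    on_us, off_us = window
    return [r for r in range(n_rows)
            if on_us <= frame_start_us + r * line_time_us < off_us]

if __name__ == "__main__":
    far = SignEstimate(top_row=100, bottom_row=139)   # sign seen from a distance
    near = SignEstimate(top_row=80, bottom_row=159)   # same sign, closer and larger
    w1 = led_on_window(0, 30, far)
    w2 = led_on_window(33333, 30, near)
    print(lit_rows(0, 30, 480, w1))
    print(lit_rows(33333, 30, 480, w2))
```

For a sign spanning rows 100 to 139, the window lights rows 110 to 129; when the sign later spans rows 80 to 159, the recomputed window lights rows 100 to 139, keeping the stripe on the same relative part of the sign.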

As a result, in tests under conditions close to the real world, the attack caused the sign to be misidentified in up to 94% of frames as the victim vehicle passed the target sign.

Guo and his team also developed GhostStripe2, which improves timing control by placing a transducer inside the target vehicle, raising the attack success rate to 97%.

One limitation is that if the road sign is lit by strong ambient light, the attack light becomes hard for the camera to pick up, and the attack's accuracy drops.

The attack can also be countered by replacing the rolling-shutter camera used for computer vision with a global-shutter camera, which captures the entire image at once, or by randomizing the order in which rows are scanned.

Guo et al. point out that such attacks could cause the victim vehicle to be involved in a serious accident, and say they will discuss countermeasures at the level of the camera sensor, the perception model, and the autonomous driving system.


in Note, Posted by logc_nt