Version 0.7 of "llamafile", which distributes and runs large language models as a single file, speeds up prompt processing by up to 10 times

"llamafile v0.7", a package that lets you distribute and run a large language model (LLM) as a single executable file of only about 4GB, has been released. This version improves the performance and accuracy of computation on both the CPU and GPU.

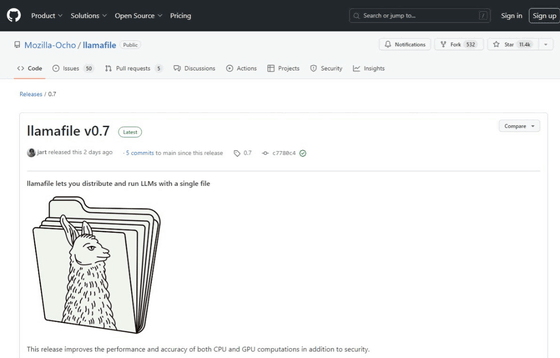

Release llamafile v0.7 · Mozilla-Ocho/llamafile · GitHub

https://github.com/Mozilla-Ocho/llamafile/releases/tag/0.7

Llamafile 0.7 Brings AVX-512 Support: 10x Faster Prompt Eval Times For AMD Zen 4 - Phoronix

LLaMA Now Goes Faster on CPUs

llamafile is a mechanism that packages an LLM as a single file that can be executed on most systems, so that developers and end users can easily distribute and use LLMs.

How to easily run "llamafile", a mechanism that lets you distribute and run AI based on large language models as a single executable file of only 4 GB, on Windows and Linux - GIGAZINE

It has been reported that llamafile v0.7, released on March 31, 2024 (local time), significantly improves prompt processing speed on the CPU.

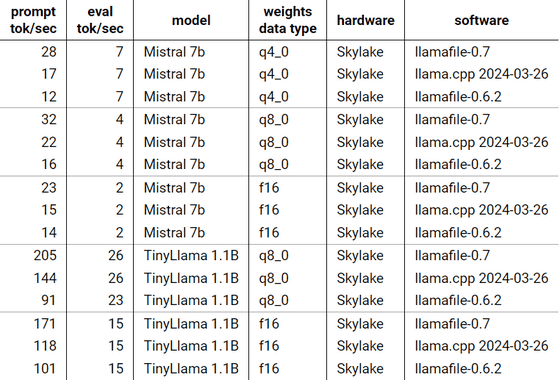

Engineer Justine Tunney ran "llamafile v0.7", "llamafile v0.6.2", and "llama.cpp 2024-03-26", the inference software that is also incorporated in llamafile, and published a comparison of their processing speeds.

Below are the results on a 2020 HP machine (equipped with an Intel Core i9-9900) that Tunney had on hand. Across different models and parameters, llamafile v0.7 shows the best results.

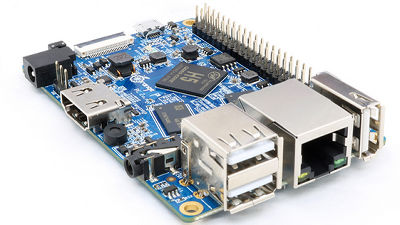

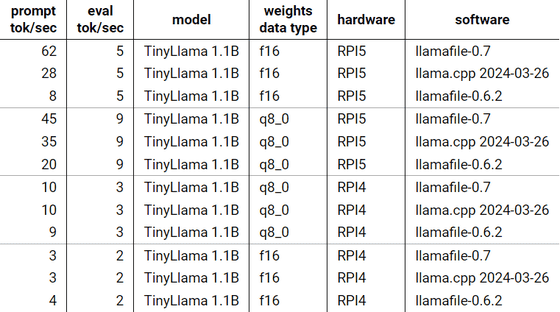

Tunney also shows the results of running on the Raspberry Pi 5 (ARMv8.2) and Raspberry Pi 4 (ARMv8.0). On the Raspberry Pi 5, the difference from the previous version is up to 8 times.

According to the release, llamafile v0.7 supports Intel's AVX-512 instruction set extension, which is reported to make prompt evaluation up to 10 times faster on CPUs such as those based on AMD's Zen 4 architecture.
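
For readers who want to know whether their own machine could benefit, the following is a minimal sketch, not part of llamafile, that checks for AVX-512 on Linux. It assumes an x86 system where the kernel lists CPU features on the `flags` line of `/proc/cpuinfo`, and it treats the presence of `avx512f` (AVX-512 Foundation) as the indicator. The helper names `cpu_flags` and `has_avx512` are hypothetical and chosen only for illustration.

```python
from pathlib import Path


def cpu_flags(cpuinfo_text: str) -> set[str]:
    """Collect the CPU feature flags listed in /proc/cpuinfo-style text."""
    flags: set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        # On x86 Linux, features appear on lines whose key is "flags".
        if sep and key.strip() == "flags":
            flags.update(value.split())
    return flags


def has_avx512(cpuinfo_text: str) -> bool:
    """Return True if the AVX-512 Foundation flag (avx512f) is present."""
    return "avx512f" in cpu_flags(cpuinfo_text)


if __name__ == "__main__":
    path = Path("/proc/cpuinfo")  # assumption: Linux on x86
    if path.exists():
        print("AVX-512F available:", has_avx512(path.read_text()))
    else:
        print("No /proc/cpuinfo on this system; cannot check this way.")
```

This only reports whether the CPU advertises the instruction set. Whether a given run actually gets faster depends on the model and workload, as the benchmarks above suggest.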

in Software, Posted by logc_nt