Apple announces a method to build multimodal AI that can achieve state-of-the-art performance on multiple AI benchmarks, potentially a major advancement for AI and Apple products

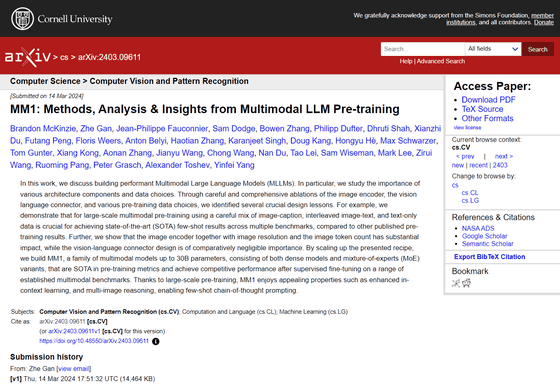

A team of Apple researchers has announced MM1, a method for building high-performance multimodal large language models (MLLMs).

[2403.09611] MM1: Methods, Analysis & Insights from Multimodal LLM Pre-training

Apple researchers achieve breakthroughs in multimodal AI as company ramps up investments | VentureBeat

https://venturebeat.com/ai/apple-researchers-achieve-breakthroughs-in-multimodal-ai-as-company-ramps-up-investments/

Apple's research team has developed a new method, MM1, for training large language models on both text and images. Technology outlet VentureBeat commented: 'It will be possible to build more powerful and flexible AI systems, which could be a major advancement for AI and Apple products.'

Apple's research paper on MM1 shows what results can be achieved by carefully combining different kinds of training data and model architectures. According to the paper, MM1 achieves state-of-the-art performance on a range of AI benchmarks.

'We show that extensive multimodal pre-training, which carefully combines image-caption, interleaved image-text, and text-only data, achieves state-of-the-art results across multiple benchmarks,' the researchers wrote. 'We have demonstrated that it is important to

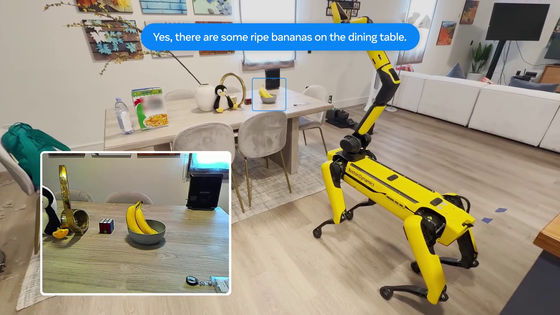

By training the model on diverse datasets spanning visual and linguistic information, MM1 achieves superior performance on tasks such as image captioning, visual question answering, and natural language inference.

The researchers also found that the choice of image encoder and the resolution of the input images have a significant impact on the model's performance. As the team wrote, 'We showed that the image encoder, image resolution, and number of image tokens have a large impact, while the design of the vision-language connector is relatively negligible.' VentureBeat noted that this suggests continued scaling and refinement of the visual components of multimodal models will be key to unlocking further gains.
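To illustrate why image resolution and the number of image tokens are linked, here is a rough sketch assuming a generic ViT-style image encoder that splits a square image into fixed-size patches, with one token per patch. The function name and the 14-pixel patch size are illustrative assumptions, not details taken from the MM1 paper, and the sketch ignores any class token or token pooling a real connector might apply.

```python
def vit_patch_tokens(resolution: int, patch_size: int = 14) -> int:
    """Hypothetical helper: count patch tokens for a square image.

    Assumes a ViT-style encoder with non-overlapping square patches,
    one token per patch, and no class token or downstream pooling.
    """
    if resolution % patch_size != 0:
        raise ValueError("resolution must be divisible by patch_size")
    per_side = resolution // patch_size  # patches along one edge
    return per_side * per_side           # tokens grow with the square of resolution


for res in (224, 336):
    print(res, vit_patch_tokens(res))
```

Under these assumptions, raising the input from 224 to 336 pixels grows the token count from 256 to 576, which is one reason resolution and token count tend to move together.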

With up to 30 billion parameters, MM1 shows strong in-context learning capabilities and can perform multi-step reasoning over multiple input images using chain-of-thought prompting. This suggests that large multimodal models could tackle complex, open-ended problems that require grounded language understanding and generation.

Regarding MM1, VentureBeat noted: 'MM1 was announced as Apple ramps up investment in the AI field to catch up with rivals such as Google, Microsoft, and Amazon, who are integrating generative AI into their products.' Apple has long been rumored to be investing heavily in AI development, and at its annual shareholders' meeting at the end of February 2024, CEO Tim Cook acknowledged that the company is investing significantly in AI.

Apple reveals that it is investing heavily in AI development and says it will ``reveal details in the second half of 2024'' - GIGAZINE

Apple is reportedly developing a framework for building large language models called 'Ajax' and a chat AI called 'Apple GPT.' Apple's goal is believed to be integrating Apple GPT with services such as Siri, the Messages app, and Apple Music, using AI to automatically create music playlists, help developers write code, hold open-ended conversations, and complete tasks.

Is Apple developing its own large-scale language model and chatbot AI 'Apple GPT'? - GIGAZINE


in Software, Posted by logu_ii