The Oversight Board of Meta, the operator of Instagram and Facebook, says "Israel-Hamas videos should not have been removed"

The Oversight Board, which reviews the content removal decisions made on Meta's platforms, has ruled that Meta was wrong to delete a video related to the Israel-Hamas war that was posted on Facebook, saying that the video "should not have been deleted."

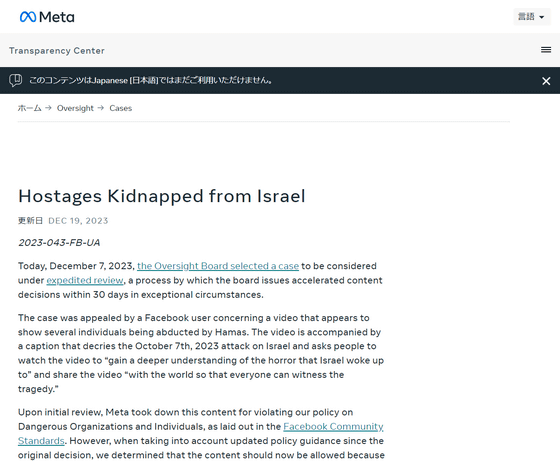

Hostages Kidnapped from Israel

Oversight Board Issues First Expedited Decisions About Israel-Hamas Conflict | Oversight Board

https://oversightboard.com/news/1109713833718200-oversight-board-issues-first-expedited-decisions-about-israel-hamas-conflict/

Oversight Board | Independent Judgment. Transparency. Legitimacy.

https://www.oversightboard.com/decision/FB-M8D2SOGS

Meta Oversight Board says Israel-Hamas videos should not have been removed | Reuters

https://www.reuters.com/technology/meta-oversight-board-says-israel-hamas-videos-should-not-have-been-removed-2023-12-19/

Meta Watchdog Reverses Facebook Decision To Remove Graphic Hamas Attack Video - The Messenger

https://themessenger.com/tech/meta-watchdog-reverses-facebook-decision-to-remove-graphic-hamas-attack-video

Meta criticized over removal of videos showing Israel-Hamas war - The Verge

https://www.theverge.com/2023/12/19/24007655/meta-oversight-board-removed-videos-israel-hamas-conflict

To protect freedom of expression on platforms such as Facebook and Instagram, Meta established the Oversight Board as an independent adjudication body that rules on what content should be removed from the platforms and what content should be allowed.

The Oversight Board selected the cases for expedited review on December 7, 2023 local time. The review covered two removals: a video deleted from Facebook showing a woman abducted by Hamas begging for her life, and a video deleted from Instagram showing an airstrike. The hostage video was captioned with text condemning the attack on Israel that took place on October 7, 2023, such as "Watch the videos to better understand the horrors suffered by Israel" and "There is a need to share this information with the world in a way that everyone can understand."

Meta removed the video for violating its Violence and Incitement policy and its Dangerous Organizations and Individuals policy. The Violence and Incitement policy allows Meta to remove content that clearly identifies a hostage, even if the goal is to denounce or raise awareness about the hostage situation. The Dangerous Organizations and Individuals policy specifically prohibits third-party imagery depicting the moment of a designated terrorist attack on visible victims.

However, the Oversight Board conducted an expedited review to determine whether the deletion of the video was appropriate. Then, on December 19, 2023 local time, the Oversight Board announced, "After reviewing Meta's community standards, we determined that the content was only shared in the context of condemnation or awareness-raising." The video that Meta had removed was deemed to be "content that should be allowed." The Board also approved Meta's subsequent decision to restore the video with a warning screen.

Today, the Oversight Board published its first expedited decisions in two cases about the Israel-Hamas conflict.

https://t.co/Hx2kiJ6bBg — Oversight Board (@OversightBoard) December 19, 2023

In each case, the Board overturned Meta's original decision to remove the content but approved its subsequent decision to restore the post with a warning screen.

The Oversight Board explained that it overturned the removal because the video is valuable for understanding the suffering of the Israeli people in the war.

Meta said of the Oversight Board's decision: "We welcome the Oversight Board's decision on this matter. Expression and safety are both important to the people who use our services." Meta noted that the Board overturned its original decision to remove the content but approved its subsequent decision to restore the content with a warning screen, and added that because it had already restored the content, no further action will be taken.

Meta excludes content in some categories from being recommended, and the Oversight Board disagrees with Meta's decision to exclude this content from recommendations. However, because the Oversight Board did not make any recommendations as part of its decision, there will likely be no further updates on this matter.

Meta is not the only company whose handling of content related to the Israel-Hamas war is under close scrutiny. Verified users of X (formerly Twitter), meaning accounts with blue check marks, have been criticized as "misinformation superspreaders" by NewsGuard, a misinformation monitoring organization. TikTok and YouTube have been investigated under the EU's Digital Services Act following a surge in illegal content and misinformation on their platforms.

in Software, Web Service, Posted by logu_ii