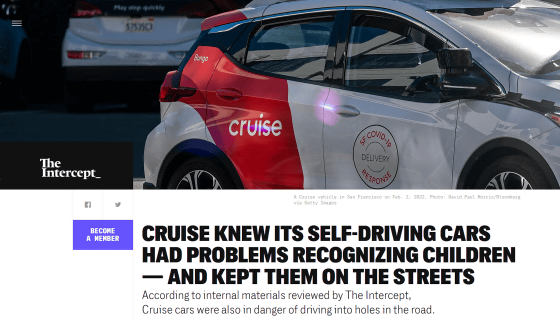

Cruise, a subsidiary of GM, reportedly continued testing on public roads despite knowing that "self-driving cars were having problems detecting children."

The Intercept reports that Cruise, a self-driving company owned by General Motors (GM), continued to test on public roads despite recognizing that its self-driving cars had problems "detecting children and paying appropriate attention."

Cruise Self-Driving Cars Struggled to Recognize Children

Cruise was testing its self-driving cars on public roads in several American cities such as San Francisco and Miami. There have been reports of self-driving cars causing accidents and disrupting traffic, but the company has been promoting their safety, for example by announcing data in October 2023, together with GM and others, claiming that "self-driving cars are safer than cars driven by humans."

However, shortly after that data was published, the California Department of Motor Vehicles (DMV) announced that it would immediately suspend Cruise's permits to deploy autonomous vehicles and conduct driverless testing. The reason given was that Cruise did not properly disclose information to the DMV about an accident caused by one of its self-driving cars in early October. Since then, Cruise has decided to temporarily suspend driverless testing of self-driving cars outside of California.

California immediately suspends Cruise's permission for autonomous vehicle deployment and unmanned driving tests - GIGAZINE

Kyle Vogt, Cruise's co-founder and CEO, sent a message to employees regarding this matter: "Safety is at the core of everything we do at Cruise." Self-driving car developers such as Cruise have long emphasized that the software installed in self-driving cars does not get tired, drunk, or distracted the way humans do.

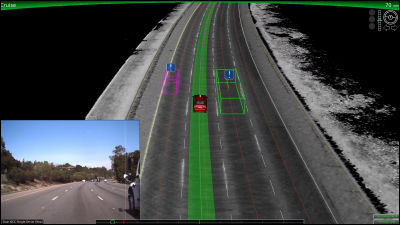

However, internal documents including chat logs obtained by The Intercept show that even before Cruise suspended public road testing of its self-driving cars, the company had recognized problems with their "ability to detect children and potholes." Children may suddenly start running or jump out onto the road, so a human driver who sees a child is expected to be extra careful. However, one safety evaluation stated that "Cruise's self-driving car may not be able to pay special attention to children."

Cruise's internal documents noted that, during the development of its self-driving cars, data was lacking for scenarios centered on children, such as children suddenly separating from accompanying adults, children falling, children riding bicycles, and children wearing unusual clothing. After a simulated test in which a Cruise vehicle drove near a small child, it was reported that "Based on the simulation results, we cannot exclude the possibility that a fully self-driving car hit a child." In another test run, the vehicle succeeded in detecting a child-sized dummy, but the side mirror of the self-driving car, which was traveling at 28 miles per hour (about 45 km/h), still struck the dummy.

Cruise explains that the problem was not that the company's self-driving software failed to detect children, but rather that it failed to classify them as a "special category of pedestrians that require caution." "We have the lowest risk tolerance for contact with children and treat their safety as our top priority," Cruise spokesperson Eric Moser said in a statement. "There is no vehicle that has zero risk of collision, no matter what it runs on."

Furthermore, documents obtained by The Intercept show that Cruise knew more than a year ago that its cars could not detect "large holes created by construction work that had workers inside." Cruise estimated that, even with only a limited number of self-driving cars in testing, one car would drive into an open hole every year, and one car would drive into a hole with workers inside every four years. If the number of self-driving cars on public roads increases, the risks will increase accordingly.
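To make the scaling argument concrete, here is a minimal Python sketch. It takes the two rates reported above (one open-hole incident per year, one occupied-hole incident every four years) as the baseline for the test fleet, and it assumes that incidents scale linearly with fleet size and arrive independently (a Poisson model). Those assumptions, along with the function names and the fleet multipliers, are illustrative only and do not come from Cruise's documents.

```python
import math

# Baseline rates for the limited test fleet, as reported in the article.
OPEN_HOLE_RATE = 1.0         # incidents per year: one open hole per year
OCCUPIED_HOLE_RATE = 1 / 4   # incidents per year: one occupied hole per four years


def expected_incidents(base_rate_per_year, fleet_multiplier, years=1.0):
    """Expected incident count, ASSUMING the rate scales linearly with fleet size."""
    return base_rate_per_year * fleet_multiplier * years


def prob_at_least_one(expected_count):
    """Chance of one or more incidents, ASSUMING independent (Poisson) arrivals."""
    return 1.0 - math.exp(-expected_count)


if __name__ == "__main__":
    # Hypothetical fleet sizes, expressed as multiples of the test fleet.
    for multiplier in (1, 10, 100):
        occupied = expected_incidents(OCCUPIED_HOLE_RATE, multiplier)
        print(
            f"fleet x{multiplier}: "
            f"{expected_incidents(OPEN_HOLE_RATE, multiplier):.2f} open-hole and "
            f"{occupied:.2f} occupied-hole incidents expected per year, "
            f"P(at least one occupied-hole incident) = {prob_at_least_one(occupied):.2f}"
        )
```

Under these assumptions, a fleet four times the size of the test fleet would be expected to drive into an occupied hole about once a year rather than once every four years.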

In fact, video footage of a Cruise vehicle seen by The Intercept shows the self-driving car driving toward a hole at a construction site where multiple workers were located, then stopping just a few centimeters in front of the workers. The construction site was surrounded by orange cones, and one of the workers was trying to stop the car by waving a "SLOW" sign, but the self-driving car apparently failed to detect this properly.

Cruise relies heavily on AI to perceive and navigate the outside world. In 2021, Cruise's AI chief said, "We can't create Cruise without AI," and the company regularly advertises the capabilities of its AI. However, this series of issues points to the challenges of a "machine learning-first approach."

Phil Koopman, an engineering professor at Carnegie Mellon University, agrees that Cruise's listing and evaluation of various safety risks is a positive sign. However, the safety issues that Cruise is concerned about are not new; they have been known in the field of autonomous robotics for decades. Koopman points out that the problem with technology companies that have a machine learning, data-driven culture is that they do not think about hazards in advance, only once they actually encounter them.

"Cruise should have listed its hazards from day one," said Koopman. "If you only train on how to deal with things you've already encountered, there are many dangers you won't be aware of until they happen to your own car. Therefore, machine learning is fundamentally unsuitable for safety."

in Vehicle, Posted by log1h_ik