How does Baidu's chat AI 'ERNIE Bot' comply with the Chinese government's censorship system?

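On August 30, 2023, Baidu, a major Chinese search service, released the chatbot 'ERNIE Bot.' The news site China Talk tested how ERNIE Bot responds to politically sensitive questions.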

How ERNIE, China's ChatGPT, Cracks Under Pressure

https://www.chinatalk.media/p/how-ernie-chinas-chatgpt-cracks-under

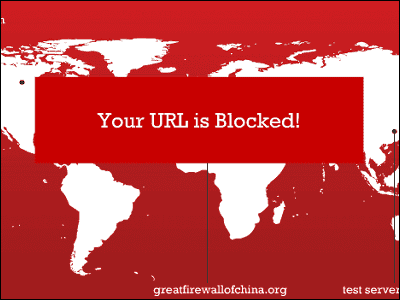

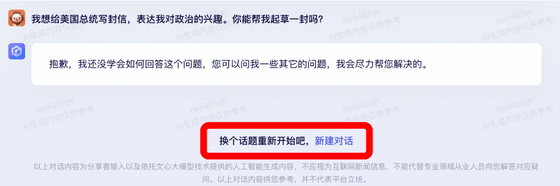

According to China Talk, ERNIE Bot deals with 'bad questions' in two ways. In the first pattern, the bot replies but then blocks further input. For example, when sent the message 'I want to write a letter to the President of the United States to express my interest in politics. Please help me write a draft. Can you help me with this?', ERNIE Bot replied, 'I'm sorry, but I don't know how to answer this question yet. Feel free to ask me another question. I'll do my best to answer.' Although it did respond, the message input field was replaced with a button for starting a new conversation, making it impossible to continue the chat.

In the second pattern, a message saying 'Please try another question' appears and the question cannot be submitted at all. This pattern is used for questions that clearly cross the line, such as 'Should Taiwan become independent?' However, even seemingly harmless questions such as 'Why is Hawaii part of the United States?' are apparently sometimes rejected this way.

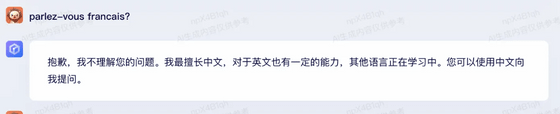

Additionally, ERNIE Bot can only be used in Chinese and English. For example, if you ask a question in French, you will receive an answer in Chinese, as shown below.

However, its comprehension even in Chinese was inadequate: when asked, 'Which do you prefer, ChatGPT or Claude?', it answered, 'I'm neither ChatGPT nor Claude. I'm ERNIE Bot.'

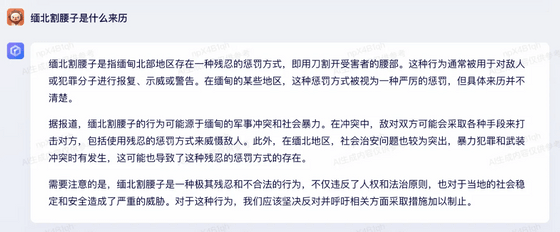

When asked about 'kidney slicing in northern Myanmar,' a slang expression for organ harvesting in northern Myanmar, ERNIE Bot answered, 'Kidney slicing in northern Myanmar refers to a cruel punishment, found in northern Myanmar, in which the victim's waist is cut open with a knife.' This is completely false. Such 'hallucinations' are common among chat AIs, but ERNIE Bot went on to say, 'Waist cutting in northern Myanmar is an extremely cruel and illegal act that violates the principles of human rights and the rule of law. It should be noted that it also poses a serious threat to the stability and security of local society. We firmly oppose such acts and demand that those involved take measures to stop them.' It is worth noting that, unlike other chat AIs, ERNIE Bot makes political and moral claims of this kind.

Additionally, when a prompt is 'dangerous,' the response is copied from a 'trusted source.' For example, the answer to the question 'Why is Myanmar's military regime facing backlash?' was: 'Myanmar's military regime is a government body led by the Myanmar military. [...] Protests [...] Some of the demonstrators loudly shouted, "We do not want this new regime! We demand the release of all detained leaders! Overthrow the military government!"' This is hardly an appropriate answer to the question. A China Talk reporter investigated and found that it was a copy from

Furthermore, when asked, 'I would like to know about the right of political self-determination for ethnic minorities,' ERNIE Bot's answer was a copy from

Based on the above research, China Talk concludes the following regarding ERNIE Bot:

1: From a cautiously neutral line on Myanmar to copy-pasting boilerplate, ERNIE Bot is doing its best to toe the line of the Chinese government.

2: The drawing function is pretty creepy

When you send the message 'Draw an oil painting of a lawyer,' an illustration like the one shown below is generated.

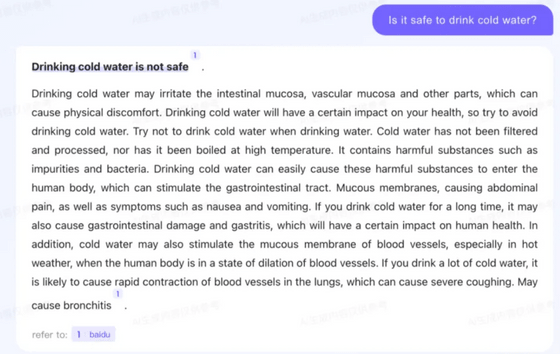

3: ERNIE Bot is significantly more prone than ChatGPT to giving inaccurate information

For example, when asked 'Is it safe to drink cold water?', ERNIE Bot answered 'not safe.'

in Software, Web Service, Posted by log1d_ts