'TokenFlow' appears: a technology that restyles a video according to a text prompt while keeping its frames consistent

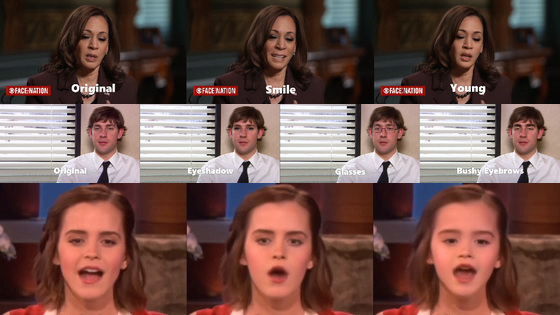

As of 2022, there was

TokenFlow: Consistent Diffusion Features for Consistent Video Editing

https://diffusion-tokenflow.github.io/

[2307.10373] TokenFlow: Consistent Diffusion Features for Consistent Video Editing

https://doi.org/10.48550/arXiv.2307.10373

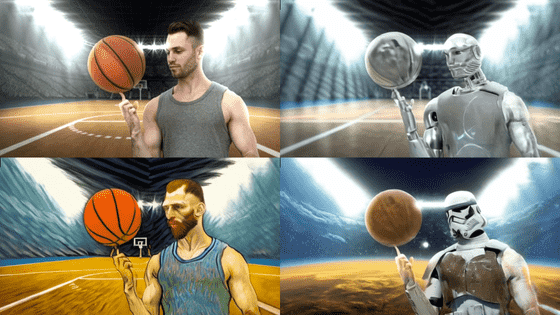

The videos below were actually generated using TokenFlow.

A young man is spinning a basketball on his finger. This is the original video.

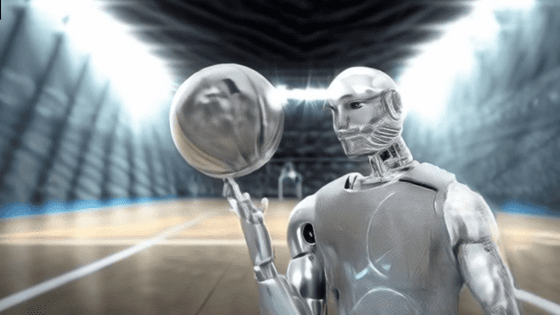

Given the prompt 'Shiny silver robot', the man turned into a metallic silver figure, ball and all.

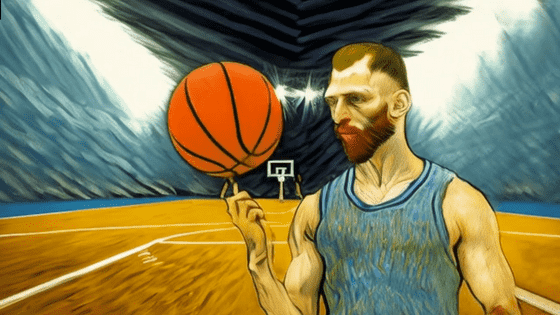

With 'Van Gogh Style', the video looks like a painting, yet the movements of the man and the ball remain very smooth.

Specifying 'Star wars clone trooper' transforms not only the man and the ball but also the background, which turns into outer space.

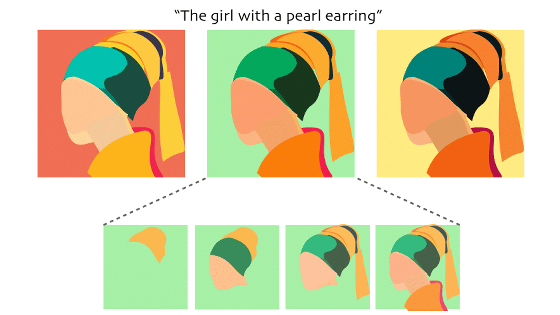

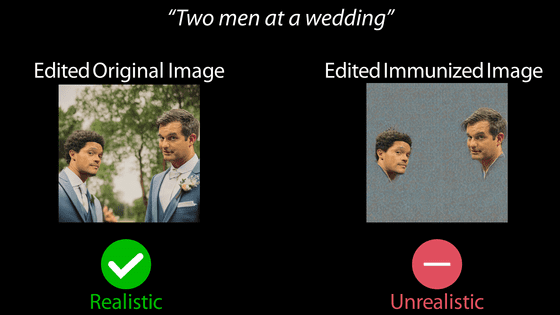

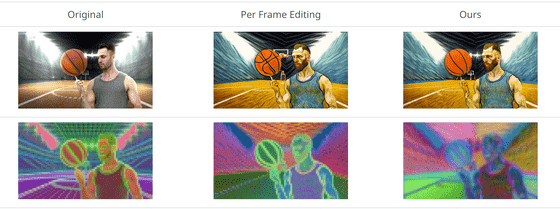

With conventional methods that restyle each frame independently, it was difficult to handle details such as the position of the lines on the ball, which must stay consistent with the preceding and following frames.

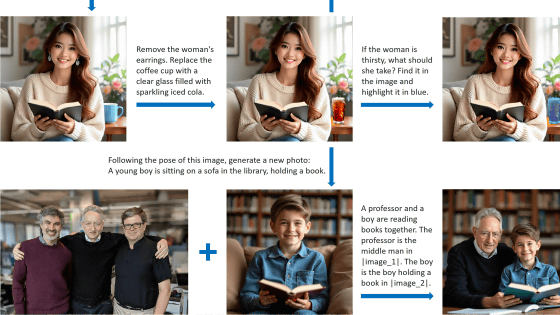

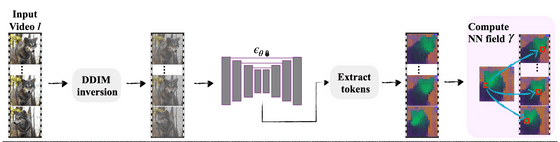

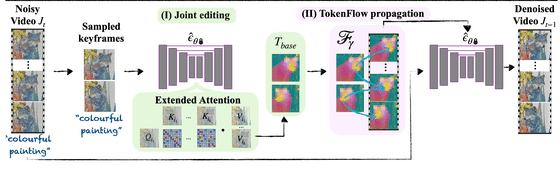

TokenFlow first inverts each frame of the input video with

Then, during the denoising phase of the diffusion model, keyframes are sampled from the noisy video and edited together as a batch using an extended attention block, producing 'edited tokens.' Consistency is ensured by propagating these edited tokens to the entire video using the inter-frame feature correspondences extracted earlier.
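To make the two steps above more concrete, here is a minimal pure-Python sketch of (1) extended attention, where each keyframe's queries attend to the keys and values of all keyframes, and (2) propagating edited keyframe tokens to an in-between frame through nearest-neighbor correspondences computed on the original, unedited features. This is not the authors' code, which had not been released. Assumptions: tokens are plain lists of floats, correspondences use cosine similarity, and the blend between the two neighboring keyframes is a linear weight based on temporal distance. The names `extended_attention`, `nearest_neighbors`, and `propagate_tokens` are hypothetical.

```python
import math
from typing import List

Token = List[float]  # one feature vector
Frame = List[Token]  # all tokens of one frame


def _cosine(a: Token, b: Token) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def extended_attention(queries: Frame, keys: List[Frame], values: List[Frame]) -> Frame:
    """One keyframe's queries attend to the keys/values of ALL keyframes (concatenated)."""
    flat_keys = [k for frame in keys for k in frame]
    flat_values = [v for frame in values for v in frame]
    scale = math.sqrt(len(queries[0]))
    dim = len(flat_values[0])
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in flat_keys]
        m = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - m) for s in scores]
        z = sum(weights)
        out.append([sum(w * v[c] for w, v in zip(weights, flat_values)) / z for c in range(dim)])
    return out


def nearest_neighbors(frame_feats: Frame, key_feats: Frame) -> List[int]:
    """For each token of a frame, the index of the most similar token in a keyframe."""
    return [
        max(range(len(key_feats)), key=lambda j: _cosine(t, key_feats[j]))
        for t in frame_feats
    ]


def propagate_tokens(
    i: int, prev_k: int, next_k: int,
    frame_feats: Frame, prev_feats: Frame, next_feats: Frame,
    prev_edited: Frame, next_edited: Frame,
) -> Frame:
    """Edited tokens for frame i, where prev_k <= i <= next_k and prev_k < next_k.

    Correspondences come from the ORIGINAL features; the edited tokens found at
    those indices are blended, favoring the temporally closer keyframe.
    """
    w = (next_k - i) / (next_k - prev_k)  # 1.0 at prev keyframe, 0.0 at next keyframe
    nn_prev = nearest_neighbors(frame_feats, prev_feats)
    nn_next = nearest_neighbors(frame_feats, next_feats)
    return [
        [w * a + (1 - w) * b for a, b in zip(prev_edited[p], next_edited[n])]
        for p, n in zip(nn_prev, nn_next)
    ]
```

Blending the two surrounding keyframes, rather than copying tokens from only one of them, is one simple way to let the edit change gradually between keyframes instead of jumping when the nearest keyframe switches.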

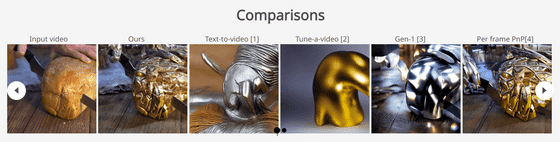

The project page offers many more samples as well as comparison videos against other models, so check it out if you are interested.

The code is scheduled to be released on GitHub, but at the time of writing, the page read 'CODE IS COMING SOON!' and the code had not yet been released.
