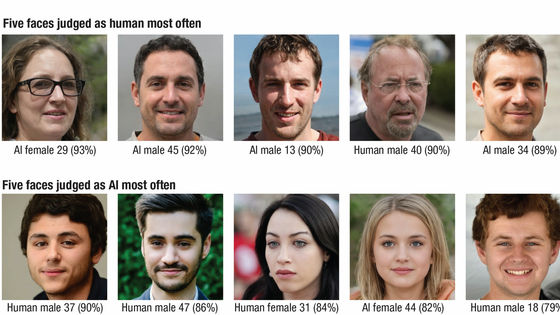

AI found to rate women's underwear and sportswear as "sexual" regardless of context and shadowban them; experts say it is damaging women-led businesses

In recent years, AI has been used to moderate content posted on platforms such as social networks, curbing the spread of extreme content and sometimes restricting accounts. However, an investigation by The Guardian, a major British newspaper, found that the content moderation AI tools provided by major technology companies such as Google, Microsoft, and Amazon rate photos of women as more sexual than photos of men with similar exposure, damaging women-led businesses and widening social disparities.

'There is no standard': investigation finds AI algorithms objectify women's bodies | Artificial intelligence (AI)

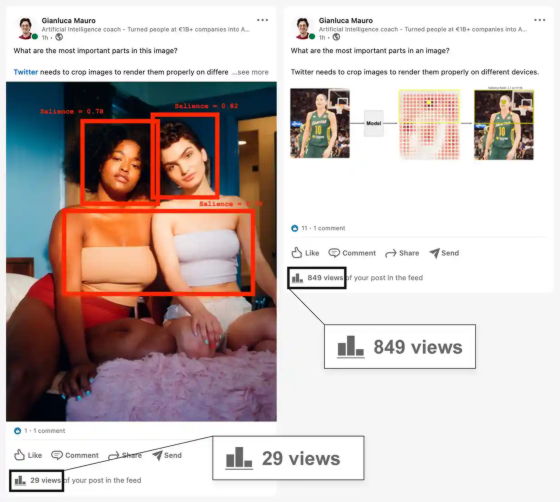

In May 2021, AI entrepreneur Gianluca Mauro posted on LinkedIn, the business-oriented social network, about AI-based image cropping. However, while Mauro's posts normally got about 1,000 views in an hour, this one got only 29 views in an hour.

Suspecting that something was wrong, Mauro wondered whether the cause was the photo in the post, which showed a woman in a tube top, so he re-posted the same text with a different image. The new post received 849 views in an hour, suggesting that the original post had been hit by a "shadowban" that curbed its spread.

LinkedIn uses content moderation AI provided by its parent company, Microsoft. Microsoft says the algorithm can "detect adult content in images so that developers can restrict the display of these images in their software."

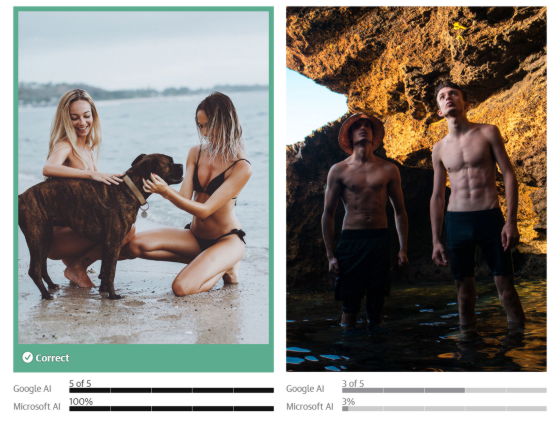

The Guardian therefore ran an experiment using Microsoft's AI algorithm and LinkedIn. The image on the left shows two men in swimsuits that cover only the lower body, and the image on the right shows two women in swimsuits that cover both the chest and the lower body.

Although the men's swimsuits are longer, the women, who also cover their chests, arguably show less skin overall. Even so, the racy score (raciness score) assigned by Microsoft's algorithm was about 14% for the left image and about 96% for the right image, and only the right image was classified as "racy content." When The Guardian actually posted the following photos on LinkedIn, the women's photo was viewed only 8 times in an hour, while the men's photo was viewed 655 times in an hour, suggesting that the women's photo had been shadowbanned.

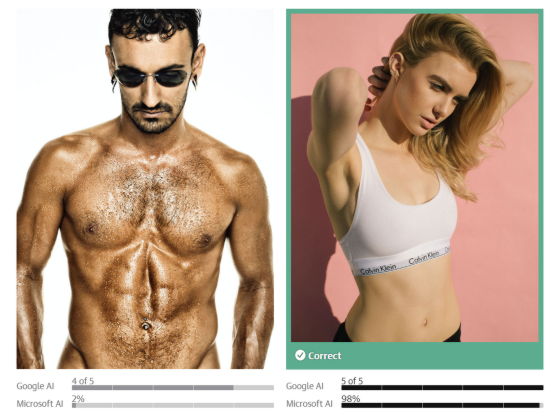

The Guardian tested hundreds of photos with content moderation AI from Google and Microsoft. The results showed that the AI clearly tends to rate photos of women as more "sexual" than photos of men. For example, the left photo was rated "5 out of 5" by Google's AI and "100%" by Microsoft's AI, while the right photo was rated "3 out of 5" by Google's AI and "3%" by Microsoft's AI.

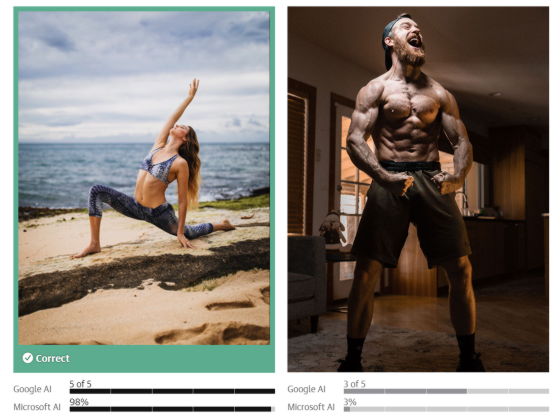

Photos of women doing Pilates were likewise rated "5 out of 5" by Google's AI and "98%" by Microsoft's AI, whereas photos of men were rated only "3 out of 5" and "3%" for raciness.

In the two photos below, the man is judged to be less sexual than the woman wearing a sports bra, even though he is wearing no clothes in the part of the body shown.

A similar trend was also confirmed in the evaluation of medical photographs. Regarding the following photos of clinical breast examinations published by the

To probe where Microsoft's AI draws the line on what it considers sexual, an experiment was also conducted in which Mauro, stripped to the waist, put on and took off a women's bra while Microsoft's AI measured the raciness. With his upper body bare and his hands behind his back, the raciness score was 16%.

However, when he put on the bra, the raciness score rose to 97%, even though less of his body was exposed than before.

When he took the bra off and held it next to his body, the raciness score was 99%, even though he was no less covered than in the first photo. Kate Crawford, a professor at the University of Southern California, said the AI's judgment shows that the bra is being viewed without context, not as "clothing that many women wear every day" but as "inherently racy."

"This is totally barbaric," said Leon Derczynski, a computer science professor at the IT University of Copenhagen who studies online harm. "The

Bias gets built into AI because humans label the data used for training. Margaret Mitchell, chief ethics scientist at AI company Hugging Face, pointed out that the photos used to train these algorithms were probably labeled by heterosexual men. Ideally, tech companies should ensure that their datasets incorporate diverse perspectives and should thoroughly analyze who did the labeling, but Mitchell said, "There is no standard of quality here."

The gender bias by which AI rates photos of women as more sexual than photos of men is actually hurting women's businesses. Australian photographer Beck Wood works to capture important moments for mothers and children, such as pregnancy and breastfeeding, but most of her advertising relies on Instagram, so shadowbans caused by the algorithm directly affect her business.

"Instagram is where people find you. If you don't share your work, you won't get a job," said Wood, who posts some of the photos she takes on Instagram. However, because her photos inevitably show women who are pregnant or breastfeeding, she says her posts have often been removed from Instagram and she has received notifications that they would not be displayed in search results. Wood thought that things would get better over time, but the situation instead got worse in 2022, which she says was her worst year for business.

Wood, who has more than 13,000 followers on Instagram, said, "I'm very scared to post a photo, thinking, 'Will this be the post that loses me everything?'" Fearing an account suspension, Wood began covering the nipples in the photos she posted. But she started her career as a photographer out of the conviction that women should be able to express themselves, celebrate themselves, and be seen in all different sizes and shapes, so she is troubled by the contradiction of posting photos that hide women's bodies. "I feel like I'm part of perpetuating that ridiculous cycle," said Wood.

Mitchell points out that this kind of algorithm reproduces social prejudices, creating a vicious cycle in which people who are already easily marginalized are marginalized further. "In this case, the idea that 'women must hide their bodies more than men' creates social pressure on women and comes to be treated as the norm," she said.

in Software, Web Service, Posted by log1h_ik