A developer has built a 'Cyberpunk 2077'-style real-time translation system using Whisper and DeepL


GitHub - elanmart/cbp-translate

https://github.com/elanmart/cbp-translate

In developing a "translation system that displays what each speaker says on screen and translates it in real time," Mr. Elankowski decided that the system should meet the following conditions.

・Short videos can be processed

・Conversation content of multiple characters (speakers) can be translated

・Recognizes and transcribes both English and Polish speech

・Conversation can be translated into any language

・Each phrase can be assigned to a speaker

・Display the speaker on the screen

・Add subtitles to the original video like Cyberpunk 2077

・Nice front end

・Remote execution possible in the cloud

With the development of the machine learning ecosystem, he thought it was 'definitely possible' to develop a proof-of-concept translation system that meets the above requirements.

"The off-the-shelf tools are very robust and in most cases very easy to integrate. We were able to build the entire app without any manual labeling," says Elankowski. He added, "Development definitely took longer than expected, but most of the time was spent on problems other than machine learning (such as figuring out how to add Unicode characters to video frames)."

To see how the translation system Mr. Elankowski developed actually works, play the video by clicking the image below. The video is an interview conducted in Polish in the 1960s, translated into English in real time by the system.

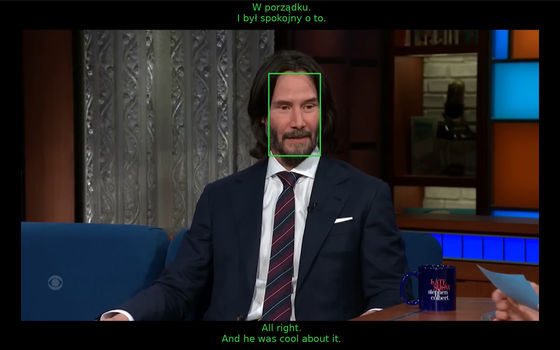

What the speaker says (in Polish) is displayed as text at the bottom of the screen, and the real-time English translation is displayed at the top. The text color changes depending on the speaker, which shows that the system meets two of Mr. Elankowski's requirements: "conversation content of multiple characters (speakers) can be translated" and "each phrase can be assigned to a speaker."

Clicking the image below plays a video in which an interview with Keanu Reeves, who plays Johnny Silverhand in Cyberpunk 2077, is translated from English to Polish in real time.

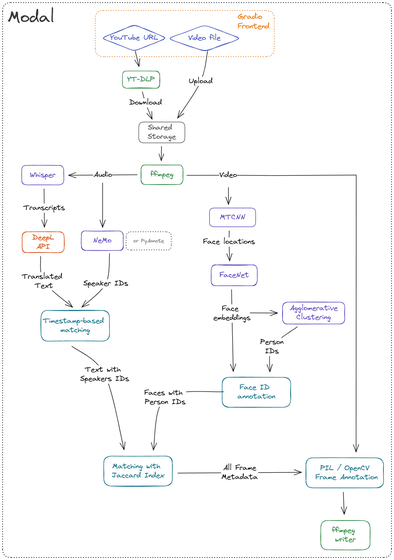

Mr. Elankowski used the following tools to develop the real-time translation system.

・Whisper for speech recognition

・NVIDIA's NeMo for speaker diarization (Note: PyAnnote was also tested, but the results were not satisfactory)

・DeepL for translation

・RetinaFace for face detection

・DeepFace for face recognition

・scikit-learn for identifying unique faces (via clustering)

・Gradio for the demo front end

・Modal for serverless deployment

He used the Python Imaging Library and OpenCV to draw the speakers' dialogue onto video frames, and yt-dlp to download sample videos from YouTube.

The sketch below shows how the above tools work together to perform real-time translation.

Explaining why he adopted Whisper for speech recognition, Mr. Elankowski said, "This is a great tool that recognizes English speech more accurately than I do. It handles multiple languages and works fine even when speech overlaps." He added, "I feed the whole audio stream to Whisper as a single input. To improve the code, I could split the audio by speaker, but I don't think that would improve the results."

Mr. Elankowski wanted to maximize translation quality, so he chose DeepL as the translation service. Explaining the choice, he said, "DeepL works better than Google Translate, and the API translates 500,000 characters for free per month."
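The free quota in the quote makes it easy to check in advance whether a transcript fits. Below is a minimal sketch, assuming the quota is counted per character of source text and using the 500,000-character figure quoted above. `FREE_CHARS_PER_MONTH` and `CharacterBudget` are hypothetical names for illustration; they are not part of the DeepL API or of cbp-translate.

```python
from typing import Iterable

# Monthly free allowance quoted in the article (assumed to count source characters).
FREE_CHARS_PER_MONTH = 500_000


class CharacterBudget:
    """Hypothetical helper that tracks characters spent against a monthly limit."""

    def __init__(self, limit: int = FREE_CHARS_PER_MONTH) -> None:
        self.limit = limit
        self.used = 0

    def remaining(self) -> int:
        """Characters still available this month."""
        return self.limit - self.used

    def charge(self, phrases: Iterable[str]) -> bool:
        """Reserve budget for a batch of phrases.

        Returns True and records the cost if the whole batch fits,
        otherwise returns False and leaves the budget unchanged.
        """
        cost = sum(len(phrase) for phrase in phrases)
        if cost > self.remaining():
            return False
        self.used += cost
        return True
```

A caller would run `budget.charge(phrases)` before sending a batch to the translation service and skip or defer the batch when it returns `False`.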

Elankowski experimented with both NeMo and PyAnnote for speaker diarization, the process of identifying which speaker is talking at any given moment. Not satisfied with PyAnnote's accuracy, he adopted NVIDIA's NeMo, a conversational AI toolkit built for researchers working on automatic speech recognition (ASR), text-to-speech synthesis (TTS), large language models (LLM), and natural language processing (NLP). Its accuracy on English is said to be particularly good. "It still struggles to identify the moments when multiple speakers talk at the same time, but it worked well enough for the demo," he said.
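One of the requirements listed earlier is that each phrase can be assigned to a speaker. Below is a minimal sketch of one way to do that, assuming the transcription yields timed segments and the diarization yields timed speaker turns: each segment goes to the speaker whose turns overlap it the most. The `Segment` and `Turn` types and the `assign_speakers` function are hypothetical and are not taken from cbp-translate, Whisper, or NeMo; the project's actual matching logic may differ.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Segment:
    """A transcribed phrase with start/end times in seconds (hypothetical type)."""
    start: float
    end: float
    text: str


@dataclass
class Turn:
    """A diarization result: one speaker talking over a time span (hypothetical type)."""
    start: float
    end: float
    speaker: str


def overlap(a_start: float, a_end: float, b_start: float, b_end: float) -> float:
    """Length in seconds of the intersection of two time intervals (0 if disjoint)."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def assign_speakers(
    segments: List[Segment], turns: List[Turn]
) -> List[Tuple[Optional[str], str]]:
    """Label each segment with the speaker whose turns overlap it the longest.

    Segments that overlap no turn are labeled None.
    """
    labeled = []
    for seg in segments:
        totals: Dict[str, float] = {}
        for turn in turns:
            shared = overlap(seg.start, seg.end, turn.start, turn.end)
            if shared > 0:
                totals[turn.speaker] = totals.get(turn.speaker, 0.0) + shared
        speaker = max(totals, key=totals.get) if totals else None
        labeled.append((speaker, seg.text))
    return labeled
```

Summing overlap per speaker, rather than taking the first matching turn, keeps the assignment stable when a phrase slightly straddles the boundary between two turns. It does not resolve truly simultaneous speech, which the quote above notes is still difficult.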

RetinaFace is used for face detection in videos, leveraging a pre-trained model to detect every face that appears in each frame. Its face detection is very robust and reliable, but Elankowski cites one drawback: "the code can only process one frame at a time because it relies on TensorFlow." As a result, running RetinaFace over every frame of a video takes a very long time. In fact, even on the latest GPUs, it takes several minutes to process a 60-second video.

The source code of the real-time translation system developed by Mr. Elankowski is published on GitHub.

GitHub - elanmart/cbp-translate

https://github.com/elanmart/cbp-translate

in Software, Posted by logu_ii