The strongest shogi AI reaches new ground: DeepMind's AI 'AlphaTensor' improves on matrix multiplication algorithms that had stagnated for over 50 years

DeepMind's AI 'AlphaGo', which

Discovering faster matrix multiplication algorithms with reinforcement learning | Nature

https://doi.org/10.1038/s41586-022-05172-4

Discovering novel algorithms with AlphaTensor

https://www.deepmind.com/blog/discovering-novel-algorithms-with-alphatensor

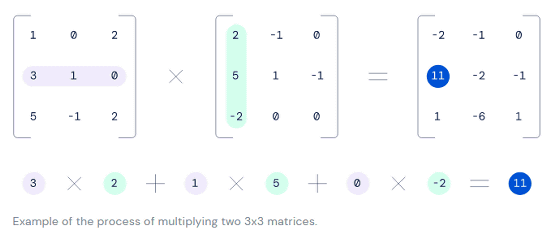

The 'algorithm for calculating the matrix product' that AlphaTensor tackled is used in many fields that touch daily life, such as image processing, game graphics, weather forecasting, and data compression. Many companies are developing and enhancing hardware to speed up matrix calculation, but if the algorithm itself becomes more efficient, the speed of matrix calculation may improve dramatically.

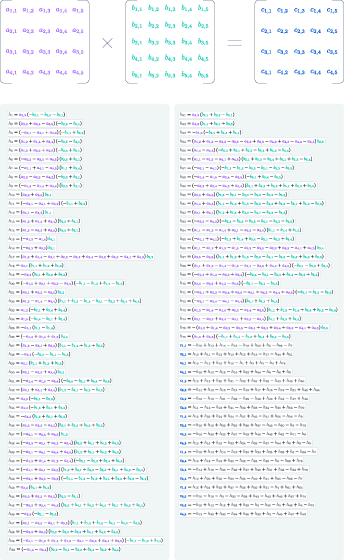

'Strassen's algorithm', announced in 1969, is widely known as a fast algorithm for calculating the matrix product. However, in the more than 50 years since it was announced, no significant efficiency improvement has been achieved. DeepMind therefore designed a 'game to find the best way to calculate the matrix product' and had AlphaTensor play it.
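For reference, the idea behind Strassen's algorithm can be illustrated on 2 x 2 matrices, where it uses 7 scalar multiplications instead of the naive 8. The following is a minimal sketch for illustration only; the function name `strassen_2x2` and the nested-list matrix representation are assumptions made here, not anything taken from DeepMind's code.

```python
def strassen_2x2(A, B):
    """Multiply two 2x2 matrices (nested lists) using 7 scalar multiplications."""
    (a11, a12), (a21, a22) = A
    (b11, b12), (b21, b22) = B

    # The seven products of Strassen's scheme.
    m1 = (a11 + a22) * (b11 + b22)
    m2 = (a21 + a22) * b11
    m3 = a11 * (b12 - b22)
    m4 = a22 * (b21 - b11)
    m5 = (a11 + a12) * b22
    m6 = (a21 - a11) * (b11 + b12)
    m7 = (a12 - a22) * (b21 + b22)

    # Each output entry is recovered with additions and subtractions only.
    return [
        [m1 + m4 - m5 + m7, m3 + m5],
        [m2 + m4, m1 - m2 + m3 + m6],
    ]


result = strassen_2x2([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(result)
```

Applied recursively to matrix blocks rather than single numbers, this saving of one multiplication per step is what makes the method faster than the naive approach for large matrices.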

At the start of play, AlphaTensor had no knowledge of any 'methods for calculating the matrix product', but as it played repeatedly, it began to acquire efficient calculation methods. For example, calculating the product of a '4 x 5 matrix' and a '5 x 5 matrix' requires 100 multiplications if done naively, and a known algorithm reduces this to 80. The algorithm derived by AlphaTensor, by contrast, finds the product with 76 multiplications.
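The naive figure of 100 comes from 4 x 5 x 5: each of the 4 x 5 output entries needs 5 multiplications. A small sketch that counts the multiplications while computing the product makes this concrete; the helper name `naive_matmul_count` is hypothetical and used here only for illustration.

```python
def naive_matmul_count(A, B):
    """Return (A @ B, number of scalar multiplications) for nested-list matrices."""
    rows, inner, cols = len(A), len(B), len(B[0])
    C = [[0] * cols for _ in range(rows)]
    mults = 0
    for i in range(rows):
        for j in range(cols):
            for k in range(inner):
                C[i][j] += A[i][k] * B[k][j]
                mults += 1  # one scalar multiplication per innermost step
    return C, mults


A = [[1] * 5 for _ in range(4)]  # 4 x 5 matrix of ones
B = [[1] * 5 for _ in range(5)]  # 5 x 5 matrix of ones
C, mults = naive_matmul_count(A, B)
print(mults)  # 4 * 5 * 5 = 100
```

The 80 and 76 figures quoted above count the same kind of scalar multiplication, so they correspond to savings of 20% and 24% over this baseline.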

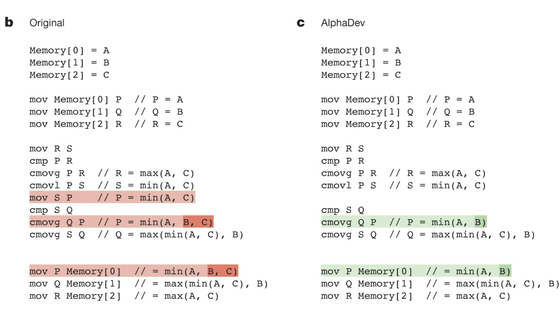

As a result of repeated play, AlphaTensor succeeded in rediscovering algorithms similar to Strassen's algorithm on its own, and also in deriving algorithms that are more efficient than existing ones. According to DeepMind, the algorithms discovered by AlphaTensor can handle huge matrices with thousands of rows. It also derived algorithms that speed up matrix computation by 10% to 20% on specific hardware such as the NVIDIA V100 and Google TPU v2.

DeepMind says that the algorithms discovered by AlphaTensor may greatly improve the efficiency of computations such as computer graphics, communication processing, and neural network training. DeepMind also points out that AlphaTensor's approach to algorithm discovery could be applied to computational problems other than matrix multiplication.

The matrix multiplication algorithms discovered by AlphaTensor are published in the following GitHub repository.

GitHub - deepmind/alphatensor

https://github.com/deepmind/alphatensor
