A solution to the problem of slow 'ls' commands in folders with too many files

In log storage, the number of files in one folder may grow to millions before you know it.

You can list a directory containing 8 million files! But not with ls.

http://be-n.com/spw/you-can-list-a-million-files-in-a-directory-but-not-with-ls.html

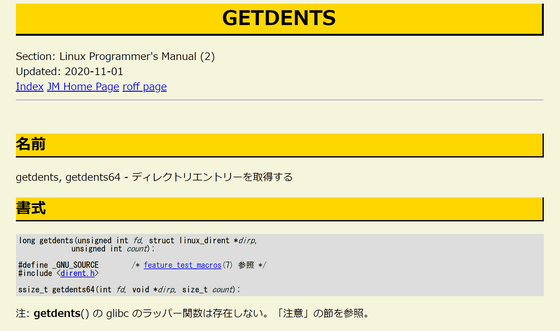

According to Congleton, everyday file-listing commands such as 'ls' and 'find' rely on libc's 'readdir()'. This 'readdir()' reads only 32KB of directory entries at a time, so reading every entry in a directory whose entry data has grown to hundreds of megabytes takes a very long time. So Mr. Congleton came up with a way to call the 'getdents()' system call directly, without going through 'readdir()'.
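For contrast, the conventional approach looks roughly like the sketch below. It is not taken from 'ls' or 'find' themselves; the function name `count_with_readdir` is made up for illustration, and the code only shows the general 'opendir()' / 'readdir()' pattern those tools build on.

```c
#include <dirent.h>
#include <stdio.h>

/* Hypothetical helper: count the entries in `path` with the usual
 * opendir()/readdir() loop. Returns the number of entries seen
 * (including "." and ".."), or -1 if the directory cannot be opened. */
long count_with_readdir(const char *path)
{
    DIR *dir = opendir(path);
    if (dir == NULL) {
        perror("opendir");
        return -1;
    }

    long count = 0;
    struct dirent *entry;
    /* Each readdir() call hands back one entry; libc refills its own
     * internal buffer behind the scenes whenever it runs dry, and the
     * caller has no say in how large that buffer is. */
    while ((entry = readdir(dir)) != NULL) {
        count++;
    }

    closedir(dir);
    return count;
}
```

The caller never sees the buffer here, which is why the only way to control how much is read per system call is to bypass 'readdir()'.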

'getdents()' is a low-level system call that reads directory entries from disk and takes three arguments: a file descriptor, a pointer to a buffer that receives the directory entries, and the size of that buffer. Mr. Congleton said that he started from the example on the manual page and first increased the buffer size to 5MB.

[code] #define BUF_SIZE (1024 * 1024 * 5) [/code]

Then, in the part of the main loop that prints the file information, entries whose inode number is 0 are skipped.

[code] if (dp->d_ino != 0) printf(...); [/code]

Mr. Congleton only needed the file names, so he changed the code to print nothing but the file name.

[code] if (d->d_ino) printf("%s\n", (char *) d->d_name); [/code]
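Putting the changes described so far together, a program along these lines might look like the sketch below. It is not Mr. Congleton's actual 'listdir.c': it assumes Linux, uses 'getdents64' through 'syscall()' instead of plain 'getdents' (the 64-bit variant is available on more architectures), and the function name `list_dir` is invented for this example. The struct layout follows the getdents manual page.

```c
#define _GNU_SOURCE
#include <fcntl.h>
#include <stdint.h>
#include <stdio.h>
#include <stdlib.h>
#include <sys/syscall.h>
#include <unistd.h>

#define BUF_SIZE (1024 * 1024 * 5)   /* 5MB, as in the article */

/* Record layout returned by getdents64, per the manual page. */
struct linux_dirent64 {
    uint64_t       d_ino;     /* inode number */
    int64_t        d_off;     /* offset to the next record */
    unsigned short d_reclen;  /* length of this record */
    unsigned char  d_type;    /* file type */
    char           d_name[];  /* null-terminated file name */
};

/* Hypothetical helper: write every file name in `path` to `out`, one
 * per line. Returns the number of names written, or -1 on error. */
long list_dir(const char *path, FILE *out)
{
    int fd = open(path, O_RDONLY | O_DIRECTORY);
    if (fd == -1) { perror("open"); return -1; }

    char *buf = malloc(BUF_SIZE);
    if (buf == NULL) { perror("malloc"); close(fd); return -1; }

    long count = 0;
    for (;;) {
        /* One system call fills as much of the 5MB buffer as it can. */
        long nread = syscall(SYS_getdents64, fd, buf, BUF_SIZE);
        if (nread == -1) { perror("getdents64"); count = -1; break; }
        if (nread == 0) break;                 /* end of directory */

        for (long pos = 0; pos < nread; ) {
            struct linux_dirent64 *d = (struct linux_dirent64 *)(buf + pos);
            if (d->d_ino != 0) {               /* skip entries with inode 0 */
                fprintf(out, "%s\n", d->d_name);
                count++;
            }
            pos += d->d_reclen;                /* step to the next record */
        }
    }

    free(buf);
    close(fd);
    return count;
}
```

A 'main()' that calls `list_dir(argv[1], stdout)` would be enough to turn this into a command-line tool.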

Then compile and run.

[code] gcc listdir.c -o listdir

./listdir [directory with insane number of files] > output.txt [/code]

In this way, Mr. Congleton succeeded in listing 8 million files. When he checked the size of the directory entry with the 'ls -dl' command, it was about 513MB, which means that reading it 32KB at a time would take 16,416 system calls. Mr. Congleton said that increasing the buffer size passed to 'getdents()' to reduce the number of system calls is important, especially in a slow virtual disk environment, because the number of system calls has a significant impact on execution time.
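The arithmetic behind that figure is a simple ceiling division, sketched below. The helper name `calls_needed` is made up for illustration, and the result is only an estimate: a real 'getdents()' call returns whole records and may not fill the buffer completely.

```c
/* Hypothetical helper: estimate how many read calls are needed to
 * drain `dir_bytes` of directory entry data when each call returns
 * at most `buf_bytes` (ceiling division). */
long long calls_needed(long long dir_bytes, long long buf_bytes)
{
    return (dir_bytes + buf_bytes - 1) / buf_bytes;
}
```

For 513MB of entries, `calls_needed(513LL * 1024 * 1024, 32LL * 1024)` gives 16,416, while the same directory read through a 5MB buffer needs only about 103 calls.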

in Software, Posted by log1d_ts