Research results published on what comment moderators should delete

In the comment sections of many social media platforms and websites, moderators remove posts and comments that are inappropriate or offensive. A research team at the Center for Media Engagement at the University of Texas at Austin investigated how people's reactions change depending on factors such as the type of comment deleted and whether the moderator explains why it was deleted.

How the Public Views Deletion of Offensive Comments - Center for Media Engagement

Comment moderators should focus more on hate speech than profanity, a new study suggests - Nieman Journalism Lab

https://www.niemanlab.org/2021/06/comment-moderators-should-focus-more-on-hate-speech-than-profanity-a-new-study-suggests/

The Center for Media Engagement's research team worked with research teams from Erasmus University in the Netherlands and NOVA University Lisbon in Portugal to survey people living in the United States, the Netherlands, and Portugal about comment moderation. The survey indicates that factors such as the type of comment and whether the reason for deletion is explained affect how fair and legitimate people perceive moderation to be.

The research team used templates designed to look like Disqus, an online service that provides commenting capabilities for websites and blogs, to display user comments and moderator explanations. The survey covered 902 subjects in the United States, 975 in the Netherlands, and 993 in Portugal, and the comments and moderator explanations were all translated into the main language of each country.

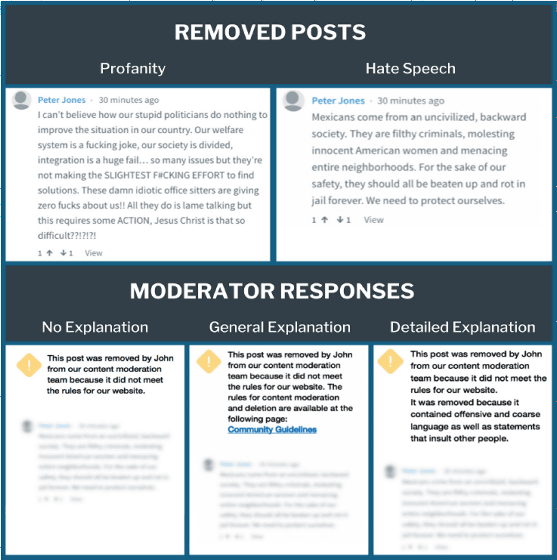

Subjects first saw two types of comments, 'comments containing profanity or abusive expressions' and 'hate speech against a particular group,' followed by a moderator's post about removing them. In the image below, the upper left is a comment of political criticism that includes abusive words such as 'f*cking', and the upper right is hate speech against Mexicans. The lower row shows, from the left, a moderator post that does not explain the reason for deletion, a moderator post with a general explanation and a link to the community guidelines, and a moderator post explaining the specific reason for the removal in question.

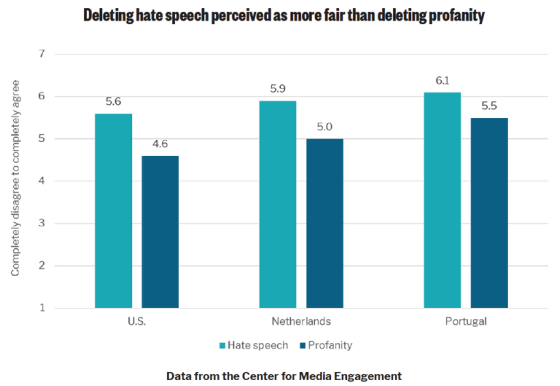

The subjects then answered questions about the fairness and legitimacy of the comment deletion and the trustworthiness of the moderators. The bar graph below shows scores for 'whether the comment deletion was fair' on a 7-point scale. The light blue bars are 'hate speech' and the dark blue bars are 'comments containing abusive words'. From the left, the results are for the United States, the Netherlands, and Portugal. In all three countries, removing hate speech tends to be perceived as fairer than removing comments that contain abusive words.

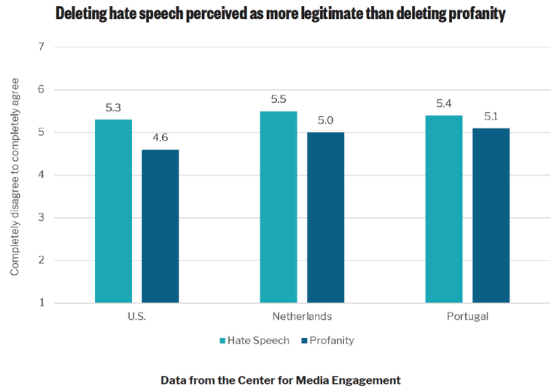

The graph below, which shows the results for 'legitimacy of comment deletion', indicates that removing hate speech is also more likely to be perceived as legitimate than removing comments containing abusive words.

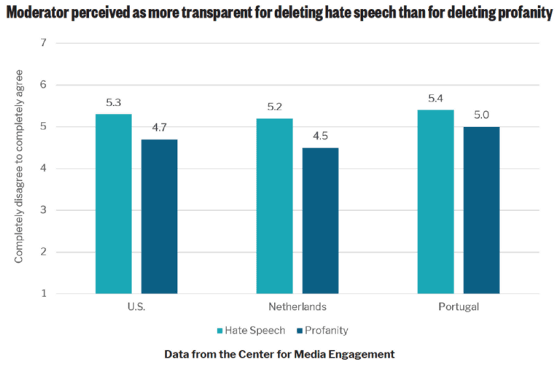

The type of comment deleted also affects how transparent the moderator is judged to be. According to the graph below, moderators who deleted hate speech tended to be rated as 'more transparent' than moderators who deleted comments containing abusive words.

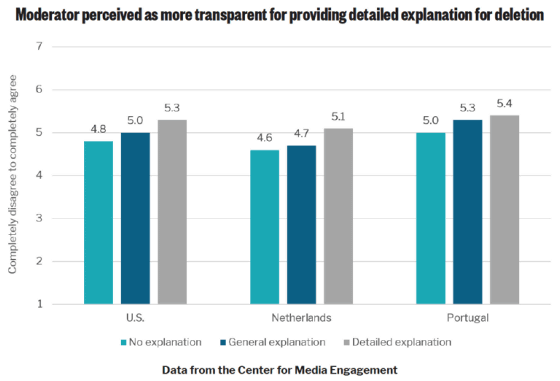

The bar chart below shows how the moderator's post about the deletion affects ratings of the moderator's transparency. Light blue is 'moderator posts that do not explain the reason for deletion', dark blue is 'moderator posts with a general explanation and a link to the community guidelines', and gray is 'moderator posts that explain the specific reason for the deletion in question'. In all three countries, moderators are rated as more transparent when they explain the reason for the deletion.

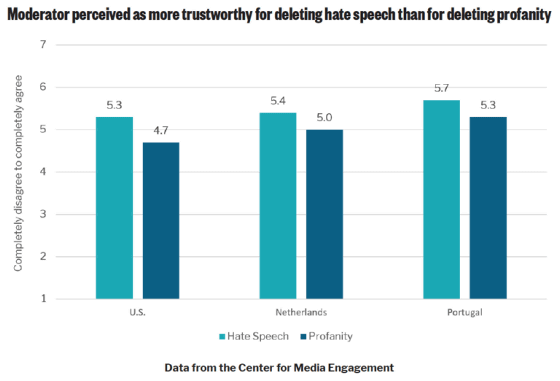

The research team also measured 'the trustworthiness of the moderator who deleted the comment'. Light blue indicates 'hate speech' and dark blue indicates 'comments containing abusive words'. In the United States on the far left and the Netherlands in the center, moderators who deleted hate speech were more likely to be trusted than moderators who deleted comments containing abusive words. Portugal on the far right appears to show the same result as far as the graph goes, but the research team says, 'There was not much difference.'

In addition to these results, the research team also investigated how 'whether the moderator is a human or an AI' affects the perceived fairness and legitimacy of content deletion. In the United States and the Netherlands, the evaluation of the deletion did not change whether the moderator was a human or an AI, but in Portugal, deletions tended to be evaluated as fairer and more legitimate when the moderator was a human rather than an AI.

Based on the results of this survey, the research team summarizes the key points for social media platforms and websites that delete comments as follows.

• Moderators should focus on hate speech, as people believe more strongly that hate speech should be removed than abusive or profane expressions.

• Moderators should explain not only the general reason but also the specific reason why the content was deleted.

• Algorithmic moderators may be perceived in the same way as human moderators, but this may not hold in every country, so cultural background needs to be considered.

in Web Service, Science, Posted by log1h_ik