Deep learning algorithm developed for commodity CPUs trains up to 15 times faster than GPUs

In recent years, AI has come to rely on a learning method called 'deep learning'.

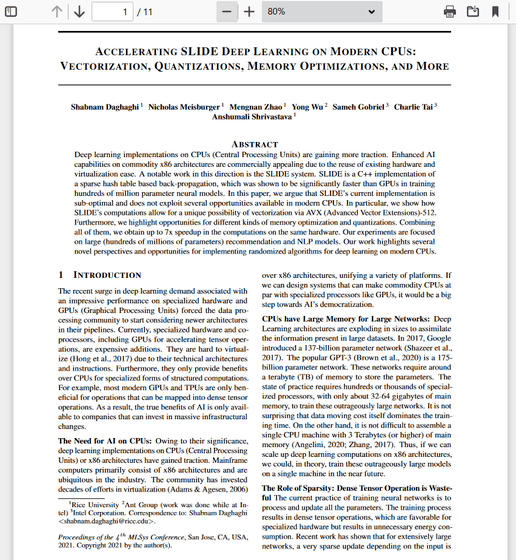

ACCELERATING SLIDE DEEP LEARNING ON MODERN CPUS: VECTORIZATION, QUANTIZATIONS, MEMORY OPTIMIZATIONS, AND MORE

(PDF file) https://proceedings.mlsys.org/paper/2021/file/3636638817772e42b59d74cff571fbb3-Paper.pdf

CPU algorithm trains deep neural nets up to 15 times faster than top GPU trainers

https://techxplore.com/news/2021-04-rice-intel-optimize-ai-commodity.html

Deep learning is a learning method in which the machine automatically discovers the patterns and rules present in data, determines the relevant features on its own, and learns from them. Conventional methods required humans to find those patterns and rules; deep learning can go beyond the limits of 'human recognition and judgment', and it has become a leading technology in fields such as image recognition, translation, and autonomous driving.

At its core, deep learning consists of matrix multiply-accumulate operations. The GPU, originally developed to process graphics, is a processor built to perform matrix multiply-accumulate operations for moving and rotating polygons in three-dimensional graphics. Because GPUs specialize in exactly these operations, in recent years they have also become the processor of choice for deep learning.
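To make the 'multiply-accumulate' idea concrete, here is a minimal pure-Python sketch of one fully connected layer, which computes y = Wx + b one multiply-accumulate step at a time. The function names and the toy numbers are illustrative assumptions; real frameworks hand this work to optimized matrix kernels rather than Python loops.

```python
def dense_forward(x, weights, bias):
    """Compute y = W x + b for one fully connected layer
    using explicit multiply-accumulate (MAC) steps."""
    outputs = []
    for row, b in zip(weights, bias):
        acc = b                  # start the accumulator at the bias
        for w, xi in zip(row, x):
            acc += w * xi        # one multiply-accumulate
        outputs.append(acc)
    return outputs


def relu(values):
    """Standard ReLU activation applied element-wise."""
    return [max(0.0, v) for v in values]


# Toy layer: 3 inputs, 2 outputs -> 2 * 3 = 6 multiply-accumulates.
x = [1.0, 2.0, 3.0]
W = [[0.5, -1.0, 0.25],
     [1.0, 1.0, 1.0]]
b = [0.0, -1.0]
print(relu(dense_forward(x, W, b)))
```

A layer with n inputs and m outputs needs m × n such steps for every input example, which is why dense layers map so naturally onto hardware built for matrix arithmetic.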

However, GPUs have the drawback of being 'costly'. To address this, Rice University computer scientist Anshumali Shrivastava set out to rethink the deep learning algorithm itself, which currently depends on matrix multiply-accumulate operations.

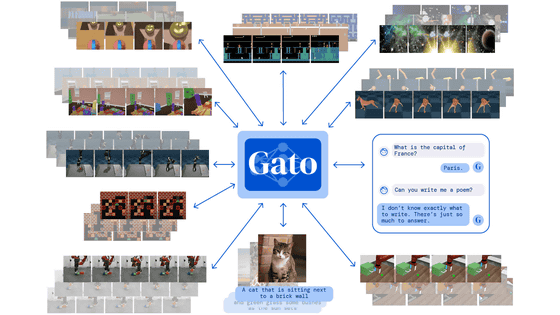

A research team led by Shrivastava developed a training algorithm, the 'Sub-Linear Deep Learning Engine (SLIDE)', that optimizes the computation itself for commodity CPUs by recasting deep learning training as a 'search problem that can be solved with a hash table'. The team showed that SLIDE outperforms GPU-based training.
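The hash-table idea can be illustrated with locality-sensitive hashing (LSH), which SLIDE builds on: each neuron's weight vector is stored in hash tables, and for a given input only the neurons that land in the same buckets as the input are computed, instead of every neuron in the layer. The sketch below is a simplified illustration, not SLIDE's implementation; the hash family (signed random projections, also called SimHash), the table and bit counts, and all names such as `SimHashTable`, `build_tables`, and `sparse_forward` are assumptions.

```python
import random
from collections import defaultdict


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


class SimHashTable:
    """One LSH table using signed random projections (SimHash).
    Vectors separated by a small angle tend to get the same key."""

    def __init__(self, dim, num_bits, rng):
        self.planes = [[rng.gauss(0.0, 1.0) for _ in range(dim)]
                       for _ in range(num_bits)]
        self.buckets = defaultdict(set)

    def key(self, v):
        # One bit per hyperplane: which side of the plane v falls on.
        return tuple(dot(p, v) >= 0 for p in self.planes)

    def insert(self, neuron_id, weight_vec):
        self.buckets[self.key(weight_vec)].add(neuron_id)

    def query(self, v):
        return self.buckets.get(self.key(v), set())


def build_tables(weights, num_tables=4, num_bits=6, seed=0):
    """Hash every neuron's weight vector into several independent tables."""
    rng = random.Random(seed)
    dim = len(weights[0])
    tables = [SimHashTable(dim, num_bits, rng) for _ in range(num_tables)]
    for table in tables:
        for j, w in enumerate(weights):
            table.insert(j, w)
    return tables


def sparse_forward(x, weights, bias, tables):
    """Compute ReLU activations only for neurons retrieved from the
    tables; every other neuron is treated as inactive (output 0)."""
    active = set()
    for table in tables:
        active |= table.query(x)
    out = {j: max(0.0, dot(weights[j], x) + bias[j]) for j in active}
    return out, active


# Usage: a layer of 1000 neurons with 16-dimensional weights.
rng = random.Random(1)
weights = [[rng.gauss(0.0, 1.0) for _ in range(16)] for _ in range(1000)]
bias = [0.0] * len(weights)
tables = build_tables(weights)
x = list(weights[42])  # an input pointing in the same direction as neuron 42
out, active = sparse_forward(x, weights, bias, tables)
print(f"computed {len(active)} of {len(weights)} neurons")
```

Using more bits per key shrinks the buckets so fewer neurons are computed, while using more tables raises the chance that strongly activated neurons are retrieved. A real training system must also update the tables as the weights change; this sketch omits that step.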

The research team noted that the CPU is the most widely used computing hardware and argued that machine learning on CPUs therefore has a cost advantage. 'By using SLIDE, which does not depend on matrix operations, we achieved training 4 to 15 times faster on CPUs than on GPUs,' he said.
