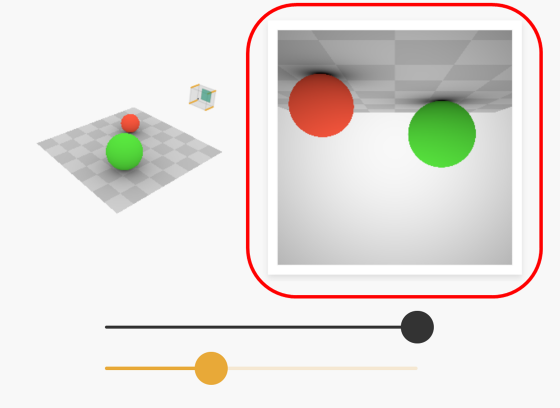

A site that uses 3D models to explain how a camera captures its subject

Cameras have a history of about 200 years, yet their structure and functions are quite complicated. Bartosz Ciechanowski explains in detail how a camera works using interactive 3D models.

Cameras and Lenses – Bartosz Ciechanowski

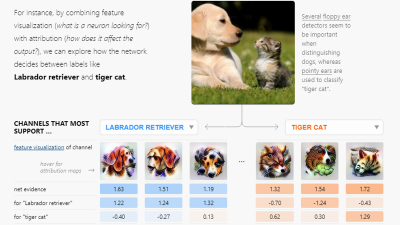

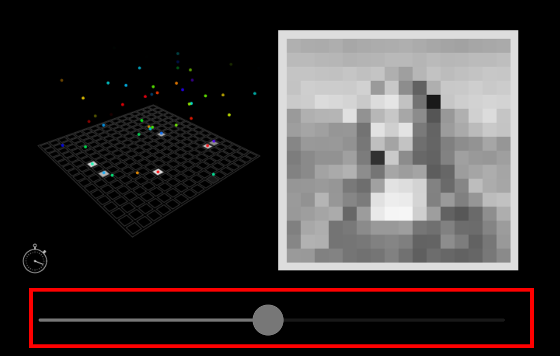

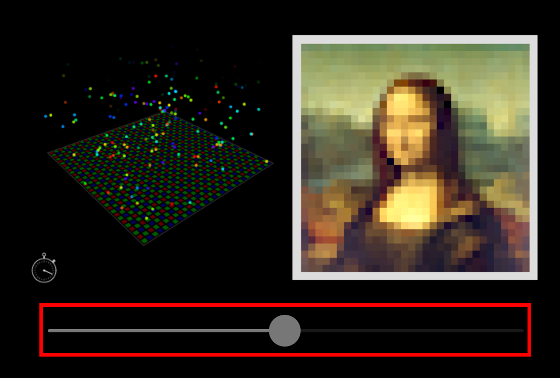

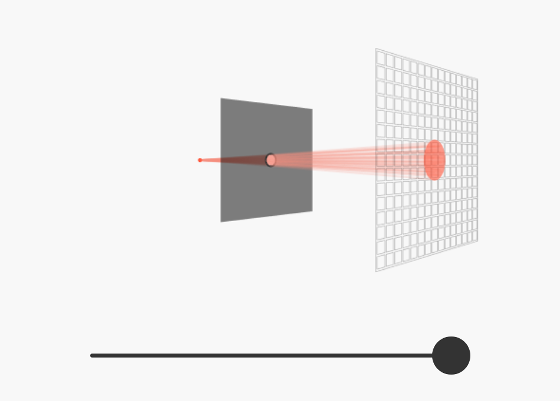

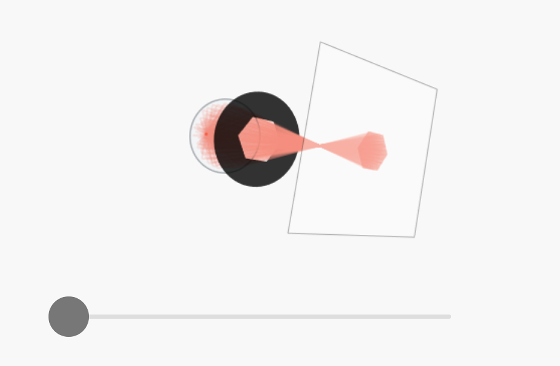

Ciechanowski explains the camera step by step, starting from its basic functions and principles. He begins with light, which photography depends on. A digital camera has an image sensor made up of tiny photodetectors arranged in a grid. Each photodetector converts the photons that hit it into a measurable electric current: the more photons that arrive, the stronger the signal.

You can move the gray bar left and right to advance time and watch photons land on the small photodetectors arranged in the grid. The signal read from each photodetector is converted into the brightness of the corresponding pixel in the image on the right. The longer the photons are collected, the more photons hit each photodetector and the brighter the pixels become. If the collection time is too short, the image is underexposed and too dark; if it is too long, the image is overexposed and too bright.
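The relationship between collection time and pixel brightness can be sketched in a few lines. This is a simplified model, not how the site computes it: it assumes a constant photon rate, a perfectly linear detector, and a hypothetical saturation level (`full_well`) beyond which the pixel is pure white.

```python
def pixel_brightness(photon_rate, exposure_time, full_well=1000, max_value=255):
    """Map the expected number of collected photons to an 8-bit pixel value.

    photon_rate: photons per second hitting one photodetector (assumed constant)
    exposure_time: collection time in seconds
    full_well: hypothetical photon count at which the detector saturates
    """
    photons = photon_rate * exposure_time
    # Clip at saturation: past full_well the pixel cannot get any brighter,
    # which is what overexposure looks like in the image.
    fraction = min(photons, full_well) / full_well
    return round(fraction * max_value)


for t in (0.001, 0.01, 0.1):
    print(t, pixel_brightness(20000, t))
```

With 20,000 photons per second, exposures of 0.001 s, 0.01 s, and 0.1 s give pixel values of 5 (underexposed), 51, and 255 (clipped, overexposed).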

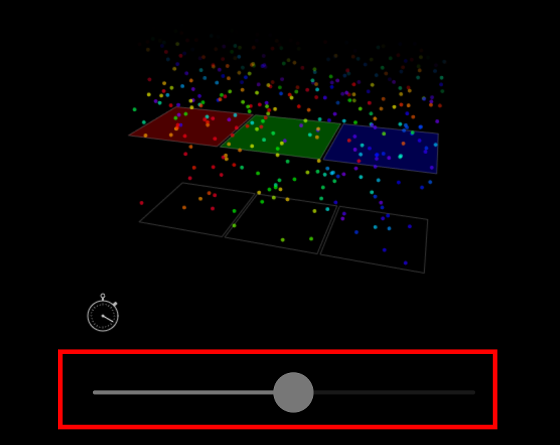

Photons come in a variety of colors (wavelengths), but a photodetector cannot tell them apart; it only measures how many photons arrive. To record color, the photons have to be sorted into separate groups. Placing a small color filter over each photodetector lets it detect only red, green, or blue light. On the site, you can move the bar to see how only light of a certain color passes through each filter.

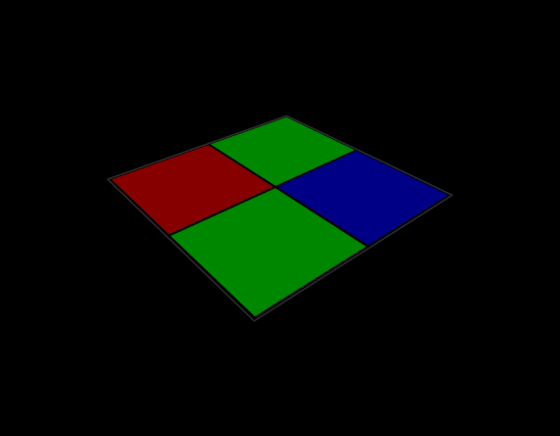

There are several ways to arrange these filters, but the simplest is the Bayer filter.

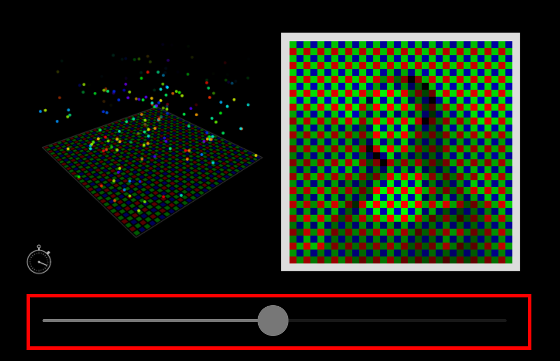

Moving the bar shows how the photons passing through each filter are recorded. With the filters in place, each pixel records only the intensity of a single color, so the raw image is a mosaic of red, green, and blue dots rather than the actual colors of the scene.
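To show what "each pixel records only one color" means, here is a minimal sketch that turns a full-color image into raw sensor values. It assumes an RGGB Bayer layout (red on even rows and even columns, blue on odd rows and odd columns, green elsewhere) and represents images as nested lists of `(R, G, B)` tuples; `bayer_channel` and `mosaic` are illustrative names, not anything from the site.

```python
def bayer_channel(y, x):
    """Return which channel (0 = R, 1 = G, 2 = B) the filter at (y, x) passes.

    Assumes an RGGB layout, so half of all photodetectors see green.
    """
    if y % 2 == 0 and x % 2 == 0:
        return 0
    if y % 2 == 1 and x % 2 == 1:
        return 2
    return 1


def mosaic(rgb):
    """Simulate raw sensor output: each pixel keeps only one color's intensity."""
    return [[pixel[bayer_channel(y, x)] for x, pixel in enumerate(row)]
            for y, row in enumerate(rgb)]


orange = (200, 100, 50)
print(mosaic([[orange, orange], [orange, orange]]))
```

A uniformly orange 2x2 image becomes `[[200, 100], [100, 50]]`: one red, two green, and one blue measurement, with the true color no longer stored at any single pixel.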

The final step in producing a normal image is a process called 'demosaicing.' For each pixel, it fills in the two colors that the pixel's filter blocked by interpolating from neighboring pixels, which reproduces the original colors reasonably well. You can follow the demosaicing process by moving the black bar. The overall brightness of the image still depends on how long photons are collected, a duration called the 'shutter speed (exposure time).'
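A simple way to do this interpolation is to average neighboring pixels that measured the missing color. The sketch below assumes an RGGB Bayer layout and uses a 3x3 neighborhood, which amounts to bilinear demosaicing for interior pixels; real cameras typically use more sophisticated, edge-aware algorithms, and the site's exact method is not stated.

```python
def bayer_channel(y, x):
    """Channel passed by the filter at (y, x): 0 = R, 1 = G, 2 = B (RGGB assumed)."""
    if y % 2 == 0 and x % 2 == 0:
        return 0
    if y % 2 == 1 and x % 2 == 1:
        return 2
    return 1


def demosaic(raw):
    """Bilinear-style demosaicing of an RGGB raw image of at least 2x2 pixels.

    Each pixel keeps the value it measured for its own color; each missing
    color is the average of the pixels in the surrounding 3x3 window that
    measured that color.
    """
    height, width = len(raw), len(raw[0])
    image = []
    for y in range(height):
        row = []
        for x in range(width):
            sums = [0.0, 0.0, 0.0]
            counts = [0, 0, 0]
            # Visit the 3x3 window, clipped at the image borders.
            for ny in range(max(0, y - 1), min(height, y + 2)):
                for nx in range(max(0, x - 1), min(width, x + 2)):
                    c = bayer_channel(ny, nx)
                    sums[c] += raw[ny][nx]
                    counts[c] += 1
            own = bayer_channel(y, x)
            pixel = tuple(
                float(raw[y][x]) if c == own else sums[c] / counts[c]
                for c in range(3)
            )
            row.append(pixel)
        image.append(row)
    return image
```

Any 2x2 block of a Bayer pattern contains all three colors, which is why every pixel in an image of at least 2x2 has a neighbor for each missing channel. A uniform color survives the round trip unchanged, while edges and fine detail are where such simple interpolation produces color artifacts.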

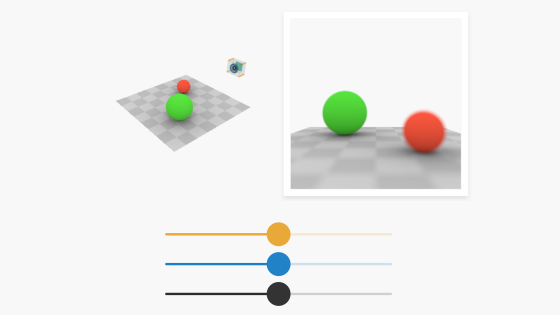

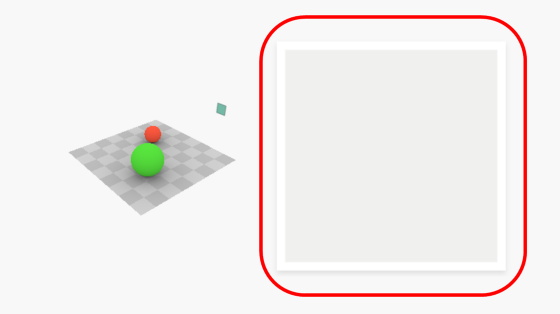

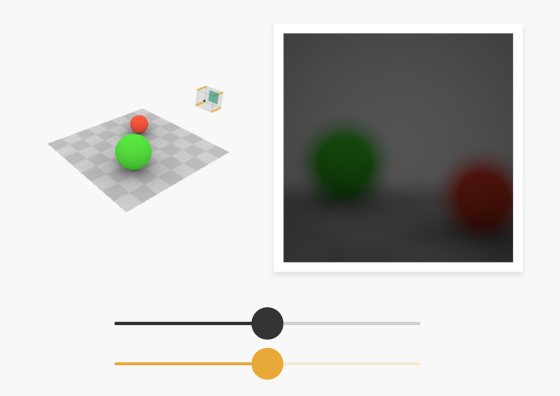

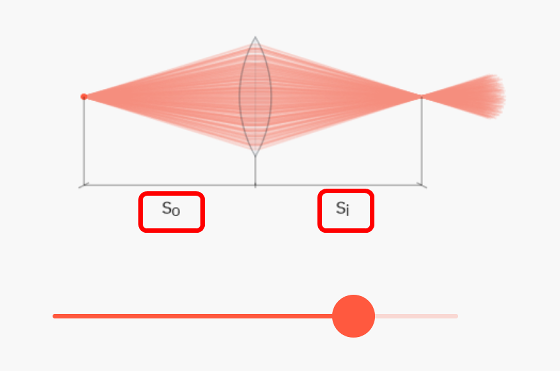

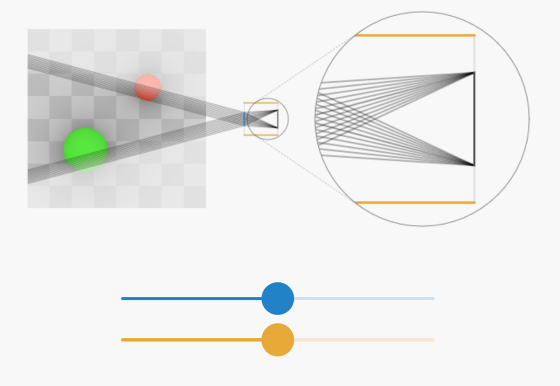

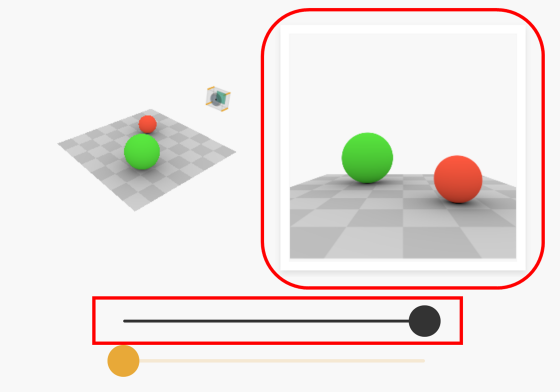

Next, the model is placed in a lit scene. Using the image sensor, color filters, and demosaicing built so far, the camera is aimed at some spheres, but no image of them appears.

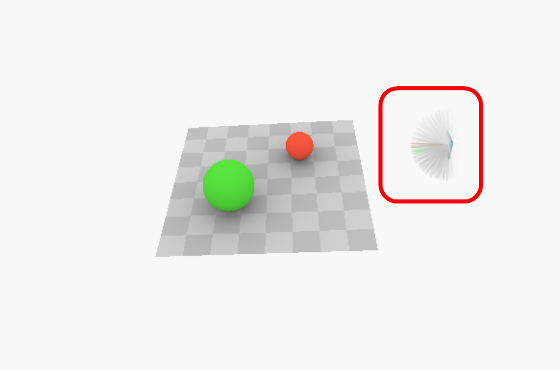

The reason is that each point on the sensor collects photons arriving from every direction. The red sphere reflects red light and the green sphere reflects green light, but as shown below, light from all directions lands on each point of the sensor and mixes together, so nothing recognizable shows up in the image.

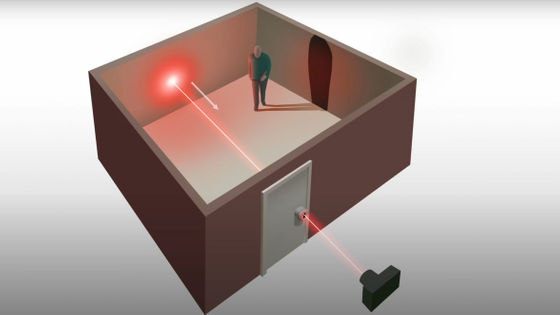

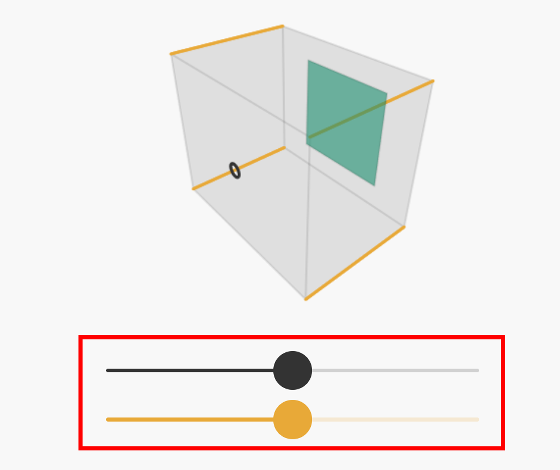

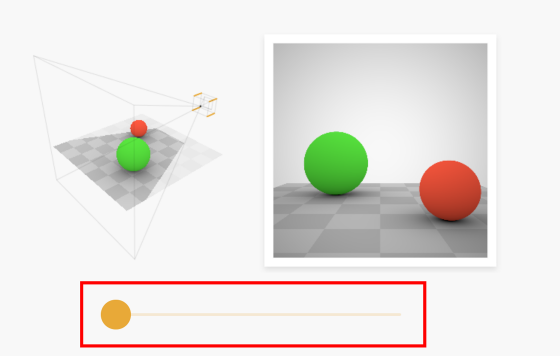

To form a proper image, the set of rays that can reach each point of the sensor has to be restricted. Ciechanowski models a box with the sensor inside and a small hole in the front. The black bar changes the size of the hole, and the orange bar changes the distance from the hole to the sensor. The inside of the box is black, so light does not bounce around inside it, and the sensor sits on the back wall, where the light coming through the hole strikes it. This box is a so-called 'pinhole camera.'

Moving the black and orange bars shows how the spheres appear on the sensor. One thing you will notice is that the image is flipped both upside down and left to right.

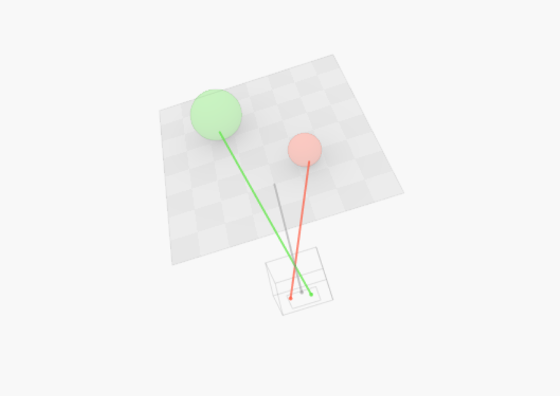

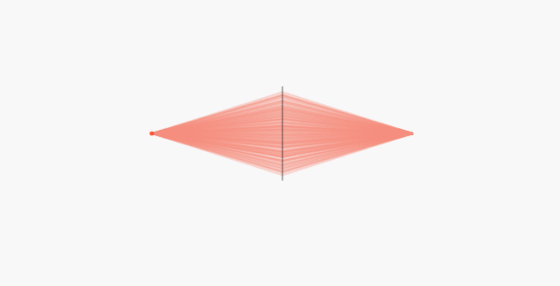

The image is inverted because the light rays cross each other as they pass through the hole.
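The inversion follows from similar triangles. In the minimal sketch below, the hole sits at the origin, the subject is in front of it at positive distance `Z`, and the sensor is a distance `sensor_distance` behind it; the function name and coordinate convention are assumptions chosen for illustration.

```python
def pinhole_project(point, sensor_distance):
    """Project a 3D point through a pinhole at the origin onto the sensor plane.

    point: (X, Y, Z), where Z > 0 is the distance in front of the hole
    sensor_distance: distance from the hole to the sensor behind it
    Returns the (x, y) position where the ray lands on the sensor.
    """
    X, Y, Z = point
    if Z <= 0:
        raise ValueError("point must be in front of the hole")
    # The ray travels in a straight line through the hole, so by similar
    # triangles it lands at -d*X/Z, -d*Y/Z: both coordinates change sign.
    return (-sensor_distance * X / Z, -sensor_distance * Y / Z)


print(pinhole_project((1.0, 2.0, 10.0), 5.0))
```

A point up and to the right of the axis, `(1.0, 2.0, 10.0)`, lands at `(-0.5, -1.0)`: down and to the left, which is exactly the upside-down, left-right flip seen on the site.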

Moving the orange bar changes the distance from the hole to the sensor, and you can confirm that the captured range of the scene changes with it.

The light still passes through the same hole, but the distance to the sensor determines which rays land on it: the farther the sensor is from the hole, the narrower the range of the scene that is captured. A top view of the model is also available, so you can see how the range of light reaching the sensor changes.
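The captured range can be expressed as an angle of view. A minimal sketch, assuming a flat sensor of width `sensor_width` centered behind the hole (both parameter names are illustrative): the rays that just reach the sensor's edges pass through the hole at an angle of `atan((sensor_width / 2) / sensor_distance)` from the axis.

```python
import math


def field_of_view_deg(sensor_width, sensor_distance):
    """Horizontal angle of view of a pinhole camera, in degrees.

    sensor_width and sensor_distance must use the same unit (e.g. mm).
    """
    half_angle = math.atan((sensor_width / 2) / sensor_distance)
    return math.degrees(2 * half_angle)


for d in (18, 36, 72):
    print(d, round(field_of_view_deg(36, d), 1))
```

For a 36 mm wide sensor, moving it from 18 mm to 36 mm behind the hole shrinks the angle of view from about 90 degrees to about 53 degrees.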

You can also see that the larger the hole, the larger the patch of the sensor that light from each point of the scene spreads over, which makes the image blurrier.
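The size of that patch follows from similar triangles: rays from a point at distance `subject_distance` in front of the hole fan out through the whole opening, so at the sensor the spot is wider than the hole by a factor of `(subject_distance + sensor_distance) / subject_distance`. This sketch uses geometric optics only and ignores diffraction, which matters for very small holes; the names are illustrative.

```python
def pinhole_blur_diameter(hole_diameter, subject_distance, sensor_distance):
    """Diameter of the spot that a single point of light makes on the sensor.

    Geometric optics only (diffraction is ignored). All lengths share one unit.
    """
    if subject_distance <= 0:
        raise ValueError("subject must be in front of the hole")
    return hole_diameter * (subject_distance + sensor_distance) / subject_distance


print(pinhole_blur_diameter(0.5, 1000, 50))
print(pinhole_blur_diameter(1.0, 1000, 50))
```

With the subject 1000 mm away and the sensor 50 mm behind the hole, a 0.5 mm hole spreads each point over about 0.525 mm; doubling the hole doubles the spot and therefore the blur.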

However, when the adjustable hole is combined with the sensor camera built earlier, a small hole lets far fewer photons reach the sensor, so the image becomes dark, as shown below. The image can be brightened by raising the sensitivity of the image sensor (ISO sensitivity).
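How much darker the image gets can be estimated from the area of the hole: the amount of light passing through scales with the area, which is proportional to the diameter squared. The sketch below assumes every other factor stays fixed; the function names are hypothetical.

```python
def relative_light(hole_diameter, reference_diameter):
    """Light through a hole relative to a reference hole.

    Area is proportional to diameter squared, so the ratio of areas is the
    squared ratio of diameters.
    """
    return (hole_diameter / reference_diameter) ** 2


def compensating_gain(hole_diameter, reference_diameter):
    """Factor by which exposure time or sensor gain must rise to keep brightness."""
    return 1 / relative_light(hole_diameter, reference_diameter)


print(relative_light(0.5, 1.0), compensating_gain(0.5, 1.0))
```

Halving the hole's diameter lets through a quarter of the light (`0.25`), so the exposure time or sensitivity has to be raised by a factor of 4 to get the same brightness.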

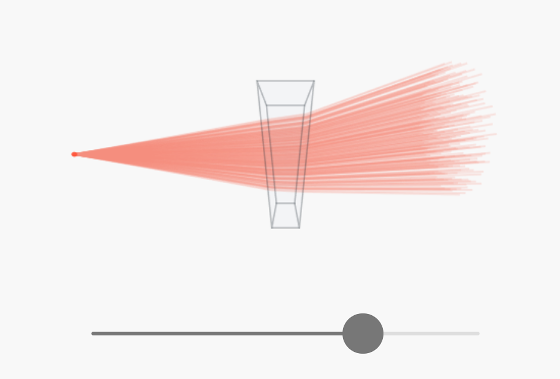

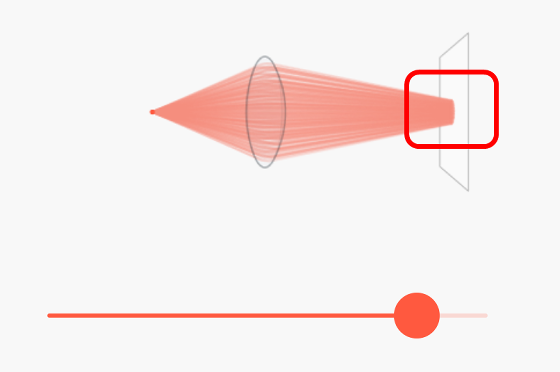

There is another way to solve both the blurring and the brightness problems: place the sensor at the point where the light rays passing through the hole converge, called the focal point.

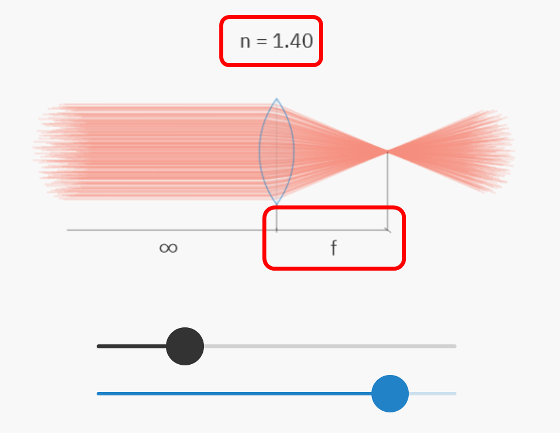

If you place a piece of glass with straight, angled sides in the hole and pass a light beam through it, the beam bends (refracts) as shown. To gather the rays in one place, pieces that bend light upward must be combined with pieces that bend it downward.
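The bending at each glass surface is described by Snell's law, n1·sin(θ1) = n2·sin(θ2), with angles measured from the surface normal. This minimal sketch assumes air (n = 1.0) and a typical glass (n = 1.5) as defaults; those values are illustrative rather than taken from the site.

```python
import math


def refraction_angle_deg(incidence_deg, n1=1.0, n2=1.5):
    """Angle of the refracted ray from the surface normal, via Snell's law.

    n1, n2: refractive indices on the incoming and outgoing sides
    (defaults assume air into typical glass). Returns None when the ray
    cannot leave the denser medium (total internal reflection).
    """
    s = n1 * math.sin(math.radians(incidence_deg)) / n2
    if abs(s) > 1:
        return None
    return math.degrees(math.asin(s))


print(round(refraction_angle_deg(30), 2))
print(refraction_angle_deg(60, n1=1.5, n2=1.0))
```

A ray hitting glass at 30 degrees continues at about 19.47 degrees, bent toward the normal; going the other way at 60 degrees from glass into air, no refracted ray exists and the function returns `None`.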

Combining several pieces of glass brings the refracted rays closer to a single point. The more pieces are used, approaching infinitely many, the more accurately the rays converge, but the result is still not perfect.

By shaping the glass into a smooth convex lens, the light beam is finally concentrated at a single point.

The focal length (f) depends on the lens's refractive index (n) and its shape. The lower the refractive index and the flatter (less curved) the lens, the longer the focal length; the higher the index and the more strongly curved the lens, the shorter the focal length.
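For a thin lens in air, this dependence is captured by the lensmaker's equation, 1/f = (n − 1)(1/R1 − 1/R2), where R1 and R2 are the radii of curvature of the two surfaces. The sketch below assumes the common sign convention in which a biconvex lens has R1 > 0 and R2 < 0; the article itself does not give a formula.

```python
def thin_lens_focal_length(n, r1, r2):
    """Lensmaker's equation for a thin lens in air.

    n: refractive index of the glass
    r1, r2: radii of curvature of the front and back surfaces (assumed sign
    convention: positive when the center of curvature is behind the lens,
    so a biconvex lens has r1 > 0 and r2 < 0)
    """
    return 1 / ((n - 1) * (1 / r1 - 1 / r2))


print(thin_lens_focal_length(1.5, 100, -100))
print(thin_lens_focal_length(1.8, 100, -100))
print(thin_lens_focal_length(1.5, 200, -200))
```

Starting from a symmetric lens with n = 1.5 and 100 mm radii (f ≈ 100 mm), raising the index to 1.8 shortens f to about 62.5 mm, while flattening the surfaces to 200 mm radii lengthens it to about 200 mm.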

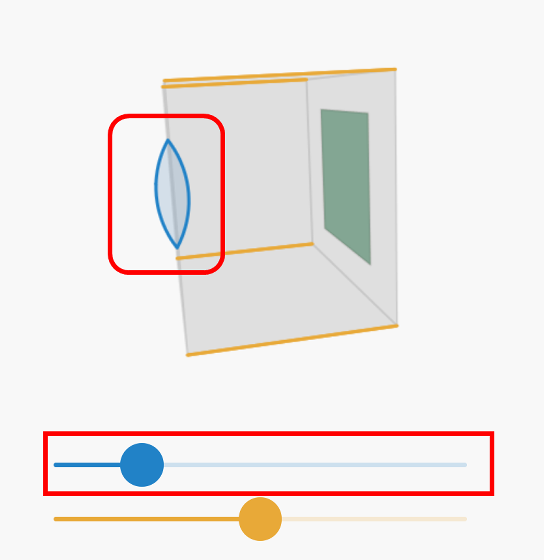

Ciechanowski then attaches the lens to the box model. In this and later models, the blue bar lets you change the focal length itself.

By adjusting the bars for the focal length and the lens-to-sensor distance, you can see how the image comes into focus. As with the pinhole camera, the image actually forms upside down, but here it is displayed corrected.
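Where the sensor must sit for a sharp image is given by the thin-lens equation, 1/f = 1/s_o + 1/s_i, with s_o the subject distance and s_i the lens-to-sensor distance. This is an idealized thin-lens sketch (real camera lenses are thick, multi-element systems), and the function name is illustrative.

```python
def image_distance(focal_length, subject_distance):
    """Lens-to-sensor distance that brings a subject into focus (thin lens).

    Solves 1/f = 1/subject_distance + 1/image_distance. The subject must be
    farther than one focal length away for a real image to form.
    """
    if subject_distance <= focal_length:
        raise ValueError("subject must be beyond the focal length")
    return focal_length * subject_distance / (subject_distance - focal_length)


for s in (1000, 5000, 100000):
    print(s, round(image_distance(50, s), 3))
```

With a 50 mm lens, a subject 1 m away needs the sensor about 52.6 mm behind the lens, while a subject 5 m away needs about 50.5 mm. As the subject recedes, the required distance approaches the focal length, which is why the focus has to be readjusted whenever the subject distance changes.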

Even with an adjustable focal length and lens-to-sensor distance, the image is only in focus for one particular subject distance, so whenever the camera or the subject moves, the focus has to be adjusted again to keep the subject sharp. Unlike the lens in the eye of a living creature, a glass lens cannot change its shape, so cameras instead use a combination of several lenses.

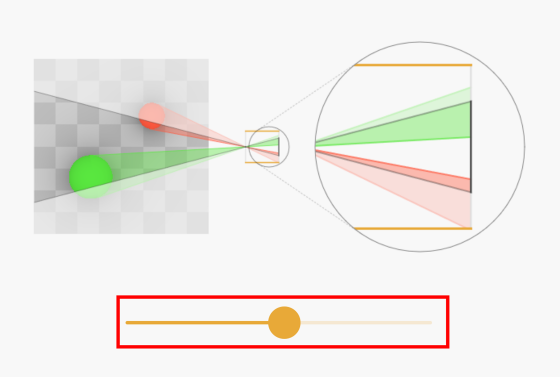

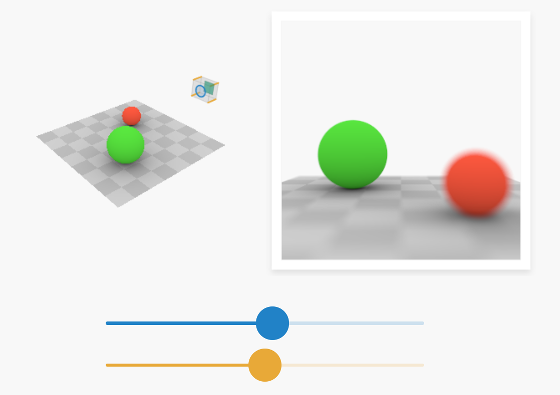

When the subject moves closer to the lens, its light no longer converges on the sensor and instead spreads out into a circle. This circle is called the 'circle of confusion.' In practice, nothing can be brought into perfect focus on the sensor, but if the circle of confusion is small enough, the human eye perceives the image as being in focus.
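The size of the circle of confusion can be estimated with the thin-lens model: light from a point enters across the whole aperture and converges to a cone whose tip lies at the image distance v, so at a sensor placed elsewhere the cone has width aperture · |sensor_distance − v| / v. This is an idealized sketch with illustrative names, not the site's code.

```python
def blur_circle_diameter(aperture_diameter, focal_length, subject_distance,
                         sensor_distance):
    """Diameter of the circle of confusion on the sensor for a point subject.

    Thin-lens model: the point is focused at image distance v; the cone of
    light is aperture_diameter wide at the lens and shrinks to zero at v.
    All lengths share one unit (e.g. mm).
    """
    if subject_distance <= focal_length:
        raise ValueError("subject must be beyond the focal length")
    v = focal_length * subject_distance / (subject_distance - focal_length)
    return aperture_diameter * abs(sensor_distance - v) / v


# Sensor positioned to focus a subject 2000 mm away with a 50 mm lens.
sensor = 50 * 2000 / (2000 - 50)
print(blur_circle_diameter(25, 50, 2000, sensor))
print(blur_circle_diameter(25, 50, 1000, sensor))
```

A subject at the focused distance produces no blur, while one at 1 m produces a circle of about 0.64 mm with a 25 mm opening. Because the result is proportional to the aperture diameter, halving the opening halves the circle, which is the lever the aperture provides.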

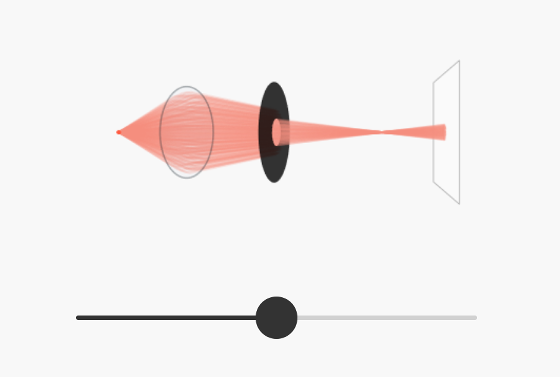

To control the size of the circle of confusion, the angle of the cone of light reaching the sensor has to be narrowed, which is done with an aperture.

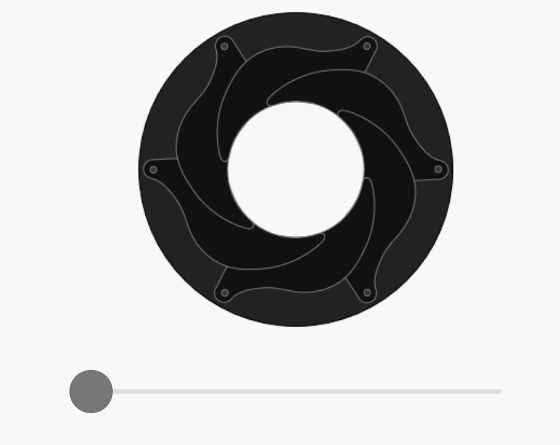

In an actual camera, the aperture is made of several overlapping blades.

Because the opening formed by these blades is polygonal, the blur in photographs, such as out-of-focus highlights, often takes a polygonal shape as well.

The smaller the aperture opening (the larger the f-number), the wider the range of distances that appears sharp in the captured image. This in-focus range is called the 'depth of field.'
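The f-number (aperture value) is the focal length divided by the diameter of the opening, N = f / D, which is why a smaller opening means a larger number. The light reaching the sensor scales with the opening's area, so it goes as 1/N². A minimal sketch with illustrative function names:

```python
def f_number(focal_length, aperture_diameter):
    """f-number N = focal length / diameter of the aperture opening."""
    return focal_length / aperture_diameter


def relative_exposure(n, reference_n):
    """Light reaching the sensor at f-number n relative to reference_n (~ 1/N^2)."""
    return (reference_n / n) ** 2


print(f_number(50, 25))
print(relative_exposure(4.0, 2.0))
```

A 50 mm lens with a 25 mm opening is at f/2; stopping down to f/4 halves the opening's diameter and lets in a quarter of the light (`0.25`), in exchange for a deeper depth of field.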

Moving the black (opening size), blue (focal length), and red (subject distance from the camera) bars shows how the depth of field, the region between the two blue dots in the 3D model, changes. The smaller the opening and the shorter the focal length, the deeper the depth of field; the larger the opening and the longer the focal length, the shallower it becomes. If the red dot marking the subject's position lies within the depth of field, the subject appears in focus.
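These trends can be checked with the standard thin-lens depth-of-field formulas based on the hyperfocal distance H = f² / (N·c) + f, where c is the largest circle of confusion still perceived as sharp. The sketch assumes c = 0.03 mm, a value commonly quoted for a 36x24 mm sensor; the site may use different parameters.

```python
import math


def depth_of_field(focal_length, f_number, subject_distance, coc=0.03):
    """Near and far limits of acceptable sharpness (all lengths in mm).

    coc: largest acceptable circle of confusion on the sensor; 0.03 mm is an
    assumed, commonly quoted value for a full-frame (36x24 mm) sensor.
    """
    f, n, s = focal_length, f_number, subject_distance
    hyperfocal = f * f / (n * coc) + f
    near = s * (hyperfocal - f) / (hyperfocal + s - 2 * f)
    # At or beyond the hyperfocal distance, everything out to infinity is sharp.
    far = math.inf if s >= hyperfocal else s * (hyperfocal - f) / (hyperfocal - s)
    return near, far


for f, n in ((50, 2.8), (50, 8), (85, 2.8)):
    near, far = depth_of_field(f, n, 3000)
    print(f, n, round(near), round(far), round(far - near))
```

For a subject 3 m away, a 50 mm lens at f/2.8 keeps roughly 2.7 m to 3.3 m sharp. Stopping down to f/8 widens that range, while switching to an 85 mm lens at the same f/2.8 narrows it, matching the behavior of the bars on the site.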

So far the explanation has assumed an ideal lens, but real lenses introduce blur and distortion when turning the subject into an image. There are many kinds of these defects, and the five that affect monochromatic light are known as the Seidel aberrations. 'Taking a photo looks like the simple act of pressing the shutter button on a smartphone or digital camera, but in reality it is made possible by meticulously guided rays of light and precision equipment,' says Ciechanowski.


in Hardware, Posted by log1p_kr