AI 'Motion2Vec' learns surgical suturing by watching videos of surgery and actually masters it

The development of medical robots has progressed at a remarkable speed in recent years, and in 2017, ``

Motion2Vec

https://sites.google.com/view/motion2vec

Intel, Google, UC Berekely AI team trains robot to do sutures

https://techxplore.com/news/2020-06-intel-google-uc-berekely-ai.html

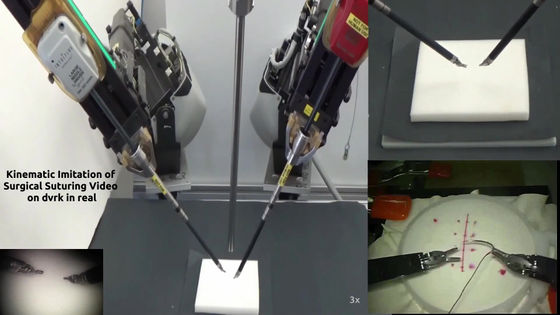

A research team led by AI researcher Ajay Tanwani of the University of California, Berkeley, with participation from Google Brain and Intel AI Labs, has succeeded in developing 'Motion2Vec', an AI that can operate the surgical robot 'Da Vinci' with accuracy close to that of a human surgeon.

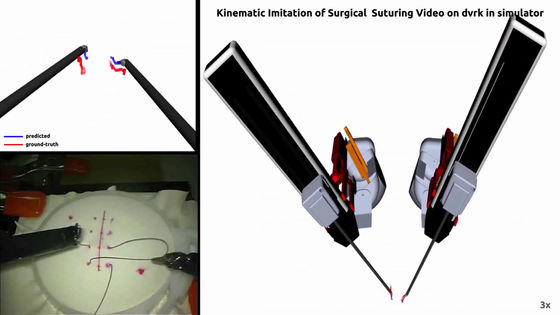

You can see how 'Motion2Vec' actually moves the robot arm in the following video.

Motion2Vec: semi-supervised representation learning from surgical videos-YouTube

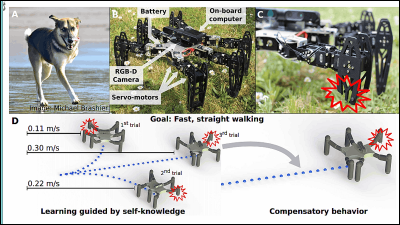

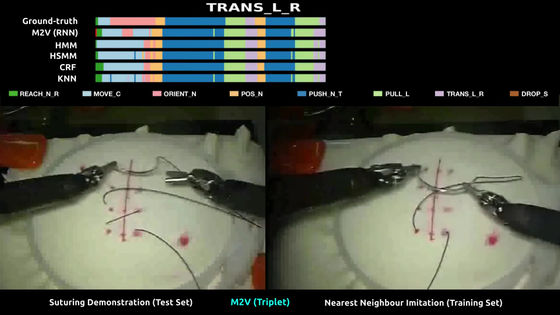

The learning method adopted by 'Motion2Vec', called a 'Siamese network', is a neural network that learns by extracting the similarities and differences between two images. The research team trained Motion2Vec on videos of suturing performed with Da Vinci by eight surgeons of different skill levels. The videos are recorded in JIGSAWS, a dataset published for research on surgical operations.
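The idea of learning from similar and dissimilar pairs can be illustrated with a small sketch. This is not the research team's implementation: it assumes a plain contrastive margin loss and a tiny linear embedding, and the names `embed`, `contrastive_loss`, `weights`, and the toy frame vectors are all hypothetical. What it does show is the defining trait of a Siamese setup, namely that both inputs pass through the same shared weights before their distance is compared.

```python
import math

def embed(x, weights):
    """Shared embedding: the same linear map is applied to every input."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

def distance(a, b):
    """Euclidean distance between two embedded vectors."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))

def contrastive_loss(x1, x2, same_action, weights, margin=1.0):
    """Pull similar pairs together; push dissimilar pairs at least `margin` apart."""
    d = distance(embed(x1, weights), embed(x2, weights))
    if same_action:
        return d ** 2
    return max(0.0, margin - d) ** 2

# Toy, made-up feature vectors standing in for video frames (assumption).
weights = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # maps 3-D input to 2-D embedding
frame_a = [0.1, 0.2, 0.9]
frame_b = [0.1, 0.3, 0.1]
frame_c = [2.0, 2.0, 0.5]

loss_similar = contrastive_loss(frame_a, frame_b, True, weights)
loss_dissimilar = contrastive_loss(frame_a, frame_c, False, weights)
loss_too_close = contrastive_loss(frame_a, frame_b, False, weights)
```

In this sketch a pair labeled as the same action is penalized for being far apart, while a pair labeled as different is penalized only when it sits closer than the margin. Training would adjust `weights` to reduce these losses over many pairs.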

After learning the movements of the robot arm from the videos, Motion2Vec works out how to move the robot arm so as to imitate those movements.

When 'Motion2Vec' actually operated Da Vinci, it achieved an accuracy of at least 85.5% with an average error of 0.94 cm, outperforming previous AI. In addition, only 78 videos were needed for this training.
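To make these two kinds of figures concrete, here is a minimal sketch of how an accuracy rate and an average position error can be computed. It assumes that accuracy means the fraction of video frames whose predicted action label matches the true label, and that error means the Euclidean distance between predicted and true positions in centimeters. The article does not spell out either definition, and the labels and coordinates below are invented toy values, not results from the study.

```python
import math

def label_accuracy(predicted, actual):
    """Fraction of frames whose predicted label equals the true label."""
    matches = sum(p == a for p, a in zip(predicted, actual))
    return matches / len(actual)

def mean_position_error(predicted, actual):
    """Average Euclidean distance between predicted and true positions."""
    errors = [math.dist(p, a) for p, a in zip(predicted, actual)]
    return sum(errors) / len(errors)

# Invented per-frame action labels (hypothetical names).
predicted_labels = ["reach", "insert", "insert", "pull"]
actual_labels = ["reach", "insert", "pull", "pull"]

# Invented 3-D positions in centimeters.
predicted_positions = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
actual_positions = [(0.0, 0.0, 1.0), (1.0, 2.0, 0.0)]

accuracy = label_accuracy(predicted_labels, actual_labels)
mean_error_cm = mean_position_error(predicted_positions, actual_positions)
```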

Ken Goldberg, co-author of the paper, explains the significance of this research: 'YouTube, for example, is a wonderful database that gains 500 hours of video every minute. Most humans can understand the meaning of a video just by watching it, but a robot can only perceive the video as a sequence of changing pixels. So the aim of this work was to make it possible for a robot to watch and analyze video and see it as something meaningful.'

He added, 'We can't replace the surgeon with a robot yet. The immediate goal is that when a surgeon using the system says "this needs to be sutured here", the robot understands and carries it out, so that surgeons can focus on more complex and delicate parts of the surgery.'
