AI expert thoroughly rebuts the optimistic view that "it is still too early to worry about runaway AI"


Artificial intelligence (AI) has made remarkable progress in recent years. Some warn that it is a threat "more dangerous than nuclear weapons," while optimists counter that "the threat theory is irresponsible." Stuart Russell, a professor of computer science at the University of California, Berkeley, and a leading figure in AI research, argues in his book "Human Compatible: Artificial Intelligence and the Problem of Control" that it is by no means too early to worry, and he thoroughly rebuts the optimists.

Many Experts Say We Shouldn't Worry About Superintelligent AI. They're Wrong - IEEE Spectrum

https://spectrum.ieee.org/computing/software/many-experts-say-we-shouldnt-worry-about-superintelligent-ai-theyre-wrong

◆ Arguing over whether AI is smarter than humans is meaningless

Some optimists argue that AI surpassing human intelligence poses no threat, pointing out that calculators have superhuman computational ability yet are no threat at all. Russell dismisses such opinions as "not even worth debating." He notes that chimpanzees have better short-term memory than humans, yet it would be meaningless to conclude from this that chimpanzees are smarter than humans.

Russell says that AI "is a sufficient threat even if it is not a superintelligence that exceeds humans in every respect," because it is extremely difficult to stop an AI once it has gone out of control. In fact, DeepMind, the British AI company that developed the Go-playing AI AlphaGo, has previously published the basic principles of an "emergency stop button" for halting runaway AI. According to that work, Q-learning can be interrupted, but it is unclear whether Sarsa, another reinforcement learning method, can be interrupted at any time.

The basic principles of the "emergency stop button" for halting runaway AI proposed by DeepMind are explained in detail in the following article.

DeepMind, maker of Google's artificial intelligence "AlphaGo," develops an "emergency stop button" mechanism to halt runaway AI - GIGAZINE

by włodi

◆ "It's too early" is not a valid argument

Andrew Ng, who once led deep learning research at the major Chinese IT company Baidu, has said that "fearing killer robots is like worrying about overpopulation on Mars."

Certainly, if humanity succeeds in colonizing Mars, as Elon Musk plans, Mars is likely to face population problems just as Earth does. As of 2019, however, humanity has not even reached Mars, and worrying about Martian overpopulation in that situation does seem premature.

Russell, however, asks: "If we knew that an asteroid would collide with the Earth in 2069, should we wait until 2068 to start working on countermeasures?" He points out that devising countermeasures only after the threat of AI has become apparent is like twisting a rope after the thief has already been caught, that is, preparing far too late.

Russell is particularly concerned that "there is a great deal of research on developing AI, but little debate about what happens when highly advanced AI actually appears." He describes this situation as being "like starting to look for something to eat on Mars only after migrating there," and argues that the impact of advanced AI on humanity needs to be discussed now.

by Stefan Keller

◆ Researchers are too distracted by the benefits of AI

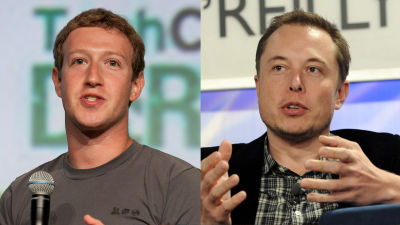

Elon Musk and Facebook CEO Mark Zuckerberg have previously had a heated debate over the AI threat theory.

Elon Musk hits back at Mark Zuckerberg's claim that "the AI threat theory is irresponsible" - GIGAZINE

by JD Lasica

At the time, Zuckerberg argued from an optimistic standpoint that "objecting to AI is the same as objecting to safer cars that do not cause traffic accidents and to methods that can diagnose illness accurately." He had in mind self-driving cars and AI-based diagnostic technology. If these technologies advance, people's lives will be enriched.

Russell, however, criticizes Zuckerberg's argument as "completely backwards" for two reasons. The first is that AI research is being pursued precisely because it has benefits. If AI offered no benefits, no one would develop AI advanced enough to threaten humanity in the first place, so the claim that "AI is not a threat because it has benefits" misses the point. The second is that if the risks are not properly avoided, there will be no benefits. If AI is developed haphazardly in pursuit of its benefits and ends up harming society, the whole effort defeats its own purpose.

Having rebutted the optimists in this way, Russell said that "the risks of AI are not small, but neither are they insurmountable," and called on AI researchers who disregard those risks to engage in a more constructive discussion.


in Science, Posted by log1l_ks