The claim that 'machine learning has a high hurdle' is a hype

By fdecomite

Machine learning using deep learning using a neural network is often perceived as requiring a long time using a very high performance processing device in large quantities, so somehow unless it is a very specialist It tends to think that it is high. However, in fact there is not such a thing, arguing arguing that it is a sort of hype that makes me feel "somehow hurdles are high".

Google's AutoML: Cutting Through the Hype · fast.ai

http://www.fast.ai/2018/07/23/auto-ml-3/

This assertion is published on the entry of the blog " fast.ai ", a site aimed at spreading the power of deep learning widely. As mentioned at the beginning, there is a vague myth that machine learning has "somehow high level", "a lot of high-performance equipment is needed", "it takes time", and Google In order to respond to the needs of users, we announced a policy to lower the hurdle of machine learning by releasing " AutoML ", which requires little knowledge of coding, to the public in the early 2020s.

But fast.ai claims that "hype" exists here. Google also suggests that there is a strategy to endorse the use of cloud services provided by the company by giving such images to people and to have a strategy of making future pillars of revenue.

It was in 2017 that AutoML attracted attention. As it is to say, AutoML which makes it possible to "build up a neural network" is attracting attention as a means of lowering the machine learning hurd, and Google's Thunder Pichai CEO "Making AI work for everyone" (AI is all In order to contribute to people) "in the blog entry entitled" Neural network can design neural network, we have launched AutoML as an approach to that ".

Making AI work for everyone

https://blog.google/technology/ai/making-ai-work-for-everyone/

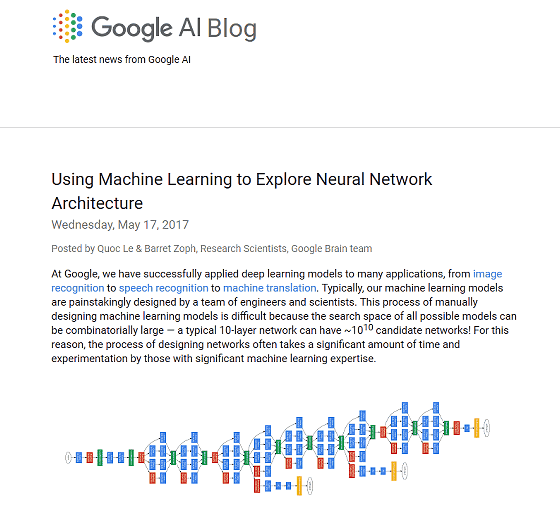

Also, Google's AI researchers also said in the following blog entry: "In our approach called" AutoML ", a neural network to be a controller can train and evaluate the quality of a specific task" child You can propose a model architecture that will be "," is written.

Google AI Blog: Using Machine Learning to Explore Neural Network Architecture

https://ai.googleblog.com/2017/05/using-machine-learning-to-explore.html

Two key core technologies called "Transfer Learning" and "Neural Architecture Search" are important elements in AutoML. Transfer learning, often referred to as "metastasis learning" in Japanese, is a technique to adapt a model learned in a domain (= domain) to another domain. Since it is possible to divert content already learned in another domain to another domain, there is the merit that you can save resources and time for new learning.

Transfer learning: Invitation to next frontier of machine learning

https://qiita.com/icoxfog417/items/48cbf087dd22f1f8c6f4

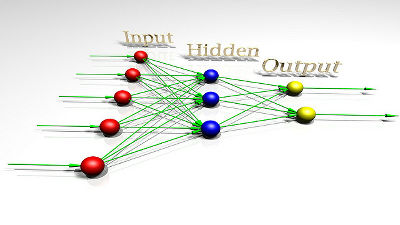

One Neural Architecture Search (NAS) is a technology that optimizes the architecture itself of a neural network. In the conventional neural network, human beings design the structure of the neural network in advance and optimize the weight of the network, but NAS optimizes the weight itself by optimizing the neural network structure itself and parameters.

Explain the theory of AutoML, Neural Architecture Search.

https://qiita.com/cvusk/items/536862d57107b9c190e2

Transfer learning and NAS are technologies that approach one thing in the opposite direction. Transfer learning is "to generalize similar types of problems". For example, as shown in the image below, this includes a case where elements such as "corner" "circle" "dog face" "wheel" are included in various images. NAS, on the other hand, is based on the idea that "All data sets have unique and highly specialized architectures that deliver the best performance."

In conducting machine learning, it is not always necessary to implement NAS for all domains, and if there is a field where learning has already been done, it is possible to utilize the asset by metastatic learning is. However, it is barely touched on the fact that Google is screaming loudly "Machine learning requires a high-performance computer" and that media that sells as it is can compress necessary resources and time by metastasizing learning Fast.ai points out that it is not.

Although it is a field of machine learning which hurdle will be high by all means hurdle is brought, it brings big effect by using it well, better neural network training becomes possible. Some of the examples are as follows.

· Dropout : a method to prevent excessive learning and to optimize neural networks with deep hierarchy with high accuracy

· Batch normalization : a method to increase the learning speed by stabilizing the learning process of the network as a whole

· Rectified Linear Unit (ReLU): a method to prevent the neural network from expanding to a larger size at more levels

Related Posts:

in Software, Posted by darkhorse_log