Google's AI reads lips more accurately than human experts

By Gage Skidmore

Lip reading, the skill of following a conversation by watching the movement of the speaker's lips, originated as a technique for hearing-impaired people, but in recent years works of fiction have often depicted it as a "spy technique for reading the conversations of people who are far away." In fact, reports based on lip reading are often a topic in the football world, and recently players are frequently seen covering their mouths while they talk so that their lips cannot be read. In Japan, too, segments that use lip reading have appeared on programs such as TV Asahi's "Gon Zhongshan & Zakiyama's Kirito TV." Meanwhile, an artificial intelligence (AI) developed by Google has taken on lip reading and demonstrated higher accuracy than human experts.

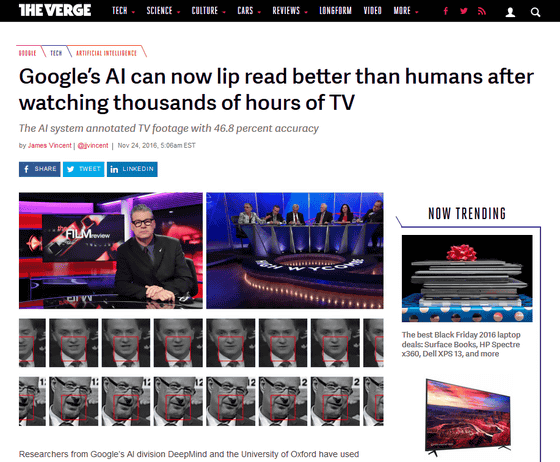

Google's AI can now lip read better than humans after watching thousands of hours of TV - The Verge

http://www.theverge.com/2016/11/24/13740798/google-deepmind-ai-lip-reading-tv

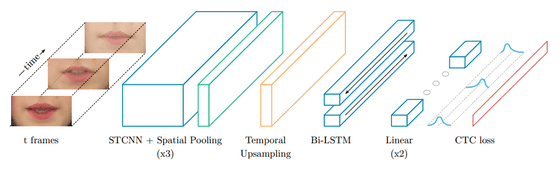

Researchers at DeepMind, Google's AI development division, and Oxford University have used AI to develop "Watch, Listen, Attend, and Spell," the most accurate lip-reading software so far. During development, the AI's neural network learned lip reading from thousands of hours of BBC television broadcasts, and the finished software can correctly read 46.8% of what was actually said. Taken on its own, the figure "46.8%" may not make the software seem groundbreaking, but when a professional lip reader transcribed the same footage the AI had read, only 12.4% of the words were picked up correctly, which shows how accurate the AI is.

Another research group at Oxford University has announced lip-reading software called "LipNet." LipNet achieved an impressive accuracy of 93.4% at the test stage, while a professional lip reader who read the same footage reached 52.3%. However, it should be noted that LipNet was tested on video of volunteers speaking set sentences, and its accuracy was not tested on varied footage as the AI developed by Google was.

The footage Google's AI used to learn lip reading totals more than 5,000 hours and comes from programs such as "Newsnight," "Question Time," and "World Today." These programs contain 118,000 different sentences and 17,500 unique words, whereas the video used to test LipNet contains only 51 unique words.

DeepMind researchers believe this lip-reading software could be useful in a variety of fields. Beyond helping hearing-impaired people understand conversations, it could be used to annotate silent films or to improve the accuracy of speech-recognition AI such as Siri and Alexa.

According to the researchers, lip-reading accuracy differs greatly between TV footage shot at high resolution under bright lighting and low-quality footage with a low frame rate. Even so, the overseas news outlet The Verge rates the accuracy of the AI's lip-reading technology highly, saying that "AI seems to fill up even the difference."

in Software, Posted by logu_ii