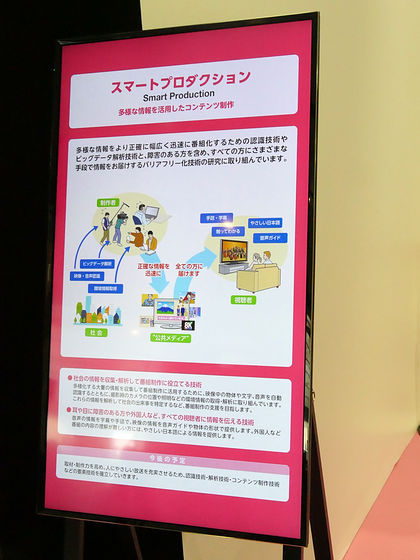

"Smart Production" technology that automatically collects and analyzes video, sound, and big data from all over the world, creates content from it, and reliably delivers it to the audience

News programs distributed on television and the net are currently made from information gathered by hand and from reporters' coverage, but the day may come when that "common sense" changes. At NHK Giken Open 2016, which is open to the public from Thursday the 26th to Sunday the 29th in 2016, the mechanism of "Smart Production" is on exhibit: a technology that uses automatic computer recognition to accurately pick out information from the flood circulating in the world, creates programs from it, and conveys them quickly to a wide range of people.

NHK Giken Open 2016 ~ Experience broadcasting technology as it continues to evolve ~

https://www.nhk.or.jp/strl/open2016/

The "Smart Production" corner in the entrance lobby. An outline of the technology is explained using a large number of displays.

Smart Production uses recognition technology and big data analysis to produce a wide range of programs accurately from the diverse information flooding the Internet and elsewhere, and it extends all the way to delivering that information by some means to everyone, including people with visual or hearing impairments. It may greatly change the flow of news gathering and program production in the future.

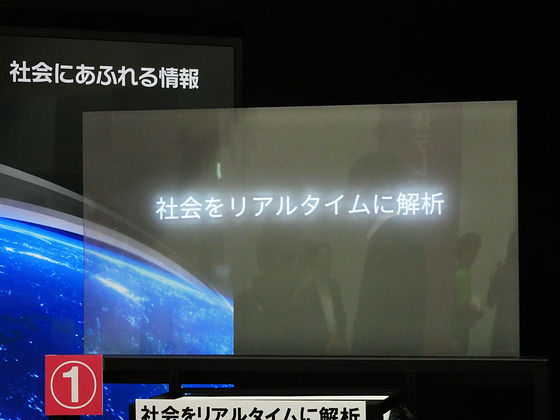

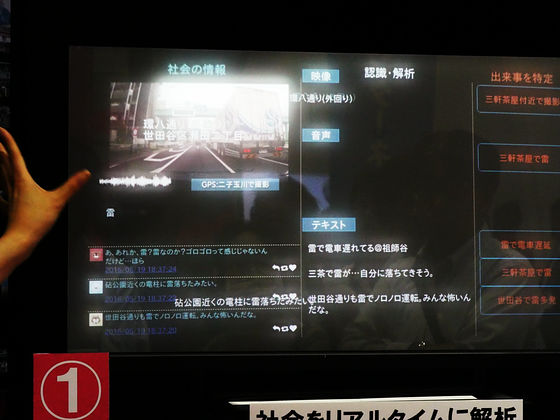

A great deal of information, such as news, video, and text, circulates through society ...

In Smart Production, society is analyzed in real time based on this information.

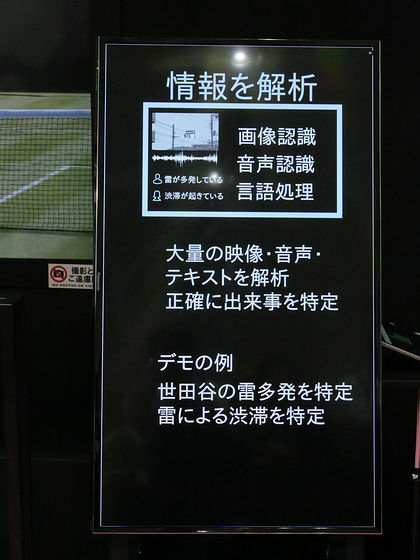

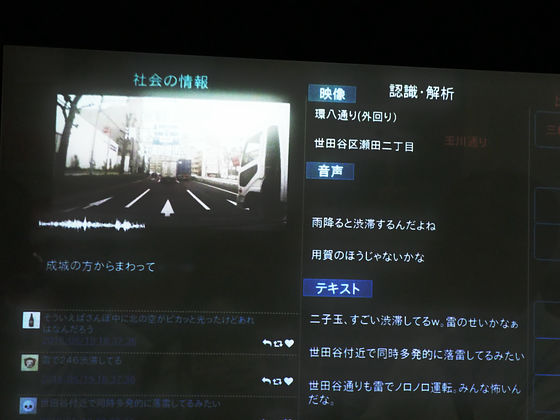

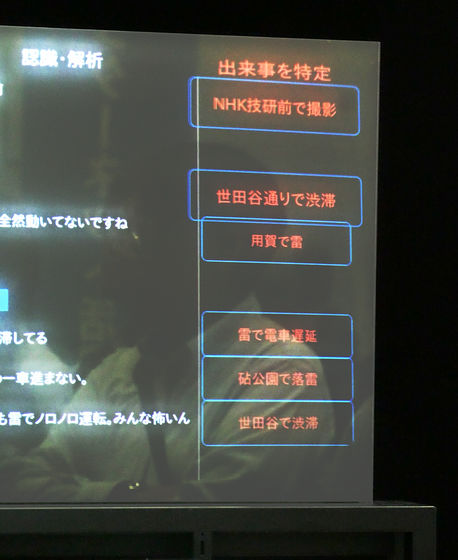

For example, the exhibit explains step by step how images, sounds, and text are analyzed to accurately identify an event such as "heavy lightning is striking in Setagaya and causing traffic jams".

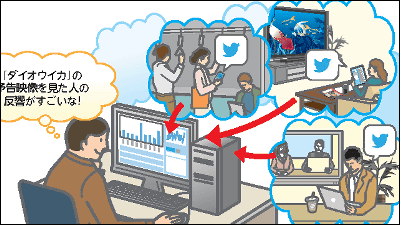

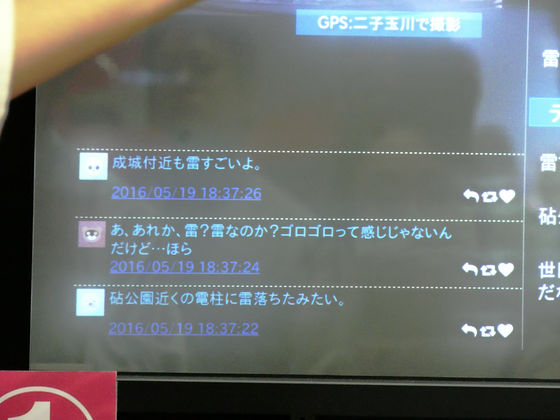

The images captured by the camera and tweets on the net are monitored in real time.

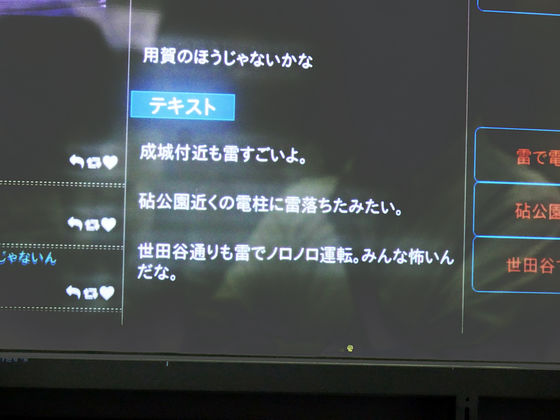

Phrases posted on the net, such as "the thunder is amazing" and "lightning just struck", are analyzed ...

... and whatever can serve as information is picked out.
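The monitoring of posts described above can be illustrated with a minimal sketch. The exhibit did not disclose the actual method, so everything here is an assumption: the keyword list, the `detect_bursts` function, and the idea of flagging a burst when enough matching posts fall inside a sliding time window.

```python
from collections import deque

# Hypothetical keyword list; the real vocabulary was not disclosed.
KEYWORDS = ("thunder", "lightning")


def mentions_keyword(text, keywords=KEYWORDS):
    """Return True if the post text contains any watched keyword."""
    lowered = text.lower()
    return any(word in lowered for word in keywords)


def detect_bursts(posts, window_seconds=60, threshold=3):
    """Return timestamps at which matching posts in the window reach the threshold.

    posts: iterable of (timestamp_seconds, text), sorted by timestamp.
    """
    recent = deque()  # timestamps of matching posts still inside the window
    alerts = []
    for timestamp, text in posts:
        if not mentions_keyword(text):
            continue
        recent.append(timestamp)
        # Drop matches that have fallen out of the sliding window.
        while recent and timestamp - recent[0] > window_seconds:
            recent.popleft()
        if len(recent) >= threshold:
            alerts.append(timestamp)
    return alerts


posts = [
    (0, "Lunch was great"),
    (10, "Wow, thunder!"),
    (20, "Lightning just struck nearby"),
    (25, "that thunder was loud"),
    (300, "thunder again?"),
]
print(detect_bursts(posts))  # [25]
```

A plain substring match is used only to keep the sketch short; a real system for Japanese text would need proper tokenization and some handling of irrelevant uses of the same words.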

In addition, further sources of information are collected and accumulated by recognizing lightning strikes in video captured by in-vehicle cameras and by recognizing the occurrence of lightning from audio data.

Based on this information, events such as "lightning strike in front of NHK Giken", "traffic jam on Setagaya Dori", and "train delays due to lightning" are identified automatically. Coverage by human reporters remains important to news gathering even now, but NHK is developing means of gathering information that use this kind of recognition technology.
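One way to picture how text, video, and audio evidence might be combined into a single event is the sketch below. It is not NHK's method: the `identify_events` function, the dictionary keys, and the rule that an event needs at least two distinct source types are all assumptions made for illustration.

```python
from collections import defaultdict


def identify_events(observations, min_sources=2):
    """Keep (event, place) pairs that were observed by enough distinct source types.

    observations: iterable of dicts with the hypothetical keys
    "source", "event", and "place".
    """
    sources_by_event = defaultdict(set)
    for obs in observations:
        sources_by_event[(obs["event"], obs["place"])].add(obs["source"])
    return sorted(
        key for key, sources in sources_by_event.items()
        if len(sources) >= min_sources
    )


observations = [
    {"source": "text", "event": "lightning", "place": "Setagaya"},
    {"source": "video", "event": "lightning", "place": "Setagaya"},
    {"source": "audio", "event": "lightning", "place": "Setagaya"},
    {"source": "text", "event": "traffic jam", "place": "Setagaya Dori"},
]
print(identify_events(observations))  # [('lightning', 'Setagaya')]
```

Requiring agreement between independent source types is a simple guard against acting on a single noisy signal, such as one misleading post.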

This is how Smart Production identifies events, but a news organization only fulfils its role once it has disseminated that information widely.

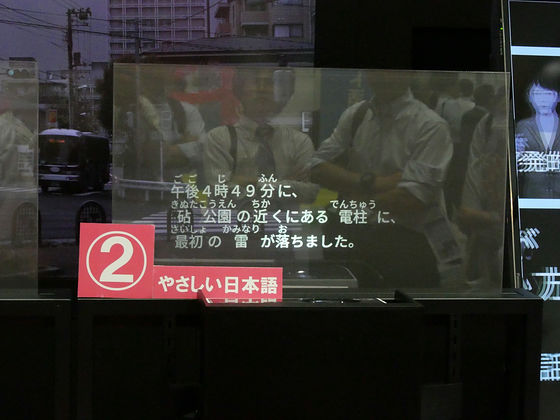

Most people can be reached by radio, television, the net, and so on, but for small children and for foreigners who are not very familiar with Japanese, there is a system that delivers the news in easy Japanese ...

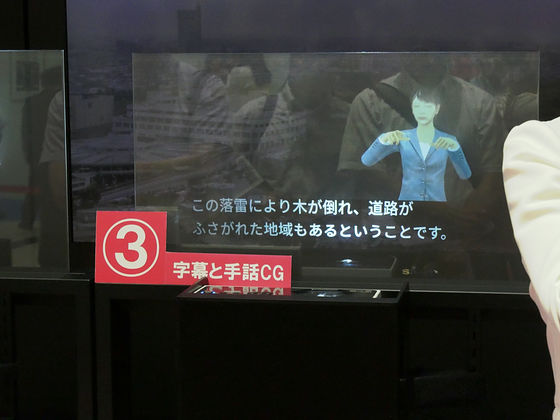

Barrier-free ways of conveying information are also being developed, such as subtitles displayed on the screen and sign language generated automatically with CG.

For people who have difficulty accessing information because they are out and about, a system that conveys information through speakers installed on street corners has also been developed.

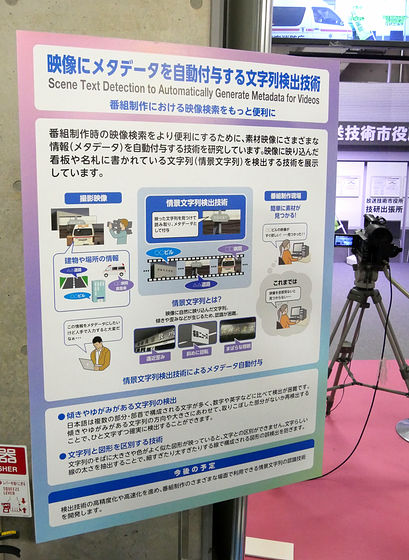

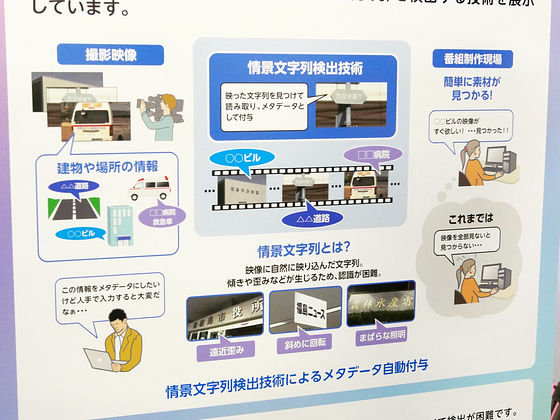

The image recognition technology that actually performs the recognition was also exhibited. This corner shows character string detection technology, which analyzes images taken by a camera and automatically assigns metadata.

Thanks to advances in image analysis technology, characters appearing in video can be recognized without human effort, converted into usable text data, and attached as metadata.

For example, when the camera shoots a set like this ...

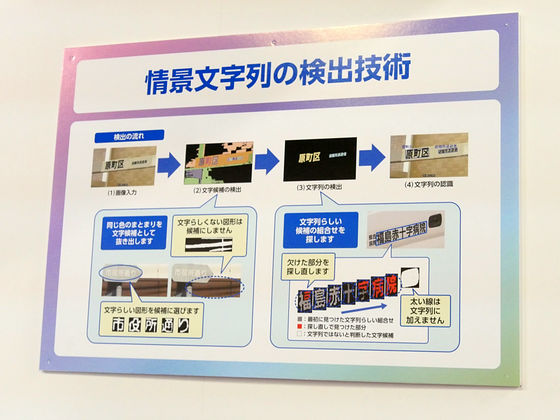

On the computer, processing runs in the order "1. detection of character candidates" → "2. detection of character strings" → "3. recognition of character strings" → "4. application of effects", and usable metadata is attached.

Various algorithms seem to be used for character string detection.
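The exhibit did not say which algorithms are used, so the following is only a sketch of one simple idea for step 2: chaining character candidate boxes into strings when they sit side by side on roughly the same line. The function name `group_into_strings` and the `max_gap` and `min_overlap` parameters are hypothetical.

```python
def group_into_strings(boxes, max_gap=10, min_overlap=0.5):
    """Chain character candidate boxes into horizontal text lines.

    boxes: list of (x, y, width, height) tuples in pixels.
    A box joins a line if it is horizontally close to the line's last box
    and the two overlap vertically by at least min_overlap of the shorter box.
    """
    strings = []
    for box in sorted(boxes, key=lambda b: b[0]):  # scan left to right
        x, y, w, h = box
        for line in strings:
            lx, ly, lw, lh = line[-1]
            gap = x - (lx + lw)  # horizontal distance to the previous box
            overlap = min(y + h, ly + lh) - max(y, ly)  # shared vertical extent
            if gap <= max_gap and overlap >= min_overlap * min(h, lh):
                line.append(box)
                break
        else:
            # No existing line accepted the box, so it starts a new one.
            strings.append([box])
    return strings


boxes = [
    (0, 0, 10, 20), (12, 1, 10, 20), (24, 0, 10, 20),  # three adjacent characters
    (100, 0, 10, 20),                                  # far to the right
    (0, 50, 10, 20),                                   # on a lower line
]
print([len(s) for s in group_into_strings(boxes)])  # [3, 1, 1]
```

Each resulting group would then be passed to a recognizer in step 3; that stage is outside the scope of this sketch.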

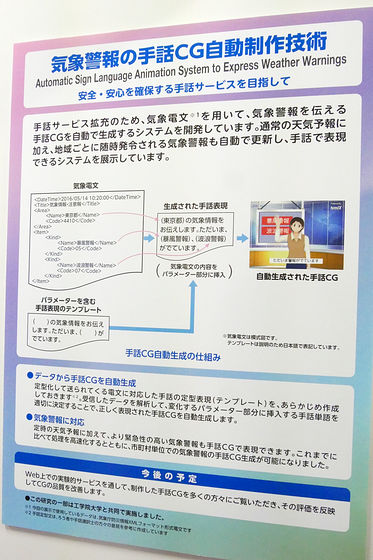

Techniques for automatically producing sign language CG are also being developed, in order to convey urgent information such as weather warnings to people with disabilities. Weather bulletins sent in a data format such as XML are analyzed and inserted into templates of sign language expressions, and sign language CG is generated from the result. The system allows information to be conveyed in sign language immediately, without requiring sign language interpreters.
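The parse-then-fill-a-template flow can be sketched as follows. The XML layout, the tag names, and the `TEMPLATES` table are invented for illustration and do not reflect the actual bulletin format or NHK's sign language templates; the output is a list of gloss labels that a CG renderer could, hypothetically, turn into animation.

```python
import xml.etree.ElementTree as ET

# Hypothetical template: an ordered list of sign glosses with slots to fill.
TEMPLATES = {
    "warning": ["{area}", "{phenomenon}", "WARNING", "ISSUED"],
}

# Hypothetical bulletin; real weather bulletins use a different schema.
SAMPLE_XML = """
<bulletin>
  <kind>warning</kind>
  <area>Setagaya</area>
  <phenomenon>lightning</phenomenon>
</bulletin>
"""


def bulletin_to_glosses(xml_text):
    """Parse a bulletin and fill the matching template with its fields."""
    root = ET.fromstring(xml_text.strip())
    fields = {child.tag: (child.text or "").strip() for child in root}
    template = TEMPLATES[fields["kind"]]
    return [slot.format(**fields).upper() for slot in template]


print(bulletin_to_glosses(SAMPLE_XML))
# ['SETAGAYA', 'LIGHTNING', 'WARNING', 'ISSUED']
```

Because the bulletin is already structured data, fixed templates can cover it without free-form translation, which fits the aim of sending out urgent information immediately.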

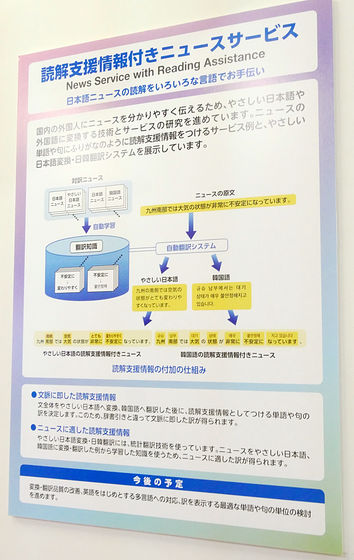

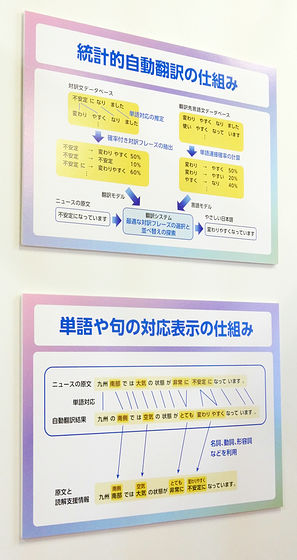

A technique called "news service with comprehension support information", which automatically adds phonetic readings to Japanese sentences for people who are not familiar with Japanese, is also being developed. It does not translate from Japanese into a foreign language; instead it analyzes the Japanese sentence and attaches Japanese or foreign-language annotations to difficult kanji.

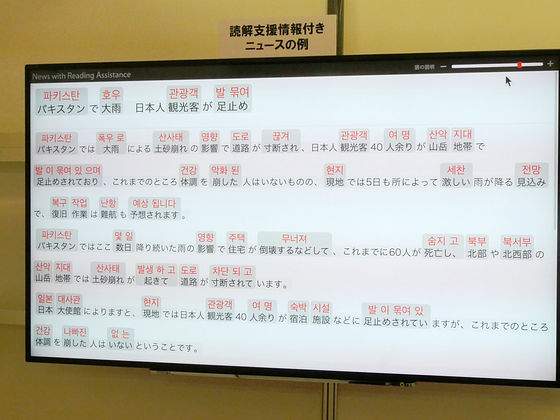

The original sentences read in the news can be analyzed grammatically, and the places judged difficult to read can be replaced with simple expressions. For example, information that supports reading comprehension is added, such as "air" for "atmosphere" and "easy to change" for "unstable", with the aim of delivering information reliably.
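As a rough illustration of attaching simpler wording to difficult terms, here is a dictionary-based sketch. It works on English text with a regular expression, whereas the exhibited system analyzes Japanese grammatically; the `GLOSSARY` table and the `annotate` function are hypothetical.

```python
import re

# Hypothetical glossary of difficult terms and simpler paraphrases.
GLOSSARY = {
    "atmosphere": "air",
    "unstable": "easy to change",
}


def annotate(sentence, glossary=GLOSSARY):
    """Append a simpler paraphrase in parentheses after each difficult word."""
    pattern = re.compile(
        r"\b(" + "|".join(map(re.escape, glossary)) + r")\b",
        re.IGNORECASE,
    )
    return pattern.sub(
        lambda m: f"{m.group(0)} ({glossary[m.group(0).lower()]})",
        sentence,
    )


print(annotate("The atmosphere is unstable today."))
# The atmosphere (air) is unstable (easy to change) today.
```

Keeping the original word and adding the paraphrase beside it, rather than rewriting the sentence, leaves the source wording intact for readers who do not need the help.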

In addition to Japanese, annotations can also be given in Korean. Automatic translation often produces strange sentences, so keeping the Japanese text as the base and replacing only the key points with a foreign language may indeed be a reasonable approach when the priority is conveying information reliably.
