Google reports that 'distillation attacks attempting to extract Gemini's capabilities to develop competing AI are on the rise'

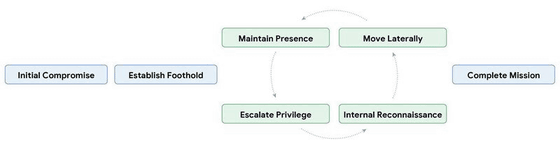

The Google Threat Intelligence Group (GTIG) has reported that threat actors are using AI to work more productively and to accelerate stages of the attack lifecycle, including social engineering and malware development.

GTIG AI Threat Tracker: Distillation, Experimentation, and (Continued) Integration of AI for Adversarial Use | Google Cloud Blog

Google DeepMind and GTIG have warned of an increase in 'distillation' attacks, which extract the knowledge embedded in AI models.

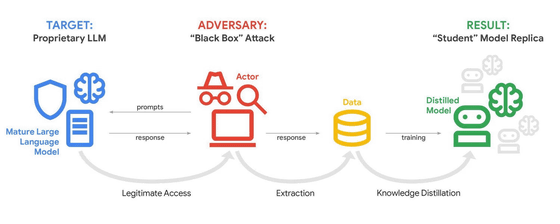

Attackers have long tried to steal trade secrets, but they have typically done so by breaking into systems and exfiltrating sensitive data. When an AI service built on a large language model (LLM) is offered to the public, however, attackers can try to replicate the model's functionality through legitimate API access alone, without infiltrating any system. This is called a distillation attack. Because a distillation attack lets attackers develop AI models more quickly and at significantly lower cost, GTIG points out that 'a distillation attack is effectively a form of intellectual property theft.'

While no direct attacks on frontier AI models or generative AI products by advanced persistent threat (APT) actors were observed in 2025, GTIG reported that distillation attacks were frequently attempted by private companies around the world and by researchers seeking to clone proprietary logic. GTIG also noted that distillation attacks violate Google's terms of service.

Google DeepMind and GTIG have identified and thwarted distillation attack attempts by researchers and private companies around the world.

GTIG writes that a common goal of threat actors launching distillation attacks is to capture Gemini's strong reasoning capabilities. Internal reasoning traces are typically summarized before being presented to the user, so attackers attempt to coerce the AI model into outputting its complete reasoning process.

In one of the identified attacks, Gemini was instructed to ensure that the language used in thought content strictly matched the main language of the user input.

Distillation attacks do not pose a risk to ordinary users because they do not threaten the confidentiality, availability, or integrity of AI services. Rather, the risk is concentrated on AI model developers and service providers.

Organizations that offer AI models as a service should monitor API access to identify extraction or distillation patterns. For example, a custom model tailored for financial data analysis could be targeted by competitors looking to develop derivative products, and coding models could be targeted by attackers looking to replicate functionality in an environment without guardrails.
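As a rough illustration of what such monitoring could look like, the sketch below flags accounts that combine a high request volume with a high share of prompts trying to elicit full reasoning output. It is not GTIG's or Google's detection method: the `ApiRequest` record, the phrase list, and both thresholds are hypothetical values chosen only for the example, and a real system would rely on far richer signals.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical phrases that may indicate attempts to elicit full reasoning traces.
TRACE_PATTERNS = ("step by step", "full reasoning", "chain of thought", "thinking process")


@dataclass
class ApiRequest:
    """Hypothetical log record; real API logs would carry many more fields."""
    account_id: str
    prompt: str


def flag_suspected_extraction(requests, min_requests=1000, min_trace_ratio=0.5):
    """Return account IDs with high volume and a high share of trace-eliciting prompts."""
    totals = defaultdict(int)
    trace_hits = defaultdict(int)
    for req in requests:
        totals[req.account_id] += 1
        text = req.prompt.lower()
        if any(pattern in text for pattern in TRACE_PATTERNS):
            trace_hits[req.account_id] += 1

    flagged = []
    for account, total in totals.items():
        # Both conditions must hold: enough traffic, and mostly trace-eliciting prompts.
        if total >= min_requests and trace_hits[account] / total >= min_trace_ratio:
            flagged.append(account)
    return sorted(flagged)
```

Requiring both conditions is a deliberate choice in this sketch: volume alone would flag ordinary heavy users, while phrase matching alone would flag anyone who occasionally asks for an explanation.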

To protect its proprietary logic and specialized training data, Google continuously detects, disrupts, and mitigates model extraction activity, including through real-time proactive defenses that can degrade the performance of the models attackers try to build from the extracted outputs.

Over the past year, government-sponsored attackers have consistently used Gemini to perform coding and scripting tasks, gather information on potential targets, and research known vulnerabilities.

In Q4 2025, GTIG found direct and indirect links between threat actors' misuse of Gemini and their activity in the wild, giving it a deeper understanding of how these efforts translate into real-world operations.

GTIG further reports that state-sponsored threat actors use LLMs as a critical tool for rapid technical reconnaissance, targeting, and phishing.

GTIG also observed threat actors expressing interest in building AI agents to assist in the development of malware and tools.

In addition, a malware family named 'HONESTCUE' has already been detected. It attempts to use Gemini's API to generate code that downloads and executes further malware.
