AMD releases 'Nitro-E,' a lightweight image generation AI model that can generate images in 0.16 seconds even with an iGPU

AMD released its image generation AI model, Nitro-E, on October 24, 2025. Nitro-E is a lightweight model with 304M (304 million) parameters, which makes both training and image generation fast.

Nitro-E: A 304M Diffusion Transformer Model for High Quality Image Generation — ROCm Blogs

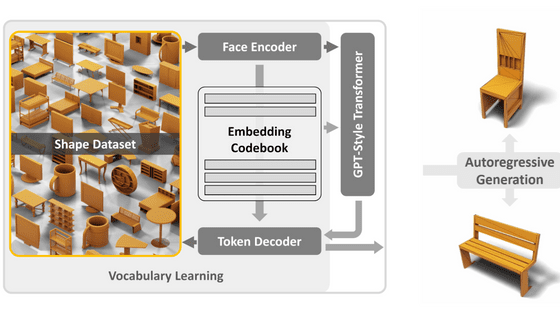

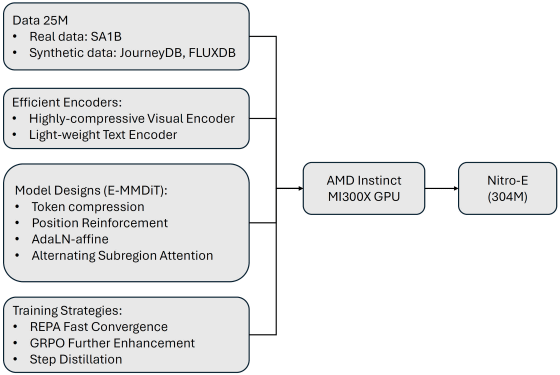

Nitro-E uses an architecture called 'E-MMDiT.' The original Stable Diffusion, released in 2022, used an architecture called 'U-Net,' but as of 2025, image generation models are increasingly built on the Transformer-based 'Diffusion Transformer (DiT)' and on the 'Multimodal Diffusion Transformer (MMDiT),' which is designed to handle text and images together. E-MMDiT is an improved version of the MMDiT architecture that adds mechanisms to speed up training and inference, such as a multi-path compression module that reduces the number of image tokens to be processed by 68.5%.
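To get a feel for what a 68.5% token reduction means, the sketch below does the arithmetic for a hypothetical sequence of 256 image tokens. The token count is an assumption chosen for illustration, not a figure from AMD. The quadratic estimate also assumes that every attention layer runs on the reduced sequence, which may not match how E-MMDiT actually applies compression.

```python
# Rough arithmetic for a 68.5% reduction in image tokens.
# ASSUMPTION: 256 tokens is a hypothetical sequence length, not an AMD figure.
TOKEN_REDUCTION = 0.685  # reduction quoted in the article


def tokens_after_reduction(num_tokens: int, reduction: float = TOKEN_REDUCTION) -> float:
    """Return how many tokens remain after removing `reduction` of them."""
    return num_tokens * (1.0 - reduction)


def relative_attention_cost(reduction: float = TOKEN_REDUCTION) -> float:
    """Self-attention compares every pair of tokens, so cost scales with n**2.

    ASSUMPTION: all attention runs on the reduced sequence.
    """
    return (1.0 - reduction) ** 2


if __name__ == "__main__":
    remaining = tokens_after_reduction(256)
    print(f"tokens remaining: {remaining:.1f} of 256")
    print(f"attention pairs vs. uncompressed: {relative_attention_cost():.1%}")
```

Under these assumptions, roughly a third of the tokens remain, and the number of token pairs that self-attention has to compare drops to about a tenth of the uncompressed case.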

The training data consisted of 11.1 million non-AI-generated images, 4.4 million images generated by Midjourney, and 9.5 million images generated by FLUX.1-dev. Nitro-E also employs the highly compressive visual encoder DC-AE and the lightweight, high-performance text encoder Llama-3.2-1B. With only 304M parameters, training can be completed in 1.5 days on a single AI processing node equipped with eight Instinct MI300X GPUs.
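The figures above can be put in perspective with a little arithmetic. The following is a minimal sketch that uses only the numbers quoted in this article; the function names are hypothetical, and the GPU-hour figure assumes all eight GPUs are busy for the full 1.5 days.

```python
# Training data mix and compute budget, using the figures quoted in the article.
DATASET_MILLIONS = {
    "non-AI-generated images": 11.1,
    "Midjourney-generated images": 4.4,
    "FLUX.1-dev-generated images": 9.5,
}
TRAINING_DAYS = 1.5
NUM_GPUS = 8  # one node with eight Instinct MI300X


def dataset_shares(sizes: dict[str, float]) -> dict[str, float]:
    """Return each source's fraction of the total image count."""
    total = sum(sizes.values())
    return {name: count / total for name, count in sizes.items()}


def gpu_hours(days: float, gpus: int) -> float:
    """Convert wall-clock days on `gpus` devices into GPU-hours.

    ASSUMPTION: every GPU is in use for the whole period.
    """
    return days * 24 * gpus


if __name__ == "__main__":
    total = sum(DATASET_MILLIONS.values())
    print(f"total images: {total:.1f} million")
    for name, share in dataset_shares(DATASET_MILLIONS).items():
        print(f"  {name}: {share:.1%}")
    print(f"training budget: {gpu_hours(TRAINING_DAYS, NUM_GPUS):.0f} GPU-hours")
```

In total this is about 25 million images, a bit over half of them AI-generated, and a training budget of 288 GPU-hours.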

Inference (image generation) is also extremely fast: a single Instinct MI300X can generate 512x512-pixel images at 18.8 samples per second, and the distilled model reaches 39.3 samples per second. Furthermore, Nitro-E can generate an image in 0.16 seconds even on an iGPU.

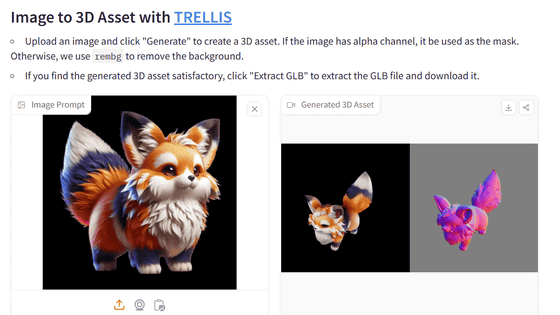
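Throughput (samples per second) and time per image are reciprocals of each other, so the speed figures in this article can be compared on one scale. The sketch below is a simple conversion with hypothetical helper names. It treats throughput as an average, so the per-image times for the MI300X would not be single-image latency if the throughput was measured with batching.

```python
# Convert between throughput (samples/s) and average time per image,
# using the speed figures quoted in the article.
MI300X_STANDARD_SPS = 18.8   # standard model on one Instinct MI300X
MI300X_DISTILLED_SPS = 39.3  # distilled model on one Instinct MI300X
IGPU_SECONDS_PER_IMAGE = 0.16  # iGPU figure from the headline


def seconds_per_image(samples_per_second: float) -> float:
    """Average time per image implied by a throughput figure."""
    return 1.0 / samples_per_second


def images_per_second(seconds: float) -> float:
    """Throughput implied by a per-image time."""
    return 1.0 / seconds


if __name__ == "__main__":
    for label, sps in [
        ("MI300X, standard", MI300X_STANDARD_SPS),
        ("MI300X, distilled", MI300X_DISTILLED_SPS),
    ]:
        print(f"{label}: {seconds_per_image(sps) * 1000:.1f} ms per image")
    print(f"iGPU: {images_per_second(IGPU_SECONDS_PER_IMAGE):.2f} images per second")
    speedup = MI300X_DISTILLED_SPS / MI300X_STANDARD_SPS
    print(f"distillation speedup on MI300X: {speedup:.2f}x")
```

By this conversion, the distilled model is roughly twice as fast as the standard one on the MI300X, and 0.16 seconds per image on an iGPU corresponds to about six images per second.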

Below is an example of an image generated by Nitro-E.

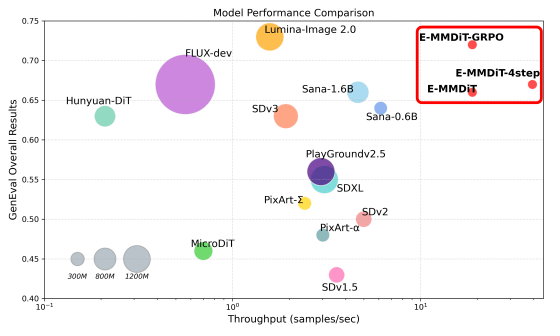

In addition to the standard version of Nitro-E, AMD has released a GRPO (Group Relative Policy Optimization) version optimized for various tasks and a 4Step version that uses distillation to generate images in four steps. The graph below compares the 'standard version of Nitro-E (E-MMDiT),' the 'GRPO version of Nitro-E (E-MMDiT-GRPO),' and the '4Step version of Nitro-E (E-MMDiT-4Step)' with various other image generation AI models. The vertical axis shows the quality of the generated images, the horizontal axis shows the number of samples generated per second, and the size of each circle shows the size of the model. The graph shows that the Nitro-E series achieves fast, high-quality image generation with a compact model.

The Nitro-E model data is available at the following link:

amd/Nitro-E · Hugging Face

https://huggingface.co/amd/Nitro-E
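For readers who want to fetch the files programmatically, the following is a minimal sketch using `snapshot_download` from the `huggingface_hub` package. It assumes that package is installed, and the local directory name is a hypothetical choice. It only downloads the repository contents; how to load the weights and run inference is not shown here.

```python
# Minimal sketch: download the Nitro-E repository from Hugging Face.
# ASSUMPTION: `huggingface_hub` is installed (pip install huggingface_hub).
REPO_ID = "amd/Nitro-E"  # taken from the Hugging Face URL above


def download_nitro_e(local_dir: str = "nitro-e-weights") -> str:
    """Download every file in the repository and return the local path.

    `local_dir` is a hypothetical directory name chosen for this example.
    """
    # Imported lazily so this module loads even without the dependency.
    from huggingface_hub import snapshot_download

    return snapshot_download(repo_id=REPO_ID, local_dir=local_dir)
```

Calling `download_nitro_e()` requires network access and downloads all files in the repository, so check the repository size beforehand.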

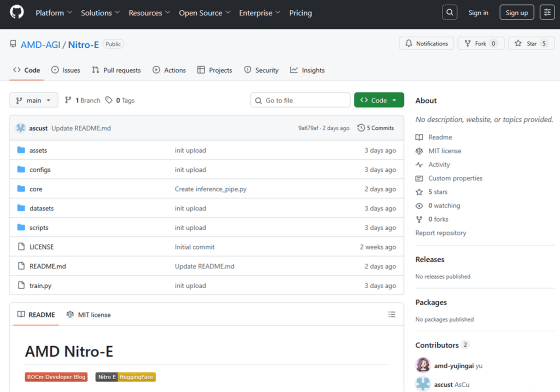

In addition, the training data and code used to train Nitro-E are available at the following link, so anyone can reproduce Nitro-E.

GitHub - AMD-AGI/Nitro-E

https://github.com/AMD-AGI/Nitro-E

in AI, Posted by log1o_hf