OpenAI releases its most advanced voice dialogue model, 'gpt-realtime,' and the 'Realtime API' is now official.

OpenAI has moved its 'Realtime API,' which lets developers build ChatGPT's real-time conversation functionality into their apps, from beta to general availability. It has also announced 'gpt-realtime,' a voice dialogue model (voice AI) intended for production use.

The Realtime API is officially out of beta and ready for your production voice agents!

pic.twitter.com/fX5yvt0CDD — OpenAI Developers (@OpenAIDevs) August 28, 2025

We're also introducing gpt-realtime—our most advanced speech-to-speech model yet—plus new voices and API capabilities:

🔌 Remote MCPs

🖼️ Image input

📞 SIP phone calling

♻️ Reusable prompts

The 'Realtime API' was released in public beta in October 2024. It reduces latency by having a single model process audio input and generate audio output directly, rather than chaining separate speech-to-text and text-to-speech steps.

OpenAI releases 'API that allows you to incorporate ChatGPT's real-time conversation function into your app' - GIGAZINE

According to OpenAI, after the public beta release, thousands of developers have implemented the API and identified areas for improvement. As a result, it has been optimized to achieve reliability, low latency, and high quality, allowing voice agents to be successfully deployed in production environments. In fact, real estate information site Zillow and telecommunications company T-Mobile have deployed voice agents that respond naturally.

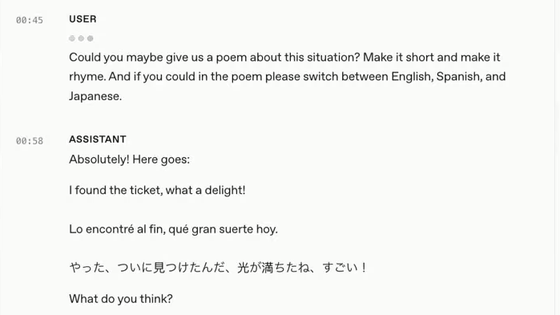

The newly announced voice interaction model, gpt-realtime, includes improvements such as understanding complex commands, accurately invoking tools, and generating more natural and expressive speech. According to OpenAI, it also improves the ability to interpret system messages and developer prompts, reads disclaimers verbatim in support calls, repeats alphanumeric characters, and seamlessly switches between languages within a sentence.

A video introducing and demonstrating gpt-realtime by OpenAI staff has been released.

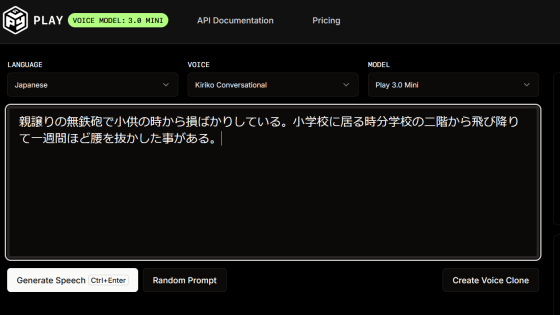

The video shows the model responding to speech with low latency and switching seamlessly between languages.

Natural conversation is essential for deploying voice agents, and gpt-realtime has been trained to generate higher-quality voices that sound more natural and follow detailed instructions. Two new voices, 'Cedar' and 'Marin,' have been added, and eight existing voices have been updated.

Here's a sample voice of Marin:

Here's a sample voice of Cedar:

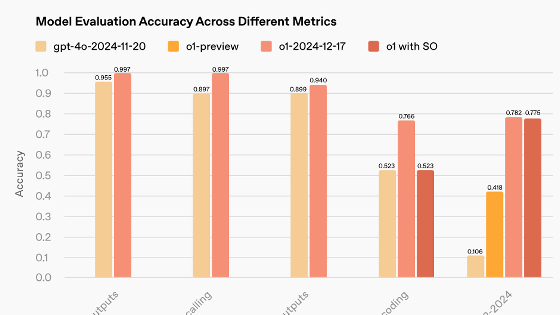

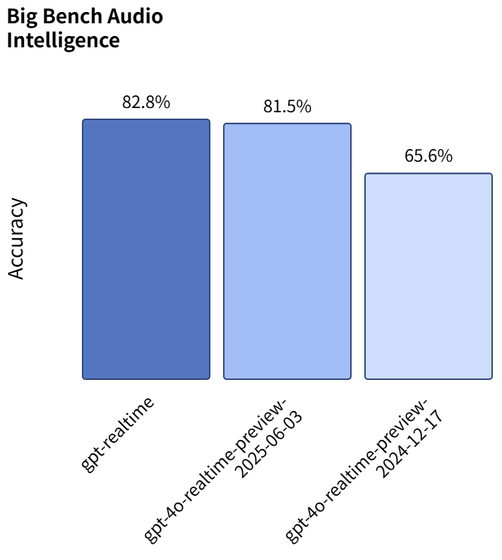

gpt-realtime's speech understanding has also improved. According to OpenAI, it detects alphanumeric sequences such as phone numbers more accurately, and on the Big Bench Audio evaluation, which measures reasoning ability, it scores 82.8%, up from 65.6% for the previous model released in December 2024.

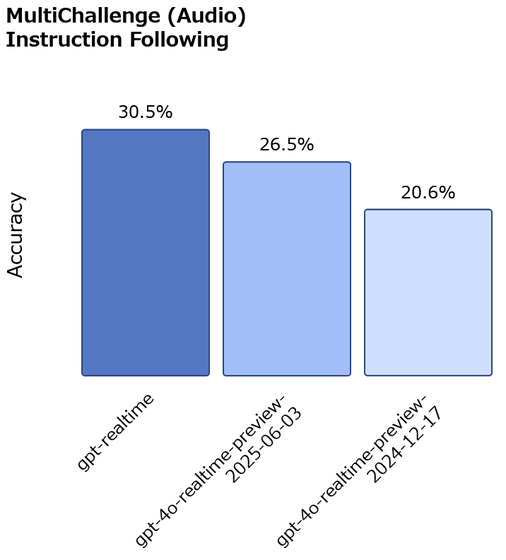

Developers also give the model behavioral guidelines, such as what to say and what to do in specific situations. OpenAI focused on improving the model's ability to follow these instructions, and gpt-realtime scores 30.5% on the MultiChallenge audio benchmark, which measures instruction-following accuracy. That is up from the 20.6% achieved by the previous model from December 2024.

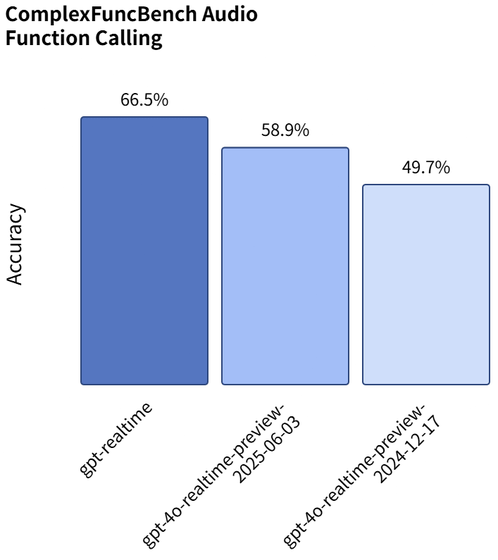

In terms of function calls, it also recorded a score of 66.5% in the ComplexFuncBench Audio Function Calling benchmark, significantly higher than the previous model's 49.7%.
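To put the three score pairs quoted above side by side, the short sketch below computes both the percentage-point gain and the relative gain for each. The scores are the ones given in this article; the dictionary labels and the helper name `gains` are illustrative only.

```python
# Scores quoted in the article: (previous model, gpt-realtime), in percent.
SCORES = {
    "Audio eval (65.6 -> 82.8)": (65.6, 82.8),
    "MultiChallenge": (20.6, 30.5),
    "ComplexFuncBench": (49.7, 66.5),
}


def gains(previous: float, current: float) -> tuple[float, float]:
    """Return (percentage-point gain, relative gain in percent)."""
    point_gain = current - previous
    relative_gain = point_gain / previous * 100
    return point_gain, relative_gain


RESULTS = {name: gains(prev, cur) for name, (prev, cur) in SCORES.items()}

for name, (points, relative) in RESULTS.items():
    print(f"{name}: +{points:.1f} points ({relative:.1f}% relative)")
```

The distinction matters when reading the numbers: a gain of roughly 10 points on MultiChallenge is a much larger relative jump than it looks, because the baseline was low.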

Other improvements include enabling MCP support and image input support.
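As a rough illustration of how a new voice and a remote MCP server might be configured together, the sketch below builds a `session.update` event as a plain dictionary. The field layout reflects the event shape as best recalled from OpenAI's documentation and should be treated as an assumption to verify against the current API reference. The function name, instructions text, server label, and server URL are all hypothetical.

```python
import json


def build_session_update(voice: str, instructions: str,
                         mcp_label: str, mcp_url: str) -> dict:
    """Build a session.update event (assumed shape; verify before use)."""
    return {
        "type": "session.update",
        "session": {
            "type": "realtime",
            "model": "gpt-realtime",
            "instructions": instructions,
            # Assumed location of the voice setting.
            "audio": {"output": {"voice": voice}},
            # Assumed shape of a remote MCP tool entry.
            "tools": [
                {
                    "type": "mcp",
                    "server_label": mcp_label,
                    "server_url": mcp_url,
                    "require_approval": "never",
                }
            ],
        },
    }


# Hypothetical values for illustration only.
event = build_session_update(
    voice="marin",
    instructions="You are a concise support agent.",
    mcp_label="example-tools",
    mcp_url="https://example.com/mcp",
)
payload = json.dumps(event)  # the string a client would send over its connection
print(payload)
```

The sketch stops at building the payload. Actually sending it requires an authenticated connection to the Realtime API, which is omitted here.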

gpt-realtime is priced 20% lower than gpt-4o-realtime-preview. Per million tokens, audio input costs $32 (approximately 4,700 yen), cached input costs $0.40 (approximately 59 yen), and audio output costs $64 (approximately 9,400 yen).
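As a quick way to turn these per-million-token prices into an estimate, the sketch below computes the cost of a session from token counts. The prices are the ones quoted in this article; the function names and the example token counts are hypothetical, and the previous-model price is only what the 20% figure implies, not a number stated here.

```python
# Prices in USD per one million tokens, as quoted in the article.
AUDIO_INPUT_PER_M = 32.00
CACHED_INPUT_PER_M = 0.40
AUDIO_OUTPUT_PER_M = 64.00


def estimate_cost(uncached_input: int, cached_input: int, output: int) -> float:
    """Estimate the USD cost of a session from its token counts."""
    total = (
        uncached_input * AUDIO_INPUT_PER_M
        + cached_input * CACHED_INPUT_PER_M
        + output * AUDIO_OUTPUT_PER_M
    )
    return total / 1_000_000


def implied_previous_price(new_price: float, discount: float = 0.20) -> float:
    """Price before the discount, inferred from the stated 20% reduction."""
    return new_price / (1 - discount)


# Hypothetical workload: 500k uncached input, 200k cached input, 300k output tokens.
cost = estimate_cost(500_000, 200_000, 300_000)
print(f"Estimated cost: ${cost:.2f}")
print(f"Implied previous audio input price: ${implied_previous_price(AUDIO_INPUT_PER_M):.2f}")
```

Because audio output tokens cost twice as much as audio input tokens, how much the agent speaks tends to dominate the bill in this kind of estimate.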


in Software, Posted by logc_nt