Survey finds that 'almost all' college students use generative AI, sending shockwaves through education

On February 26, 2025, the Higher Education Policy Institute (HEPI), a British think tank, published a report stating that more than nine in ten British undergraduate students are using AI in some form, up sharply from about two-thirds in the previous year's survey. Experts say that such a sudden change in student behavior is unprecedented.

Student Generative AI Survey 2025 - HEPI

Surge in UK university students using AI to complete work

https://www.ft.com/content/d591fb1a-9f6c-4345-b5fc-781e091ae3f8

For its 'Student Generative AI Survey 2025,' released on February 26, 2025, HEPI followed up on its previous survey of February 2024 by commissioning the market research consultancy Savanta to poll 1,041 full-time undergraduate students about their use of generative AI tools.

The results showed that the percentage of students using AI in some form jumped from 66% in 2024 to 92% in just one year. The percentage of students using generative AI for assessed work, such as exams, assignments, and papers, also rose, from 53% the previous year to 88%.

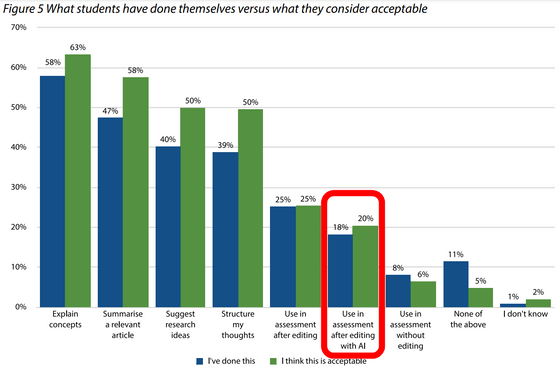

When asked how they use generative AI, most students (see figure below) described supportive uses, such as explaining concepts, summarizing papers, and suggesting research ideas. However, a significant proportion (18%) of students (shown in the red box in the figure below) said they had included AI-generated text directly in their own work.

'Such a sudden change in student behavior is almost unheard of. This is an urgent lesson for education institutions. All assessment methods will need to be reconsidered to see if they can be easily replaced by AI. This will require a bold retraining of faculty on the power and potential of generative AI,' said Josh Freeman, policy manager at HEPI, suggesting that universities will be forced to fundamentally change the way they assess students.

The survey also highlights a persistent 'digital divide' in the use of generative AI, with wealthier students more likely to use it than poorer students, and male students more likely to use it frequently than female students.

Only 29% of humanities students felt that AI-generated content would help them perform better in their subjects, but nearly half (45%) of students pursuing science, engineering or medical degrees said they felt the same way.

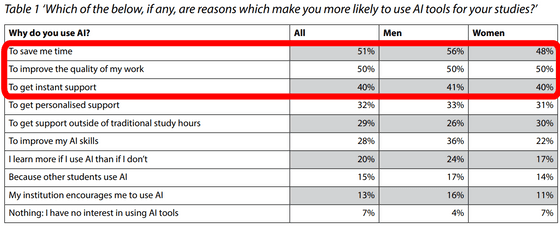

In the survey, undergraduate students cited 'saving time' (top row in the chart below) as the primary reason for using AI in their studies. A similar percentage of students also cited 'improving the quality of their work' (second row from the top), and a significant number cited 'receiving instant support' (third row from the top).

Universities are also making progress in addressing AI: the percentage of students who believe that staff are well equipped to support their use of AI more than doubled, from 18% to 42%, in one year. However, many students still complain that the rules on AI use are unclear.

'Everything is still very vague and it's unclear when and why we can use AI,' one student told the survey, while another said, 'The university is skirting the issue. AI isn't banned, but it's not encouraged either. If we use AI it would be academic misconduct, yet our lecturers tell us they are using AI. It's a very conflicting message.'

In the UK, Science Secretary Peter Kyle recently caused controversy when, asked in a January 2025 BBC interview whether children should use ChatGPT to do their homework, he answered, 'Yes, as long as they are supervised.' The Financial Times suggests that the survey results will have a major impact on the UK education sector.

“Is it ok for kids to use ChatGPT to do their homework?” asks #BBCLauraK

“With supervision, then yes… we need to make sure kids are learning how to use this technology” says Science Secretary Peter Kyle

https://t.co/YgnXu7F8RI pic.twitter.com/nFlFjjADlh

— BBC Politics (@BBCPolitics) January 12, 2025

Janice Kay, director of the higher education consultancy Higher Futures and author of the report's foreword, told the Financial Times: 'While there is little evidence that AI tools are being misused to cheat or game the system, there are many signs that they pose serious challenges for learners, teachers and institutions, which higher education must address as it transforms.'
