Google announces 'Willow', a quantum chip that can perform calculations that would take 10^25 years on a supercomputer in just 5 minutes

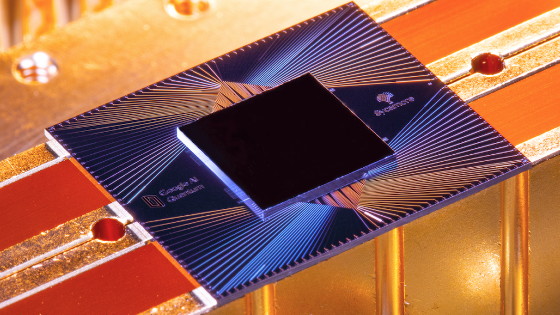

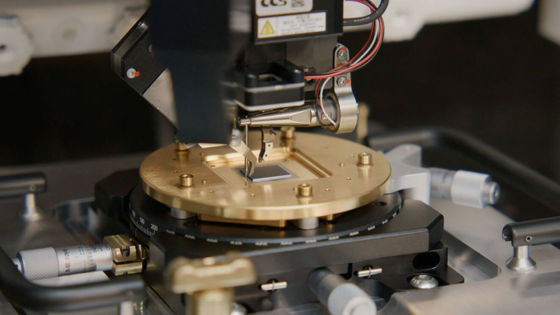

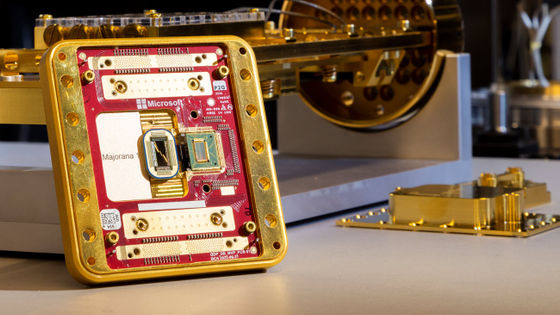

Google Quantum AI Lab, Google's quantum computing research division, has announced a new quantum chip called 'Willow,' which is equipped with 105 qubits and achieves exponential improvements in quantum error correction and ultra-fast calculations.

Meet Willow, our state-of-the-art quantum chip

Quantum error correction below the surface code threshold | Nature

https://www.nature.com/articles/s41586-024-08449-y

Google reveals quantum computing chip with 'breakthrough' achievements - The Verge

https://www.theverge.com/2024/12/9/24317382/google-willow-quantum-computing-chip-breakthrough

Google gets an error-corrected quantum bit to be stable for an hour - Ars Technica

https://arstechnica.com/science/2024/12/google-gets-an-error-corrected-quantum-bit-to-be-stable-for-an-hour/

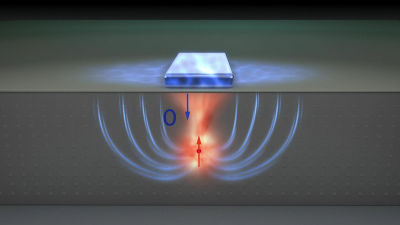

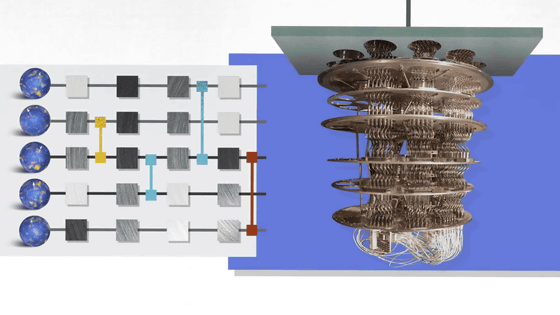

Conventional computers (classical computers) perform calculations using binary digits, each either '0' or '1.' Quantum computers, by contrast, use quantum bits (qubits), which can exist in a combination of '0' and '1' at the same time through a phenomenon called quantum superposition. For certain problems, this makes it possible to perform calculations at speeds that classical computers cannot match.

However, because quantum bits have the property of 'rapidly exchanging information with the environment,' they are subject to various influences from the outside world, causing errors. Therefore, even though it is theoretically possible to perform calculations at explosive speeds, in reality there is an increase in errors, making it impossible to perform high-precision calculations for long periods of time. It is no exaggeration to say that the biggest challenge in quantum computing is how to correct these errors.

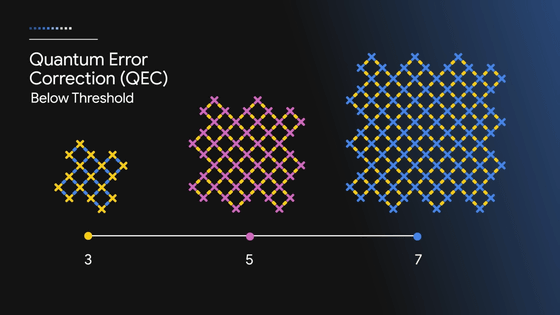

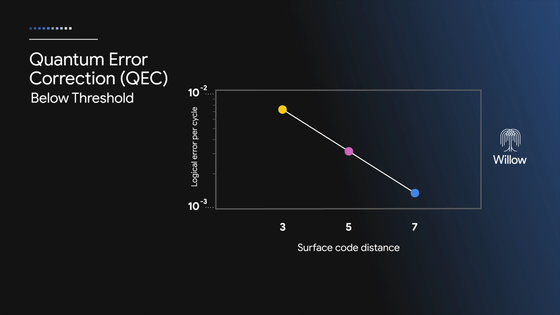

Willow has 105 qubits arranged in a lattice, making it possible to configure logical qubits in square arrays such as 3 x 3 bits, 5 x 5 bits, and 7 x 7 bits.
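As a rough illustration of how such arrays fit on a 105-qubit chip, the sketch below counts the physical qubits needed for each array size. It assumes the standard rotated surface code layout, in which a d x d grid of data qubits is paired with d² − 1 measurement qubits. The article does not spell out this layout, and the function name is hypothetical.

```python
def surface_code_qubits(distance: int) -> dict[str, int]:
    """Count physical qubits in a rotated surface code of the given distance.

    Assumption (not stated in the article): the standard rotated layout,
    with distance**2 data qubits and distance**2 - 1 measurement qubits.
    """
    if distance < 3 or distance % 2 == 0:
        raise ValueError("distance must be an odd integer >= 3")
    data = distance ** 2
    measure = distance ** 2 - 1
    return {"data": data, "measure": measure, "total": data + measure}


for d in (3, 5, 7):
    counts = surface_code_qubits(d)
    print(f"{d} x {d}: {counts['data']} data + {counts['measure']} measure "
          f"= {counts['total']} qubits")
```

Under this assumption the 7 x 7 array needs 97 physical qubits, which fits within Willow's 105.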

According to a paper published in Nature by the Google Quantum AI Lab, they were able to reduce the error rate by almost half at each step by expanding the quantum bit array from 3x3 to 5x5 to 7x7.
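To see what 'almost half at each step' implies, the following sketch projects the logical error rate as the array grows. The suppression factor of 2 is an approximation of the reported 'almost half,' and the starting error rate of 1% is a hypothetical placeholder, not a figure from the paper.

```python
def projected_logical_error(base_error: float, distance: int,
                            suppression: float = 2.0,
                            base_distance: int = 3) -> float:
    """Project the logical error rate when each step up in array size
    (3 -> 5 -> 7 -> ...) divides the error by `suppression`.

    `suppression=2.0` approximates the "almost half" reported in the
    article; `base_error` is an illustrative input, not a measured value.
    """
    if distance < base_distance or (distance - base_distance) % 2 != 0:
        raise ValueError("distance must be base_distance plus an even number")
    steps = (distance - base_distance) // 2
    return base_error / suppression ** steps


# Hypothetical 1% error rate for the 3x3 array.
for d in (3, 5, 7, 9, 11):
    print(f"{d}x{d}: {projected_logical_error(0.01, d):.5f}")
```

Because the error is divided by a constant factor at every step, the reduction is exponential in the array size, which is why larger arrays become more reliable rather than less.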

In conventional quantum systems, it is said that the more qubits you add, the more errors there are, and the closer the system approaches classical behavior. This is the '

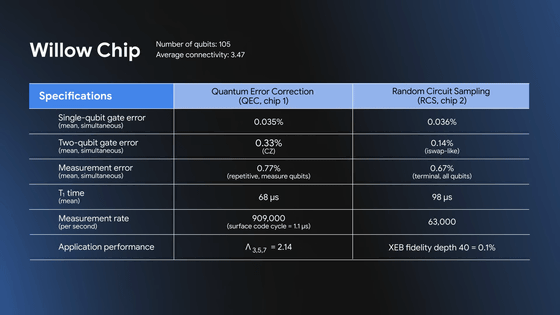

Willow achieves low error rates of 0.035% ± 0.029% for single-qubit gates and 0.33% ± 0.18% for two-qubit gates.

The T1 time, which indicates how long Willow's qubits can maintain an excited state, has reached 68 ± 13 microseconds, about a five-fold improvement over the previous generation. Willow can also perform 909,000 error correction cycles per second. In quantum computing, both the T1 time and the error correction speed are very important: if errors cannot be corrected quickly enough while the calculation is running, the results will be destroyed.
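The relationship between these two figures can be checked with simple arithmetic. The sketch below uses only the numbers quoted above (the central T1 value of 68 microseconds and 909,000 cycles per second); the variable names are illustrative.

```python
# Figures quoted in the article.
CYCLES_PER_SECOND = 909_000   # error correction cycles per second
T1_MICROSECONDS = 68.0        # central value of the reported 68 +/- 13 us

# Duration of one error correction cycle, in microseconds.
cycle_time_us = 1_000_000 / CYCLES_PER_SECOND

# How many correction cycles fit into one T1 interval.
cycles_per_t1 = T1_MICROSECONDS / cycle_time_us

print(f"One cycle takes about {cycle_time_us:.2f} microseconds")
print(f"About {cycles_per_t1:.0f} cycles fit within one T1 time")
```

One cycle takes roughly 1.1 microseconds, so on the order of 60 correction cycles fit within a single T1 interval.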

The Google Quantum AI Lab claims that Willow's achievement is groundbreaking in that it not only demonstrates theoretical feasibility, but also demonstrates it on an actual superconducting quantum chip. It is expected to be important experimental evidence that strongly suggests the feasibility of large-scale fault-tolerant quantum computers.

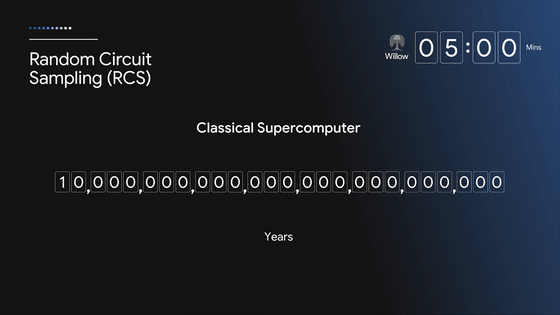

In addition, Google Quantum AI Lab reported another result: Willow completed in less than 5 minutes a calculation that would take 10^25 years on the fastest supercomputer at the time of writing. However, this conclusion is based on running a benchmark called random circuit sampling (RCS) on Willow and estimating what it would take to reproduce the sampling results on a classical computer.
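For a sense of scale, the sketch below converts the two quoted durations into a single ratio. The age of the universe (roughly 1.38 x 10^10 years) is a commonly cited approximate figure added here for comparison and does not come from the article's text; the variable names are illustrative.

```python
# Figures quoted in the article.
CLASSICAL_YEARS = 1e25   # estimated time on the fastest supercomputer
WILLOW_MINUTES = 5.0     # upper bound on Willow's run time

# Assumption for comparison only: approximate age of the universe in years.
UNIVERSE_AGE_YEARS = 1.38e10

MINUTES_PER_YEAR = 365.25 * 24 * 60

# Ratio of the classical estimate to Willow's run time.
speedup = CLASSICAL_YEARS * MINUTES_PER_YEAR / WILLOW_MINUTES

# Classical estimate expressed in multiples of the age of the universe.
universe_ages = CLASSICAL_YEARS / UNIVERSE_AGE_YEARS

print(f"Implied ratio: about {speedup:.2e}")
print(f"Classical estimate: about {universe_ages:.1e} times the age of the universe")
```

The ratio applies only to the RCS benchmark and says nothing about performance on other workloads.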

'Advanced AI will benefit greatly from the use of quantum computing, which is essential for collecting training data that is inaccessible to classical machines, for training and optimizing specific learning architectures, and for modeling systems where quantum effects are important,' the Google Quantum AI Lab said.

'We see Willow as an important step in our efforts to build a useful quantum computer with practical applications in areas like drug discovery, fusion energy and battery design,' said Google CEO Sundar Pichai.

We see Willow as an important step in our journey to build a useful quantum computer with practical applications in areas like drug discovery, fusion energy, battery design + more. Details here: https://t.co/dgPuXOoBSZ

— Sundar Pichai (@sundarpichai) December 9, 2024

in Hardware, Posted by log1i_yk