Google reports that 'Big Sleep,' its AI-powered program for finding code vulnerabilities, has discovered a previously unknown bug

Google has reported that it used AI to discover an exploitable bug in the open-source database engine SQLite. The bug has since been reported to the developers and fixed.

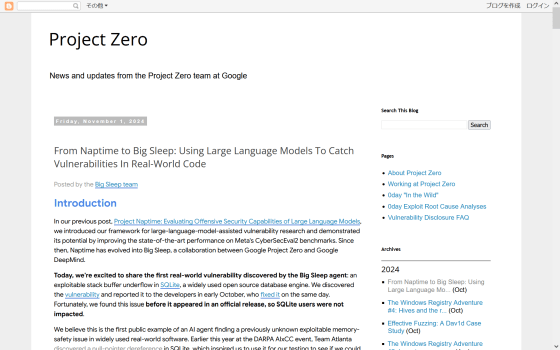

Project Zero: From Naptime to Big Sleep: Using Large Language Models To Catch Vulnerabilities In Real-World Code

Google's 'Big Sleep' AI Project Uncovers Real Software Vulnerabilities | PCMag

From Naptime to Big Sleep: Using Large Language Models To Catch Vulnerabilities In Real-World Code

https://simonwillison.net/2024/Nov/1/from-naptime-to-big-sleep/

Google is building 'Big Sleep,' an AI-based system that mimics the workflow of human security researchers scrutinizing code to find bugs that may pose security issues.

According to Google, the team built a system based on Gemini 1.5 Pro for the project. When they had it review recent changes to the SQLite codebase, it found a previously undiscovered bug.

Google then reported the vulnerability to the SQLite developers, who responded by releasing a fix.

Based on these discoveries, Google said, 'Our AI is smart enough to independently find vulnerabilities in real-world software.'

'We continued our investigation by inducing a bug that crashed SQLite to check for variants of previously reported vulnerabilities,' the Google researchers wrote. 'Large language models are good at pattern matching, so by feeding them patterns that point to past vulnerabilities, they can identify potential new ones. The key to this discovery is that large language models are well suited to searching for new variants of previously reported vulnerabilities.'

'We believe this is the first example of an AI finding an exploitable, previously unknown problem in widely used, real-world software. As attacks against variants of previously discovered and patched vulnerabilities continue to grow unchecked, we believe this is a promising discovery that could ultimately turn the tables and provide an advantage to defenders,' the researchers added.

This is not the first time that AI has discovered software flaws. For example, in August 2024, an LLM-based system called 'Atlantis' discovered a different bug in SQLite.

Still, Google said, 'These results from our own AI programs demonstrate that it's possible to use large language models to find complex bugs before software is released,' adding that in the future this approach could make finding and fixing such problems cheaper and more effective.

Project Big Sleep was previously called 'Project Naptime,' a name reflecting the half-joking hope that the AI would become good enough to give Google's researchers time to take a nap.
