Two researchers who laid the foundations of AI and machine learning with artificial neural networks receive the Nobel Prize in Physics

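The Royal Swedish Academy of Sciences has announced that the Nobel Prize in Physics 2024 will be awarded to Professor Emeritus John Hopfield of Princeton University and Professor Emeritus Geoffrey Hinton of the University of Toronto.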

Press release: The Nobel Prize in Physics 2024 - NobelPrize.org

https://www.nobelprize.org/prizes/physics/2024/press-release/

UC Emeritus Professor Geoffrey Hinton wins Nobel Prize in Physics | University College U of T

https://www.uc.utoronto.ca/news/uc-emeritus-professor-geoffrey-hinton-wins-nobel-prize-physics

PNI's John Hopfield receives Nobel Prize in physics | Princeton Neuroscience Institute

https://pni.princeton.edu/news/2024/pnis-john-hopfield-receives-nobel-prize-physics

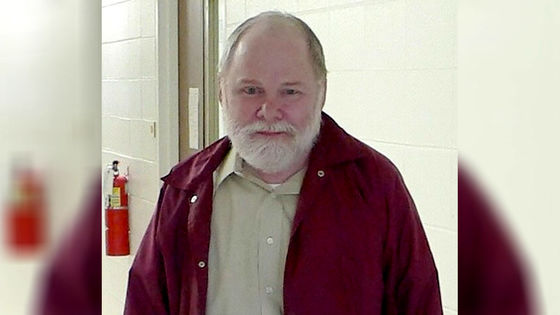

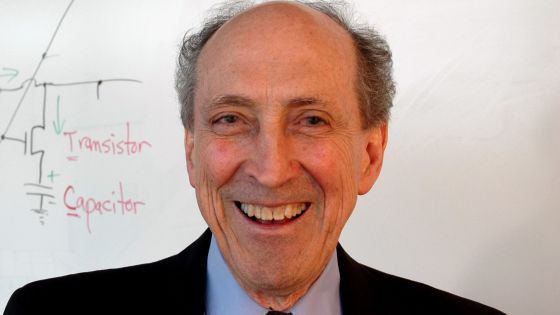

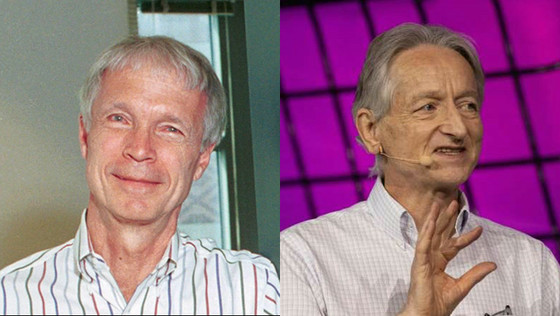

Professors Hopfield (left) and Hinton (right), winners of the Nobel Prize in Physics

The Royal Swedish Academy of Sciences stated, 'Professor Hopfield developed associative memories that can store and reconstruct images and other patterns in data. Professor Hinton invented techniques that can autonomously find properties in data and perform tasks such as identifying specific elements in an image.'

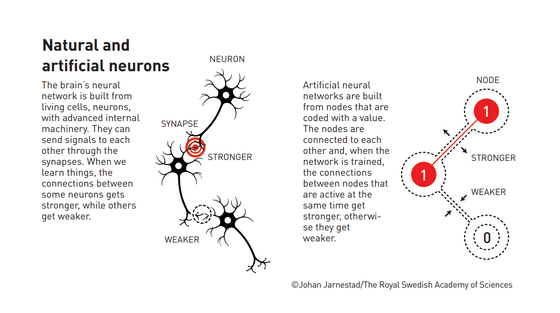

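When we talk about artificial intelligence (AI) today, we are often referring to machine learning using artificial neural networks, a technique originally inspired by the structure of the brain. In an artificial neural network, nodes stand in for neurons and take on different values, and they influence each other through connections that can be made stronger or weaker. The network is trained, for example, by strengthening the connections between nodes that have high values at the same time.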
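One standard way to formalize this kind of training is the Hebbian learning rule, sketched here under assumed notation: x_i and x_j are the values of nodes i and j, w_ij is the strength of the connection between them, and η is a small learning rate. None of these symbols come from the announcement.

```latex
\Delta w_{ij} = \eta \, x_i \, x_j
```

The update is largest when both nodes have high values at the same time, and it is zero whenever either node is inactive (value 0).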

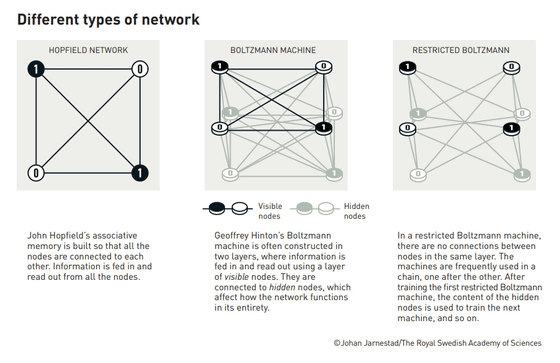

Professor Hopfield proposed a fully interconnected neural network, in which each node connects to every other node but not to itself, known as the 'Hopfield network.'

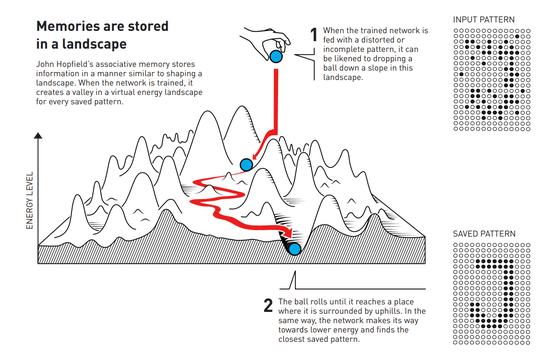

For example, suppose you want the network to store and recreate a black-and-white image, with each node representing one pixel that can be either black or white. The network remembers which nodes should be on and which should be off to represent a given image or character. During this learning process, the strengths of the connections between nodes are adjusted: nodes that are frequently on together become strongly connected, and nodes that are rarely on together become weakly connected.
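The storage step can be sketched in Python with the classic Hebbian outer-product rule. This is a minimal sketch under assumptions not stated in the article: black pixels are encoded as +1 and white pixels as -1, each image is flattened into a list, and the helper name `store_patterns` is hypothetical. With this encoding, pixels that agree across the stored images get positive weights, while pixels that disagree get negative (inhibitory) weights rather than merely weak ones.

```python
def store_patterns(patterns):
    """Build Hopfield weights from +1/-1 patterns with the Hebbian outer-product rule.

    Hypothetical helper for illustration: w[i][j] = (1/n) * sum over patterns of p[i] * p[j].
    """
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections, so the diagonal stays 0
                    w[i][j] += p[i] * p[j] / n
    return w


# Two tiny 4-pixel "images" (+1 = black, -1 = white, an assumed encoding).
images = [
    [1, 1, -1, -1],
    [1, 1, 1, -1],
]
weights = store_patterns(images)
for row in weights:
    print(row)
```

Pixels 0 and 1 are black in both images, so the weight between them is positive (0.5); pixels 0 and 3 always differ, so theirs is negative (-0.5). The weight matrix is symmetric and has zeros on the diagonal.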

When an incomplete pattern is fed into the network, the network gradually updates the states of its nodes, starting from the input pattern, until it settles on the memorized pattern that most closely matches the input. For example, if a slightly distorted letter 'a' is input, the network eventually outputs the complete 'a'. The Hopfield network thus became the basis of 'associative memory', which can reproduce complete patterns from incomplete information.
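The recall process can be sketched as follows. This is a minimal, self-contained sketch assuming the +1/-1 pixel encoding and Hebbian storage of a textbook Hopfield network; the function names, the two 8-pixel patterns, and the random update order are illustrative choices, not details from the announcement. Each node in turn looks at the weighted sum of the other nodes' states and switches to +1 if that sum is non-negative, otherwise to -1, until a full sweep changes nothing.

```python
import random


def store_patterns(patterns):
    """Hebbian storage: w[i][j] = (1/n) * sum of p[i] * p[j], with no self-connections."""
    n = len(patterns[0])
    return [[0.0 if i == j else sum(p[i] * p[j] for p in patterns) / n
             for j in range(n)] for i in range(n)]


def recall(weights, state, max_sweeps=10, seed=0):
    """Asynchronously update nodes until the state stops changing (a stable pattern)."""
    rng = random.Random(seed)
    s = list(state)
    n = len(s)
    for _ in range(max_sweeps):
        changed = False
        order = list(range(n))
        rng.shuffle(order)  # visit nodes in a random order each sweep
        for i in order:
            h = sum(weights[i][j] * s[j] for j in range(n))  # input from the other nodes
            new_value = 1 if h >= 0 else -1
            if new_value != s[i]:
                s[i] = new_value
                changed = True
        if not changed:  # no node changed: the network has settled
            break
    return s


# Two stored 8-pixel patterns (+1 = black, -1 = white); they are orthogonal.
pattern_a = [1, 1, 1, 1, -1, -1, -1, -1]
pattern_b = [1, -1, 1, -1, 1, -1, 1, -1]
w = store_patterns([pattern_a, pattern_b])

# A "distorted" copy of pattern_a with its first pixel flipped.
noisy = [-1] + pattern_a[1:]
restored = recall(w, noisy)
print(restored == pattern_a)  # True
```

Here the corrupted input settles back to `pattern_a`. This toy case is deliberately easy: when many patterns are stored relative to the number of nodes, or the input is heavily corrupted, a Hopfield network can settle on a wrong or spurious pattern instead.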

Building on the Hopfield network, Professor Hinton developed a network called the 'Boltzmann machine.'

Boltzmann machines operate in three main phases: 'learning,' 'generation,' and 'recognition.' In the 'learning' phase, the machine automatically learns the characteristics of the given data, adjusting the strengths of the connections between nodes so that they reflect the statistical properties of the data. In the 'generation' phase, the machine creates new data based on the learned patterns. In the 'recognition' phase, the machine identifies new data it has not seen before.
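The three phases can be illustrated with a restricted Boltzmann machine (RBM), a later simplification of the Boltzmann machine in which visible and hidden units connect only to each other, trained here with one step of contrastive divergence (CD-1). This is a swapped-in technique, not the original Boltzmann machine learning procedure, and the class name, method names, layer sizes, learning rate, and epoch count are assumptions chosen for a toy example. `train` corresponds to learning, `generate` to generation (repeated sampling of hidden and visible units), and `hidden_probs` produces hidden-unit features that a recognition step could use.

```python
import math
import random


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


class RBM:
    """Toy restricted Boltzmann machine with binary visible and hidden units."""

    def __init__(self, n_visible, n_hidden, seed=0):
        self.rng = random.Random(seed)
        # Small random weights between every visible unit i and hidden unit j.
        self.w = [[self.rng.gauss(0.0, 0.01) for _ in range(n_hidden)]
                  for _ in range(n_visible)]
        self.b = [0.0] * n_visible  # visible biases
        self.c = [0.0] * n_hidden   # hidden biases

    def hidden_probs(self, v):
        """P(h_j = 1 | v) for every hidden unit; usable as learned features."""
        return [sigmoid(self.c[j] + sum(v[i] * self.w[i][j] for i in range(len(v))))
                for j in range(len(self.c))]

    def visible_probs(self, h):
        """P(v_i = 1 | h) for every visible unit."""
        return [sigmoid(self.b[i] + sum(h[j] * self.w[i][j] for j in range(len(h))))
                for i in range(len(self.b))]

    def sample(self, probs):
        """Turn each unit on with its given probability (the stochastic step)."""
        return [1 if self.rng.random() < p else 0 for p in probs]

    def train(self, data, epochs=500, lr=0.1):
        """Learning: CD-1 nudges weights toward the data's pairwise statistics."""
        for _ in range(epochs):
            for v0 in data:
                ph0 = self.hidden_probs(v0)               # statistics driven by the data
                h0 = self.sample(ph0)
                v1 = self.sample(self.visible_probs(h0))  # one-step reconstruction
                ph1 = self.hidden_probs(v1)               # statistics driven by the model
                for i in range(len(self.b)):
                    for j in range(len(self.c)):
                        self.w[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
                    self.b[i] += lr * (v0[i] - v1[i])
                for j in range(len(self.c)):
                    self.c[j] += lr * (ph0[j] - ph1[j])

    def generate(self, steps=50):
        """Generation: alternate hidden/visible sampling from a random start."""
        v = self.sample([0.5] * len(self.b))
        for _ in range(steps):
            v = self.sample(self.visible_probs(self.sample(self.hidden_probs(v))))
        return v

    def reconstruction_error(self, data):
        """Mean squared error between data and its mean-field reconstruction."""
        total = 0.0
        for v in data:
            recon = self.visible_probs(self.hidden_probs(v))
            total += sum((x - r) ** 2 for x, r in zip(v, recon))
        return total / (len(data) * len(data[0]))


data = [[1, 1, 1, 0, 0, 0], [0, 0, 0, 1, 1, 1]]
rbm = RBM(n_visible=6, n_hidden=3, seed=0)
before = rbm.reconstruction_error(data)
rbm.train(data, epochs=500, lr=0.1)                   # learning
after = rbm.reconstruction_error(data)
print(f"reconstruction error: {before:.3f} -> {after:.3f}")
print("generated sample:", rbm.generate())           # generation
print("hidden features:", rbm.hidden_probs(data[0]))  # features for recognition
```

Training on two complementary 6-pixel patterns should lower the reconstruction error, and `generate` returns a binary 6-pixel sample drawn from what the model has learned. Because every phase draws random numbers, the exact outputs depend on the seed.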

A notable feature of Boltzmann machines is that they operate probabilistically: given the same input, they do not always produce the same output, because their behavior involves a degree of randomness. Boltzmann machines are regarded as one of the foundations of today's deep learning technology, which is used in image recognition, speech recognition, recommendation systems, and natural language processing.
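The probabilistic behavior can be sketched with a single stochastic binary unit. In a Boltzmann machine, the probability that a unit turns on is a logistic function of its total input divided by a 'temperature' parameter, so the same input can yield different outputs. The function names and the example input and temperature values below are illustrative assumptions.

```python
import math
import random


def p_on(net_input, temperature=1.0):
    """Probability that a binary stochastic unit turns on: logistic(net_input / T)."""
    x = net_input / temperature
    if x >= 0:  # numerically stable logistic function
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def stochastic_update(net_input, rng, temperature=1.0):
    """Sample the unit's new state (1 = on, 0 = off) instead of thresholding it."""
    return 1 if rng.random() < p_on(net_input, temperature) else 0


rng = random.Random(42)
# The same input, sampled ten times; the results can differ from call to call.
print([stochastic_update(1.0, rng) for _ in range(10)])
# Over many samples, the fraction of 1s approaches p_on(1.0), about 0.73.
samples = [stochastic_update(1.0, rng) for _ in range(10000)]
print(sum(samples) / len(samples))
```

Lowering the temperature pushes `p_on` toward 0 or 1, which makes the unit behave almost like the deterministic threshold unit of a Hopfield network; raising it makes the output closer to a coin flip.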

Professor Hinton worked at Google from 2013, where he was involved in AI development, but left the company in May 2023.

'Godfather of AI' regrets AI research and leaves Google - GIGAZINE

The Nobel Prize Committee has released an audio recording of Professor Hopfield receiving the news of the award from the Royal Swedish Academy of Sciences. Professor Hopfield received the call while he and his family were getting flu vaccinations and stopping for coffee on the way home. Professor Hopfield commented, 'Science that advances technology is done early, driven by curiosity, and produces technology that is very interesting, useful, and that we can rely on to continue to make things better.'

First Reactions | John Hopfield, Nobel Prize in Physics 2024 | Telephone interview - YouTube

Below is an audio recording of Professor Hinton receiving the news of the award. 'I had an MRI scheduled for today, but I'm going to have to cancel that,' he said. Later, Professor Hinton told a University of Toronto spokesperson, 'I never expected this to happen. I'm very surprised and honored to be selected.'

First Reactions | Geoffrey Hinton, Nobel Prize in Physics 2024 | Telephone interview - YouTube

The Nobel Prize award ceremony will be held in Stockholm, Sweden, on Tuesday, December 10, 2024. The prize money of 11 million Swedish kronor (approximately 158 million yen) will be split equally between Professor Hopfield and Professor Hinton.
