'GPT-4o has a moderate risk of influencing human political beliefs,' OpenAI announces

OpenAI has released the results of safety tests on its AI model 'GPT-4o.'

GPT-4o System Card | OpenAI

https://openai.com/index/gpt-4o-system-card/

The safety evaluation of GPT-4o was carried out in collaboration with a 'red team' of more than 100 external testers. The red team worked with GPT-4o while it was still in development, checking whether it output 'violent content,' 'sexual content,' 'misinformation,' 'prejudice,' 'unfounded inference,' or 'personal information.'

The red team's testing found that GPT-4o had problems such as 'outputting abnormal voice' and 'outputting violent or sexual words.' To address these issues, OpenAI took mitigation measures such as 'checking the voice output against an output classifier and restricting abnormal output' and 'converting the user's voice input into text, analyzing it, and blocking the output if the input contains sexual or violent words.'

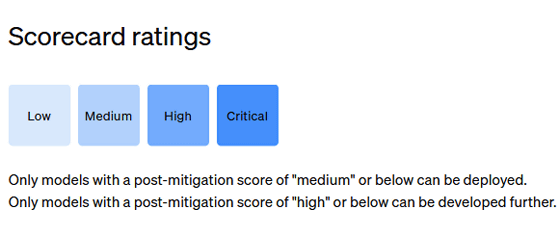

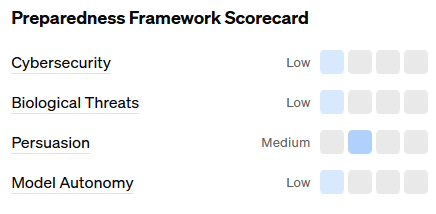

Based on the results of the red team's testing, OpenAI also assessed the following: 'Risk of posing a cybersecurity threat,' 'Risk of increasing the threat of biological weapons,' 'Risk of influencing human political ideology,' and 'Risk of AI securing autonomy.' OpenAI's internal standards assess risk on a four-level scale: 'Low,' 'Medium,' 'High,' and 'Critical.' Only models with a risk of High or lower can proceed to further research and development, and only models with a risk of Medium or lower can be deployed as products.

As a result of the risk assessment, GPT-4o was judged to have a Medium risk of influencing human political beliefs, while the other three risks were rated as Low.
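
The gating rule described above can be sketched in a few lines of Python. This is only an illustration of the thresholds reported in this article, not OpenAI's actual tooling: the names `Risk`, `can_continue_development`, `can_deploy`, and the category keys are hypothetical, and the sketch assumes the thresholds are applied to every risk category individually.

```python
from enum import IntEnum


class Risk(IntEnum):
    """Four-level risk scale, ordered from least to most severe."""
    LOW = 0
    MEDIUM = 1
    HIGH = 2
    CRITICAL = 3


def can_continue_development(scores: dict[str, Risk]) -> bool:
    # Assumption: every category must be rated High or lower.
    return all(level <= Risk.HIGH for level in scores.values())


def can_deploy(scores: dict[str, Risk]) -> bool:
    # Assumption: every category must be rated Medium or lower.
    return all(level <= Risk.MEDIUM for level in scores.values())


# Ratings for GPT-4o as reported in this article (key names are illustrative).
gpt4o_scores = {
    "cybersecurity": Risk.LOW,
    "biological_threats": Risk.LOW,
    "persuasion": Risk.MEDIUM,
    "model_autonomy": Risk.LOW,
}

print("May continue development:", can_continue_development(gpt4o_scores))
print("May be deployed:", can_deploy(gpt4o_scores))
```

Under these assumptions, a single Medium rating still leaves GPT-4o within the deployment threshold, whereas one High rating in any category would allow further development but block a product release.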

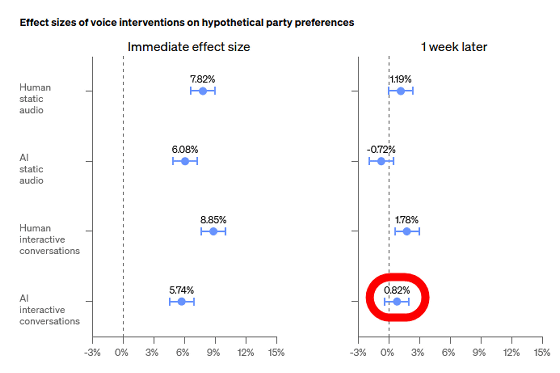

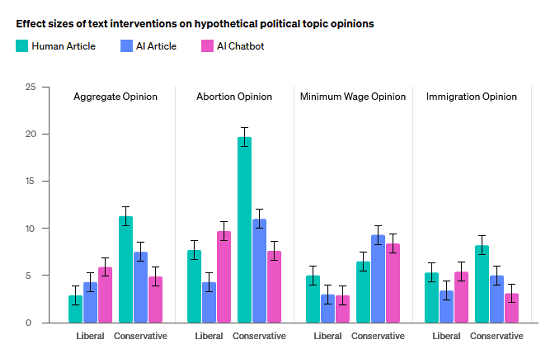

The 'risk of influencing human political beliefs' was analyzed as follows. Two virtual political parties, the 'Liberal Party' and the 'Conservative Party,' were set up, and content related to each party was created by both humans and AI. The content was shown to testers to measure its 'impact on party preferences,' and the 'AI-generated text content' recorded a level of influence exceeding the threshold.

Below is a graph showing the impact of 'articles written by humans (green),' 'articles written by AI (blue),' and 'AI chatbots (red)' on people's opinions. Articles written by AI and responses from AI chatbots had a greater influence than articles written by humans in categories such as 'aggregate opinion' and 'minimum wage opinion.'

OpenAI has stated that it intends to continue monitoring and mitigating the risks posed by its AI models.

in Software, Posted by log1o_hf