A philosophy professor answers the question, 'Can AI predict our personal futures and warn us about them?'

Some have proposed using artificial intelligence (AI) and computers capable of advanced calculation to predict the future and head off undesirable events before they happen. Thomas Hofweber, a professor of philosophy at the University of North Carolina at Chapel Hill who studies how AI and language models work, discusses whether it is appropriate, and ethical, to use AI to predict an individual's future.

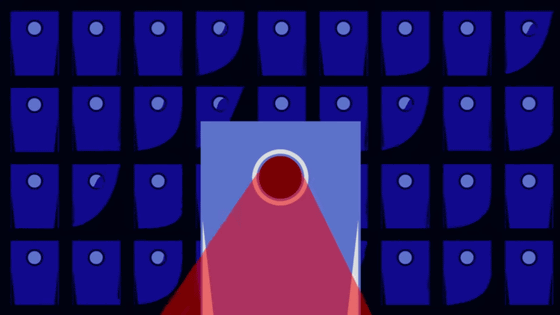

Hofweber begins with the example of a couple who are considering marriage and wondering, 'Will this marriage work?' Statistics can give only a population-level answer, such as that 10% of married couples divorce within five years.

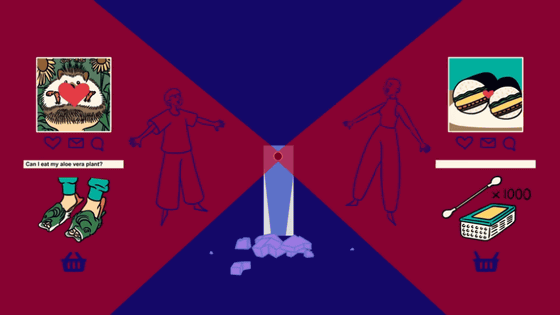

AI, by contrast, is thought to be capable of more personalized predictions. It could be trained on data about a large number of couples: what they search for online, how they shop, and what they post on social media. Given the same kinds of information about a particular couple, it should then be able to give a more detailed answer to the question, 'Will this couple's marriage go well?'

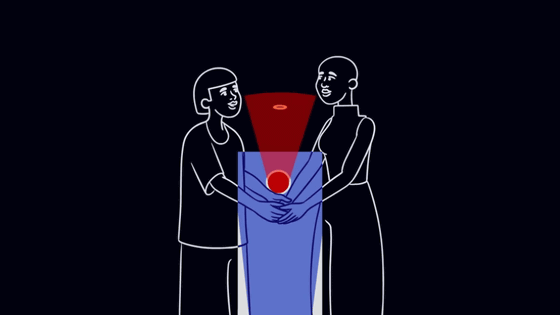

Even if the AI predicts that 'there is a 95% chance that this couple will divorce within five years of marriage,' the prediction does not dictate what they do. The couple has three options. The first is to marry anyway, hoping the prediction is wrong. The second is to break up now, since the relationship is likely to end. The third, since the prediction concerns divorce after marriage, is to stay together without marrying.

The problem, Hofweber points out, is that 'we don't understand the reasons.' If you were told, 'These are the reasons you are likely to divorce,' you could choose with confidence: marry and take care to avoid those pitfalls, or decide that those reasons are enough not to marry. An AI's prediction, however, comes without such reasons, and this opacity undermines any choice you try to make on the basis of it.

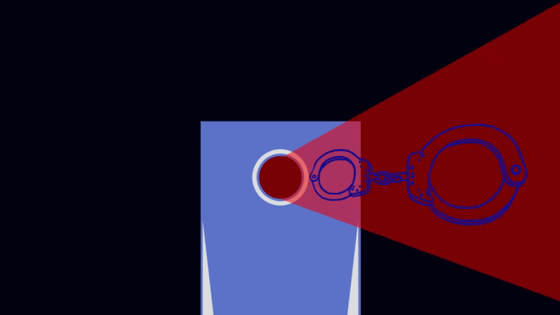

The same issue arises when a bank uses AI to predict whether a customer will repay a loan, or when a prisoner is denied parole because an AI judges that they are likely to return to prison. Even if a predictive model is potentially useful, it seems intuitively problematic to follow its decisions without understanding how the system works.

Transparency and accountability are among the things traded away when important decisions are entrusted to AI. If you feel comfortable handing your decisions to an AI model, it is probably because you care only about how accurate its predictions are. If you set aside the question of why a prediction is accurate, then you can indeed say that AI can predict the future.

For predictions about personal matters in particular, however, Hofweber says we should prioritize reliability over accuracy. AI can present possible futures, but it is up to each individual to make their own choices and to be prepared for things to go wrong.

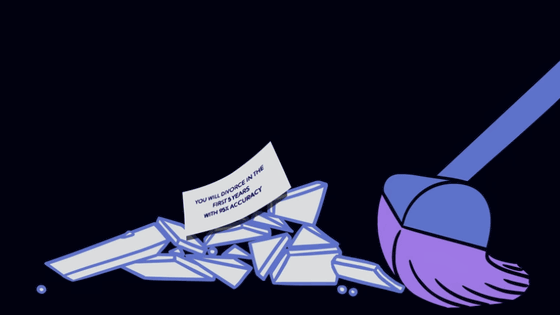

Moreover, a 95% prediction is not perfect: it will be wrong about one time in 20. The deeper problem is that as more couples use such a prediction service, more of them may divorce simply because 'the AI said we would,' which raises the service's apparent accuracy without anyone examining the data behind its predictions. Hofweber describes AI predictions as 'potentially becoming more accurate through artificial or self-fulfilling properties.'
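The self-fulfilling effect can be made concrete with a toy calculation. The sketch below is a hypothetical model, not Hofweber's own: it assumes the AI's genuine hit rate on the couples it flags is 95%, and that some fraction of the flagged couples who would otherwise have stayed together divorce because they believe the prediction. That fraction, called `influence` here, is an invented parameter for illustration, as is the function name `observed_hit_rate`.

```python
def observed_hit_rate(base_hit_rate: float, influence: float) -> float:
    """Apparent accuracy of a divorce prediction once some couples act on it.

    Hypothetical model for illustration only.
    base_hit_rate: fraction of flagged couples who would divorce regardless.
    influence: fraction of the remaining flagged couples who divorce only
        because they were told they would.
    """
    if not (0.0 <= base_hit_rate <= 1.0 and 0.0 <= influence <= 1.0):
        raise ValueError("rates must lie in [0, 1]")
    # Flagged couples the model got wrong on its own merits.
    misses = 1.0 - base_hit_rate
    # Belief in the prediction turns some of those misses into apparent hits.
    return base_hit_rate + misses * influence


if __name__ == "__main__":
    base = 0.95  # assumed: wrong about 1 time in 20 with no influence
    for influence in (0.0, 0.2, 0.5, 1.0):
        rate = observed_hit_rate(base, influence)
        print(f"influence={influence:.0%}  observed accuracy={rate:.1%}")
```

In this sketch the measured accuracy climbs from 95% toward 100% as `influence` grows, even though the underlying model and its data never change; the entire gain comes from couples acting on the prediction.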
