Hacker targets an employee of password manager LastPass using an AI-cloned voice of the CEO

With the development of AI technology,

Attempted Audio Deepfake Call Targets LastPass Employee

https://blog.lastpass.com/posts/2024/04/attempted-audio-deepfake-call-targets-lastpass-employee

Hackers Voice Cloned the CEO of LastPass for Attack

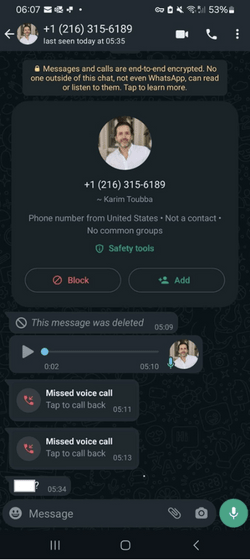

In April 2024, a LastPass employee received calls, text messages, and voice messages via WhatsApp from an individual impersonating CEO Karim Toubba.

The employee concluded that the contacts were fraudulent because they arrived outside the company's normal business communication channels and pressed a sense of urgency. He did not respond to any of the messages and instead reported them to the company's security team.

As a result of this decision, no damage was done to LastPass, and the company said, 'The employee's decision enabled us to take steps to mitigate the threat and raise awareness of similar attacks both internally and externally.'

LastPass added, 'We wanted to share this incident to raise awareness that deepfakes are not only being used by nation-state threat actors, but can also be used to commit fraud by impersonating business executives. This case teaches us the importance of reviewing any suspicious contact from an individual claiming to be the CEO through proper internal communication channels.'

In Hong Kong, there was in fact an incident in which a man transferred 200 million Hong Kong dollars (approximately 3.9 billion yen) after following instructions given by his superior in a video conference, where both the video and the audio turned out to be deepfakes.

Deepfake: A case occurred in which 3.8 billion yen was transferred to a scammer following instructions from a boss. Is there a way to distinguish deepfakes? - GIGAZINE
