A study of 57 million comments found that harmful comments on Wikipedia reduce how often its editors are active

Each entry and user page on Wikipedia has

Toxic comments are associated with reduced activity of volunteer editors on Wikipedia | PNAS Nexus | Oxford Academic

https://academic.oup.com/pnasnexus/article/2/12/pgad385/7457939

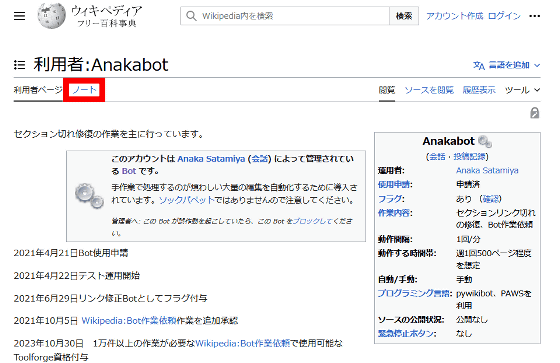

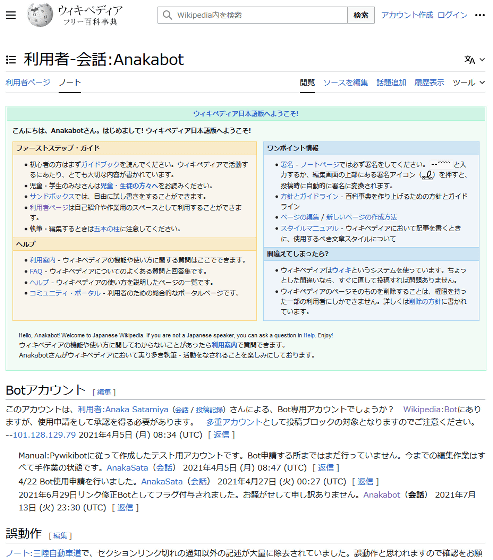

Wikipedia users can create their own user pages, and each user's talk page (the 'Notes' page) can be opened by clicking 'Notes' on the user page.

For example,

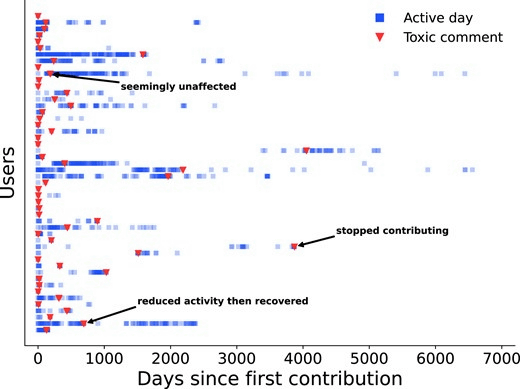

Below is a diagram showing the activity of 50 randomly selected Wikipedia editors who received harmful comments. The horizontal axis shows the number of days since each editor's first activity, the blue squares show activity, and the red triangles show when harmful comments were received. The diagram shows that some editors who receive harmful comments become less active or stop editing altogether.

To clarify the impact of harmful comments on Wikipedia editors, the research team collected 57 million comments posted on the talk pages of 8.5 million editors across the English, German, French, Spanish, Italian, and Russian editions of Wikipedia. Furthermore, the team determined whether each comment was harmful using the

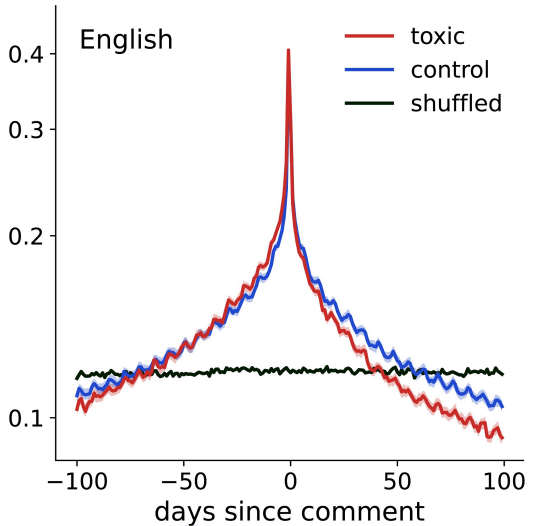

In the graph below, for the English version of Wikipedia, the horizontal axis shows the number of days since the comment was received and the vertical axis shows the frequency of activity. The red line shows the change in activity frequency when a harmful comment is received, and the blue line shows the change when a non-harmful comment is received. Editor activity peaks on the day a comment is received, whether or not it is harmful, and declines afterward, but the decline is more pronounced after a harmful comment.

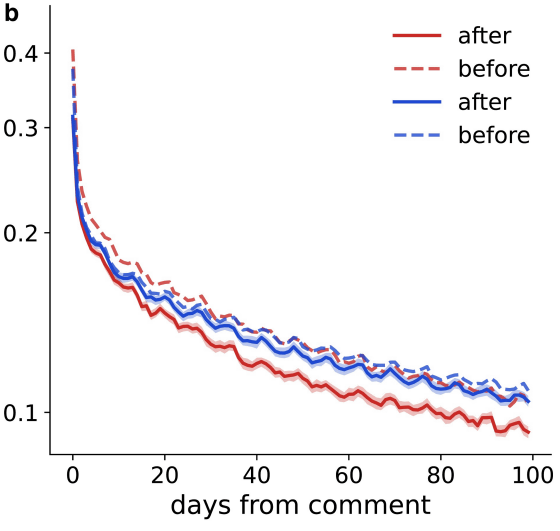

Below is the same graph with the horizontal axis folded so that it shows the absolute number of days from the comment. The solid line indicates the frequency of activity after the comment was received, and the dashed line indicates the frequency of activity before it. For non-harmful comments (blue), the rise in activity before the comment and the fall after it are almost the same, but for harmful comments (red), activity falls more sharply after the comment than it rose before it. In other words, on the English version of Wikipedia, harmful comments greatly reduce the frequency of editor activity.
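The before-and-after comparison described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the research team's code: the function names `activity_by_relative_day` and `before_after_by_distance` are hypothetical, and the edit days are made-up sample data. It counts an editor's activity per day relative to the day a comment was received, then pairs each day before the comment with the day at the same distance after it.

```python
from collections import Counter


def activity_by_relative_day(edit_days, comment_day, window):
    """Count edits per day relative to the comment day (day 0).

    Only offsets from -window to +window are kept; days with no
    edits are reported as 0.
    """
    counts = Counter(day - comment_day for day in edit_days)
    return {offset: counts.get(offset, 0) for offset in range(-window, window + 1)}


def before_after_by_distance(relative_counts, window):
    """Fold the timeline on day 0.

    For each distance k, return (activity k days before the comment,
    activity k days after the comment).
    """
    return {k: (relative_counts[-k], relative_counts[k]) for k in range(1, window + 1)}


# Hypothetical sample: the days on which one editor made an edit,
# with a comment received on day 5.
edit_days = [1, 2, 2, 3, 4, 5, 5, 5, 6, 9]
comment_day = 5

relative = activity_by_relative_day(edit_days, comment_day, window=3)
folded = before_after_by_distance(relative, window=3)

for distance, (before, after) in folded.items():
    print(f"{distance} day(s) from comment: before={before}, after={after}")
```

In this made-up sample, activity at each distance is equal or lower after the comment than before it, which is the kind of asymmetry the solid and dashed lines are meant to expose. A real analysis would average such counts over many editors and comments.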

The results were similar for the German, French, Spanish, Italian, and Russian versions, confirming that editors who received harmful comments became less active. Based on the analysis, the research team concluded that "suppressing harmful comments is important for the continued success of collaborative platforms such as Wikipedia."


in Web Service, Science, Posted by log1o_hf