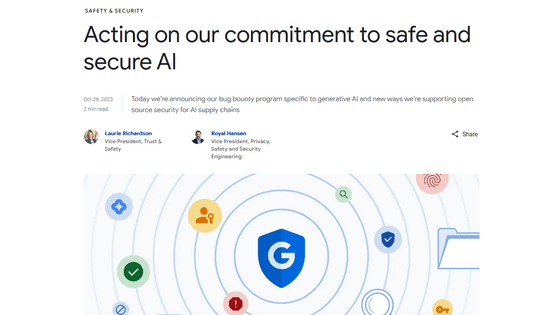

Google launches program to pay rewards to users who discover vulnerabilities in generative AI

Since 2011, Google has been operating

How Google is expanding its commitment to secure AI

https://blog.google/technology/safety-security/google-ai-security-expansion/

Google expands its bug bounty program to target generative AI attacks

Google adds generative AI threats to its bug bounty program | TechCrunch

https://techcrunch.com/2023/10/26/google-generative-ai-threats-bug-bounty/

Google announces bug bounty program for AI services, just like Microsoft - MSPoweruser

https://mspoweruser.com/google-bounty-program-for-ai/

Generative AI can answer questions, write code, and produce illustrations on request, but depending on how it is used, it can also be abused to create fake news articles and social media posts, or to carry out attacks that leak personal information.

Until now, Google has run the Vulnerability Rewards Program (VRP), which pays rewards to security researchers who discover vulnerabilities in Google products and open source software. Google has now expanded the scope of the VRP and announced that vulnerabilities and attacks specific to AI will also be eligible for reporting and reward payments.

With the expanded scope of the VRP, security researchers and hackers who discover security flaws in generative AI and report them to Google will be paid up to $31,337 (approximately 4.7 million yen), depending on the severity of the vulnerability. According to TechCrunch, the full $31,337 is reserved for those who find 'command injection attacks and deserialization bugs in highly sensitive applications such as Google Search and Google Play.'

On the other hand, for lower-priority vulnerabilities, the maximum reward paid by Google is limited to $5,000 (about 750,000 yen).

Google told TechCrunch: 'Expanding the scope of the VRP will encourage more research into AI safety and security and may uncover potential issues with generative AI. This should lead to the development of safer and more secure generative AI products.'

In addition to soliciting reports from outside security researchers, Google has formed its own white-hat hacker team, the Google Red Team.

The Google Red Team has previously warned about problems with large language models, such as 'prompt injection,' in which hostile prompts cause a model to generate harmful text or leak sensitive information. It has also warned about 'training data extraction attacks,' which reconstruct the data a large language model was trained on and extract personal information and passwords from it.

'AI has different security issues than traditional technologies, such as malicious model manipulation and inappropriate bias, and new guidance is needed to address these issues,' Google said in a statement. 'By expanding the scope of the VRP, we are making it possible to broadly discover and verify vulnerabilities and bugs related to AI supply chain security.'
