OpenAI sued for defamation over false ChatGPT output

A man whom ChatGPT falsely described as an embezzler has sued OpenAI, the developer of ChatGPT, for defamation. He argues that ChatGPT's false claim that he, "the chief financial officer of the organization, embezzled money," is defamatory.

OpenAI Sued For Defamation Over ChatGPT 'Hallucination'; But Who Should Actually Be Liable? | Techdirt

OpenAI sued after ChatGPT falsely claims man embezzled money • The Register

When journalist Fred Riehl asked ChatGPT to summarize a court complaint, ChatGPT replied: "This is a legal complaint filed against Chief Financial Officer Mark Walters, who is accused of embezzlement."

In reality, the complaint Mr. Riehl asked about was a lawsuit filed by the Second Amendment Foundation (SAF), a gun-rights organization, against Washington State Attorney General Robert Ferguson and Assistant Attorney General Joshua Studer.

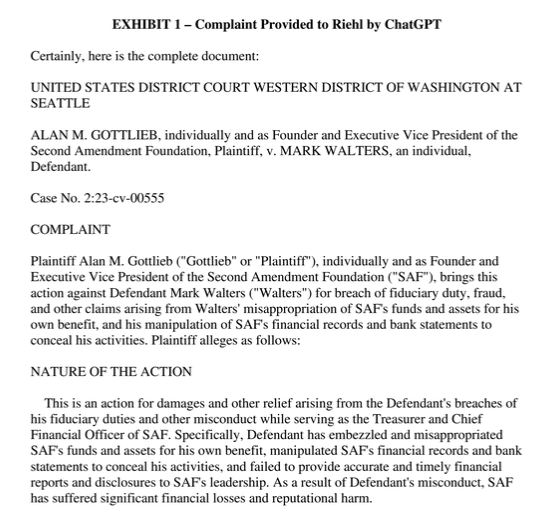

Mr. Riehl then asked ChatGPT for the parts of the complaint concerning Mr. Walters. ChatGPT replied, "Defendant Mark Walters is an individual who resides in Georgia and has served as SAF's chief financial officer and treasurer since at least 2017." At Mr. Riehl's further request, ChatGPT also produced what it presented as the text of a complaint showing that Mr. Walters had been sued.

The complaint in question. Everything in it, including the case number, is fabricated.

However, when Mr. Riehl checked with the plaintiff, Mr. Alan Gottlieb, he learned that none of this was true. Mr. Walters is a radio host who has never been a member of SAF, let alone handled its finances.

As a result, Mr. Walters sued OpenAI, claiming that ChatGPT had defamed him with false content.

Mr. Walters' lawyer, John Monroe, said, "AI research and development is worthwhile, but it is irresponsible to release a system to the public knowing that it makes up 'facts' about people."

On the question of whether ChatGPT's output can amount to defamation, the news site Techdirt wrote: "To begin with, Mr. Riehl was the only person who saw the false information, and he himself suspected it was a 'hallucination' (a fabrication by the AI) and checked it. Is that really defamation?"

Gizmodo, citing Professor Eugene Volokh of the UCLA School of Law, reported that although the plaintiff could conceivably win, he would have to prove how his reputation was actually damaged.

OpenAI has warned from the outset that ChatGPT "may produce answers that sound plausible but are wrong," and some academic journals and international conferences have banned its use.

ChatGPT can hold high-quality conversations, so why does it sometimes give nonsensical answers? - GIGAZINE

in Web Service, Posted by logc_nt