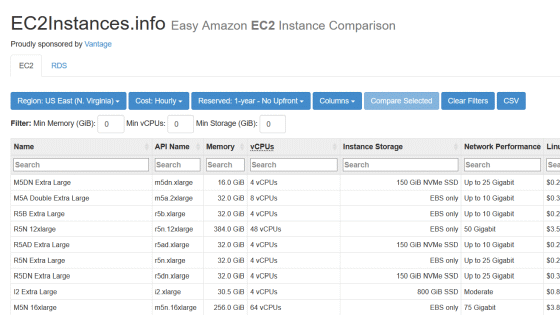

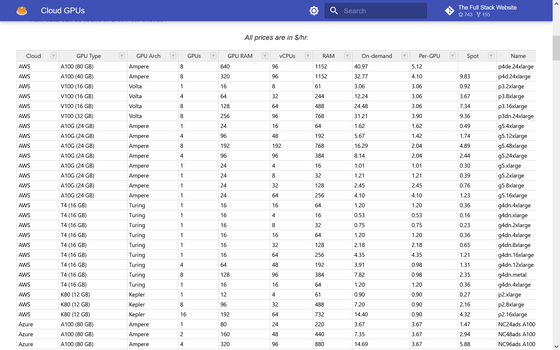

A list showing how many dollars per hour the cloud GPUs offered by AWS, Azure, Cudo, and others cost, and how each one is configured

GPUs are widely used to train and run neural networks, and demand for GPUs in cloud services is growing as AI spreads. The AI-related news and community site The Full Stack has compiled a list of cloud GPU offerings with their hourly prices and configurations.

Cloud GPUs - The Full Stack

https://fullstackdeeplearning.com/cloud-gpus/

The list is split into two tables: cloud servers and serverless. The services covered include Amazon Web Services (AWS), Microsoft Azure, Cudo Compute, Google Cloud Platform (GCP), AWS Lambda, and others. Some services, such as Hugging Face, are not included.

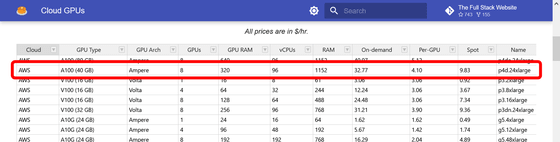

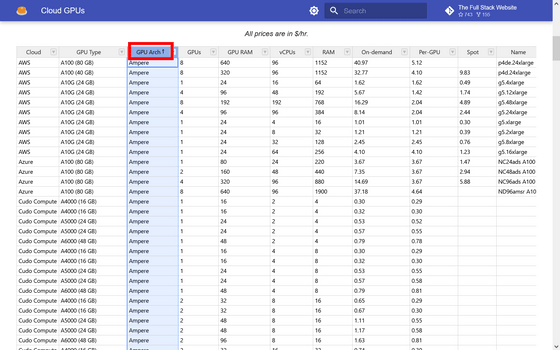

This is what the cloud server table looks like. The leftmost column is the service name, followed to the right by the GPU type and then the architecture. All prices are in US dollars per hour.

For example, AWS's p4d.24xlarge uses NVIDIA's Ampere architecture with 8 A100 (40GB) GPUs, 320GiB of GPU RAM, 96 vCPUs, and 1152GiB of RAM. The on-demand price is $32.77 per hour (about 4,578.87 yen), the per-GPU price is $4.10 per hour (about 572.81 yen), and the spot instance price is $9.83 per hour (about 1,373.34 yen).
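The per-GPU figure appears to be the instance's on-demand price divided by its GPU count ($32.77 / 8 ≈ $4.10). The short Python sketch below reproduces that arithmetic for p4d.24xlarge and also works out how much the spot price saves. The `GpuInstance` class and `usd_to_yen` helper are hypothetical names made up for illustration, and the exchange rate is back-calculated from the article's own yen figures, not taken from any official source.

```python
from dataclasses import dataclass

# Assumption: yen-per-dollar rate back-calculated from the article's figures
# (4,578.87 yen for $32.77). Real exchange rates change daily.
YEN_PER_USD = 4578.87 / 32.77


@dataclass
class GpuInstance:
    """Hypothetical container for one row of the cloud server table."""
    name: str
    num_gpus: int
    on_demand_usd_per_hour: float
    spot_usd_per_hour: float

    def per_gpu_usd_per_hour(self) -> float:
        # Per-GPU price: whole-instance on-demand price split across its GPUs.
        return self.on_demand_usd_per_hour / self.num_gpus

    def spot_discount(self) -> float:
        # Fraction of the on-demand price saved by using a spot instance.
        return 1 - self.spot_usd_per_hour / self.on_demand_usd_per_hour


def usd_to_yen(usd: float, rate: float = YEN_PER_USD) -> float:
    # Convert a dollar amount to yen at the assumed rate.
    return usd * rate


# Figures taken from the p4d.24xlarge example above.
p4d = GpuInstance("p4d.24xlarge", num_gpus=8,
                  on_demand_usd_per_hour=32.77, spot_usd_per_hour=9.83)

print(f"per-GPU on-demand: ${p4d.per_gpu_usd_per_hour():.2f}/h")
print(f"on-demand in yen: about {usd_to_yen(p4d.on_demand_usd_per_hour):,.0f} yen/h")
print(f"spot discount: {p4d.spot_discount():.0%}")
```

Under these assumptions, the spot price of p4d.24xlarge works out to roughly 70% cheaper than on-demand, though spot capacity can be reclaimed by the provider, so it suits interruptible jobs.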

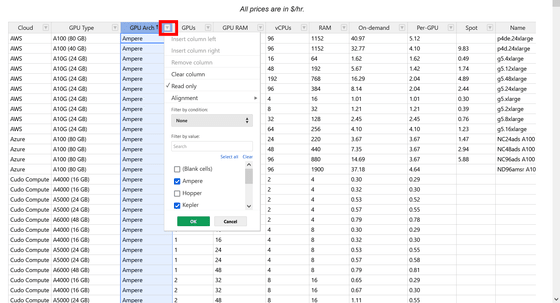

You can sort the table by clicking a column heading.

Click the downward-pointing triangle to filter.

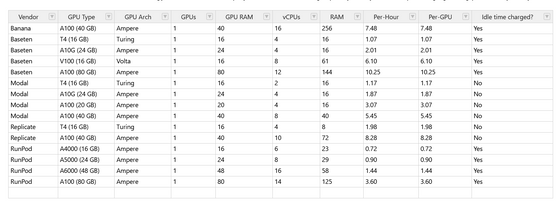

The table following the cloud server table covers serverless offerings. Here, serverless is defined as 'no server management, elastic scaling, high availability, and no idle capacity.'

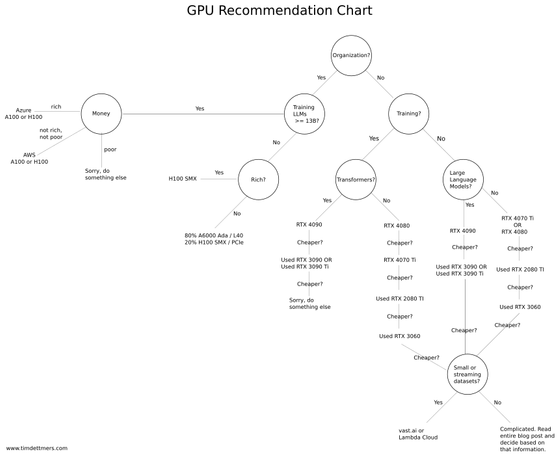

Next is a flow chart to help you choose a GPU. You work through it by answering questions such as 'Organization?' and 'Do you want to train a large language model (LLM)?' According to this chart, if you want to train an LLM with 13B or more parameters, Azure or AWS is recommended.

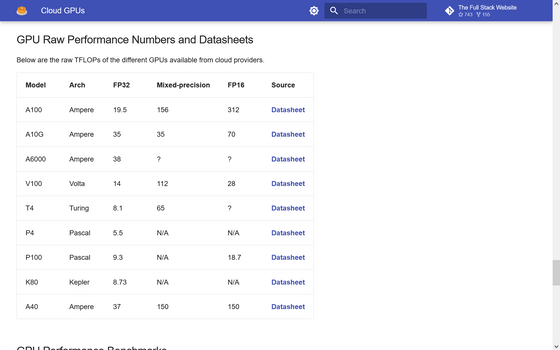

The page also links to the datasheet for each GPU model.

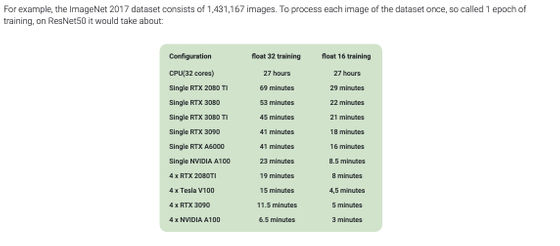

Next are basic GPU benchmarks for common deep learning tasks.

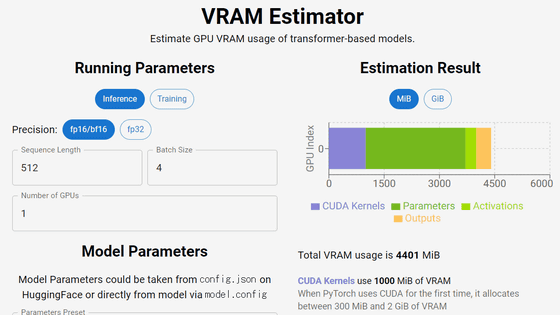

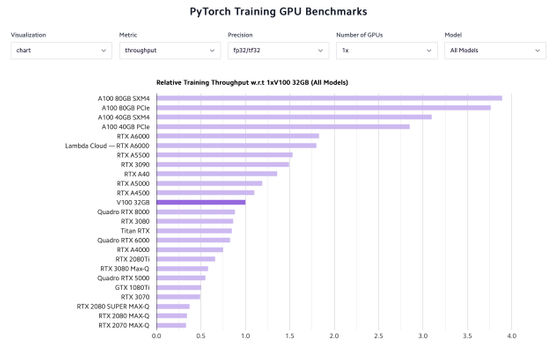

Finally, the PyTorch

in Web Service, Posted by log1p_kr