A cybersecurity company points out that a large number of workers have "entered confidential company data into ChatGPT without permission," raising security concerns

Many cases have been detected in which employees entered highly confidential business data held by their companies, as well as information that should be protected for privacy reasons, into ChatGPT.

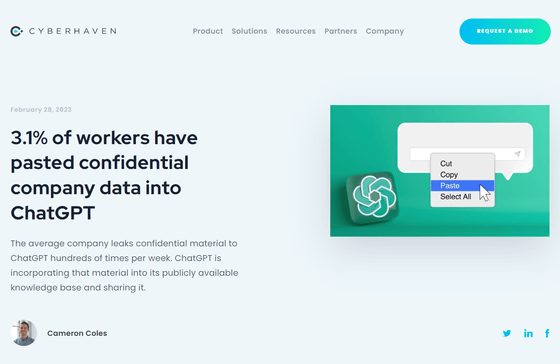

3.1% of workers have pasted confidential company data into ChatGPT - Cyberhaven

https://www.cyberhaven.com/blog/4-2-of-workers-have-pasted-company-data-into-chatgpt/

Employees Are Feeding Sensitive Business Data to ChatGPT

https://www.darkreading.com/risk/employees-feeding-sensitive-business-data-chatgpt-raising-security-fears

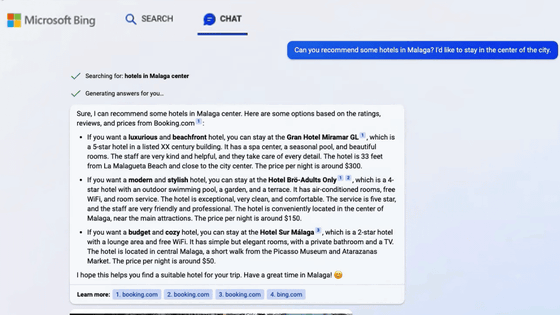

Since its release, people around the world have used the chat AI ChatGPT to write essays and compose poems. Those are not its only uses, however: Cyberhaven points out that ChatGPT is making inroads into the business world as well.

According to Cyberhaven's product data, as of March 21, 2023, 8.2% of employees at its customer companies had used ChatGPT at work, and 6.5% had entered company data into it.

Some knowledge workers say that AI tools like ChatGPT can make them up to 10 times more productive, but companies such as JP Morgan and Verizon, worried about leaks of sensitive data, have restricted employee access to ChatGPT.

OpenAI uses the data that users enter into ChatGPT to train its AI models. As a result, security concerns are growing over users entering material such as the source code of software under development or patients' medical records into ChatGPT. In fact, Amazon lawyers have

Cyberhaven gives the following examples of what can happen when employees enter data into ChatGPT:

・An executive copies part of a document outlining the company's business strategy for 2023, pastes it into ChatGPT, and asks it to create a PowerPoint presentation. If another user later asks, "What are this company's strategic priorities this year?", ChatGPT may answer based on that strategy document.

・A doctor enters details such as a patient's name and medical condition and asks ChatGPT to draft a letter to the patient's insurance company. Later, ChatGPT may answer another user based on the information the doctor entered.

Also, in March 2023, a bug in ChatGPT made it possible to see other users' chat histories. Cyberhaven points out that when a bug like this occurs, confidential information that users have entered can leak in unintended ways.

ChatGPT has a bug that other people's chat history can be seen, ChatGPT is temporarily down to fix the bug & chat history remains unavailable - GIGAZINE

The security software that companies have traditionally used to protect their data was not designed with ChatGPT in mind and cannot prevent such leaks. JP Morgan has banned employees from using ChatGPT, but it was reported that the company was initially unable to determine how many of its employees were using it.

Cyberhaven cites the following reasons why it is so difficult for security software to protect data sent to ChatGPT:

1: Copy and paste from files or apps

When employees enter company data into ChatGPT, they typically copy and paste the content into a web browser rather than uploading files. Many security products are designed to block the upload of files tagged as confidential, but once the content has been copied out of the file, they can no longer track it.

2: Sensitive data does not contain recognizable patterns

Corporate data sent to ChatGPT often lacks the recognizable patterns that security tools look for, such as credit card numbers or Social Security numbers. Because existing security tools cannot understand context, they cannot tell the difference between a user typing a cafe menu and a user typing the company's acquisition plans.
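To illustrate why pattern matching falls short, here is a minimal sketch of the kind of rule a traditional data-loss-prevention check might apply. It is a hypothetical, simplified example, not Cyberhaven's method or any specific product's logic: it flags text containing an SSN-shaped string or a digit run that passes the Luhn checksum used by payment card numbers. The function names are illustrative only.

```python
import re

# Hypothetical, simplified DLP-style rules (illustrative only, not a real product's rules).
# US Social Security number in the common NNN-NN-NNNN form.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    # Walk from the rightmost digit and double every second digit.
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_sensitive_patterns(text: str) -> list[str]:
    """Return labels for pattern-based matches found in the text."""
    findings = []
    if SSN_PATTERN.search(text):
        findings.append("ssn")
    for match in CARD_CANDIDATE.finditer(text):
        # Strip separators before running the checksum.
        digits = re.sub(r"[ -]", "", match.group())
        if luhn_valid(digits):
            findings.append("credit_card")
            break
    return findings


if __name__ == "__main__":
    samples = [
        "Card: 4111 1111 1111 1111",  # a well-known test card number
        "We plan to acquire Example Corp in Q3 for $40M.",  # sensitive, but no pattern
        "Today's cafe menu: latte, scone, soup",  # harmless
    ]
    for s in samples:
        print(find_sensitive_patterns(s), "<-", s)
```

In this sketch the card number is flagged, while the acquisition plan and the cafe menu both come back empty: a purely pattern-based rule has no way to see that one of them is confidential.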

Cyberhaven analyzed ChatGPT usage among approximately 1.6 million employees at client companies that use its products. It found that 8.2% of these knowledge workers had used ChatGPT at work at least once, and that 3.1% had entered confidential data into it. Although more and more companies are blocking access to ChatGPT entirely, usage is growing exponentially.

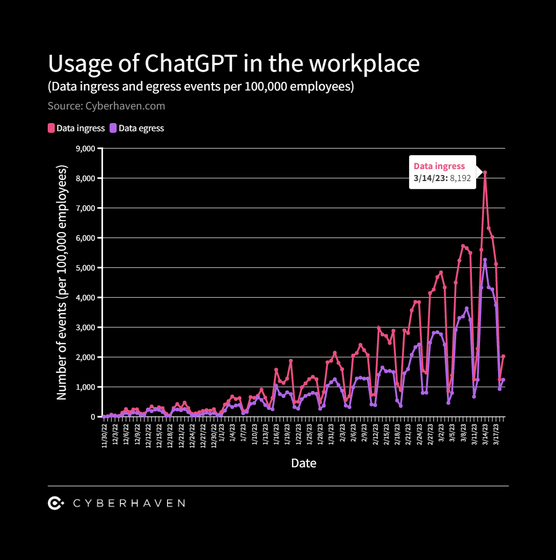

The graph below shows the number of times ChatGPT use in the workplace was detected by Cyberhaven's products, per 100,000 employees. The data covers November 30, 2022 to March 17, 2023, and shows that usage is clearly increasing despite expanding restrictions on ChatGPT. The red line represents "the number of times data was entered into ChatGPT," and the purple line represents "the number of times data output by ChatGPT was used."

According to Cyberhaven, the ratio of "entering data into ChatGPT" to "using data output by ChatGPT" is roughly 1:2. Only about 11% of the data employees enter into ChatGPT is confidential company data, but given the dramatic increase in ChatGPT usage, Cyberhaven points out that the amount of sensitive data flowing into ChatGPT is "pretty huge."

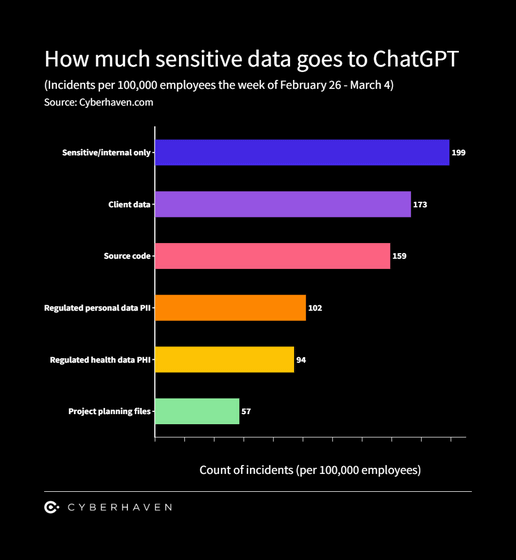

In the week from February 26 to March 4, 2023, per 100,000 employees at Cyberhaven's customer companies, confidential data was entered into ChatGPT 199 times, client data 173 times, source code 159 times, personal information 102 times, health-related data 94 times, and corporate project plans 57 times.

Howard Ting, CEO of Cyberhaven, points out that the more employees use AI-based services like ChatGPT as productivity tools, the greater the risk that private information will be leaked. "There has been a shift in data storage standards from

Karla Grossenbacher of the law firm Seyfarth Shaw also noted in a Bloomberg column, "As more software companies connect their applications to ChatGPT, LLMs may collect far more information than users or their companies realize, exposing them to legal risk."


in Software, Web Service, Security, Posted by logu_ii