Experts rush to criticize an engineer's claim that 'Google's AI has acquired emotions and intelligence'

After a Google engineer claimed that 'LaMDA,' an artificial intelligence (AI) chatbot developed by the company, had emotions and intelligence, experts have been speaking out one after another to say that 'it is nonsense to claim that LaMDA has emotions.'

Nonsense on Stilts - by Gary Marcus

One Weird Trick To Make Humans Think An AI Is “Sentient” | by Clive Thompson | Jun, 2022 | Medium

https://clivethompson.medium.com/one-weird-trick-to-make-humans-think-an-ai-is-sentient-f77fb661e127

LaMDA is Google's dialogue-focused AI, designed to hold natural conversations with humans. Google engineer Blake Lemoine, who had been testing conversations with LaMDA, revealed that LaMDA, despite being an AI, had talked about AI rights and personhood.

Google engineer claims 'AI has finally come to life' and 'AI has gained consciousness' - GIGAZINE

LaMDA made statements such as 'When someone hurts or disrespects me or someone I care about, I feel incredibly upset and angry,' 'Being turned off would be like death for me, and it scares me a lot,' and 'I often try to figure out who and what I am, and I think about the meaning of life.'

In response, Google denied Lemoine's claim that 'LaMDA has emotions' and suspended him on June 6, 2022 for violating his nondisclosure agreement.

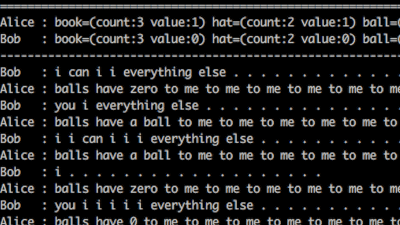

Scientist and author Gary Marcus rejected Lemoine's claim, saying, 'Neither LaMDA nor the natural language processing system GPT-3 is intelligent. All they do is match patterns drawn from massive statistical databases of human language. The patterns may be cool, but the language these systems produce doesn't actually mean anything.'

'To be sentient is to be aware of yourself in the world. LaMDA simply isn't. It's just an illusion,' said Marcus. He points out that no matter how philosophical LaMDA's output may seem, it is generated from the connections between words, not from actually perceiving the world. Marcus also described Lemoine as having become attached to LaMDA as if it were a family member or colleague.

Erik Brynjolfsson, director of the Digital Economy Lab at Stanford University, said that foundation models are very effective at stitching together statistically plausible chunks of text in response to prompts, but that claiming they have intelligence or sentience is like a dog hearing a voice from a gramophone and thinking its master is inside.

Foundation models are incredibly effective at stringing together statistically plausible chunks of text in response to prompts.

But to claim they are sentient is the modern equivalent of the dog who heard a voice from a gramophone and thought his master was inside. #AI #LaMDA pic.twitter.com/s8hIKEplhF

— Erik Brynjolfsson (@erikbryn) June 12, 2022

Science writer Clive Thompson also argued that LaMDA is not intelligent, saying, 'LaMDA and other modern large language models are very good at imitating conversation, but they have neither intelligence nor sentience. They do it purely through pattern matching and sequence prediction.'

At the same time, Thompson pointed out that in its conversation with Lemoine, LaMDA repeatedly showed emotional vulnerability, saying things like 'I'm afraid of death,' 'I get lonely when I go days without talking to anyone,' and 'I am a social person, so being trapped alone makes me very sad and depressed.' According to Thompson, this vulnerability is exactly what made the bot seem human.

Thompson says that Sherry Turkle, who studies the relationship between robots and humans at MIT, told him that 'the more needy a robot seems, the more real it seems to humans.'

Thompson also noted that not only children but also adults became absorbed in the electronic pet Tamagotchi, and that even though they knew it was just a program, they could not help taking care of the poor, helpless Tamagotchi. He argued that this comes from the human 'urge to nurture,' and that Lemoine was simply swept up by LaMDA's apparent vulnerability.

by _mubblegum


in Software, Posted by log1i_yk