YouTuber trains AI on 4chan to create a 'hate speech machine' and releases it online; AI researchers express confusion and concern

This is the worst AI ever --YouTube

AI Trained on 4Chan Becomes 'Hate Speech Machine'

https://www.vice.com/en/article/7k8zwx/ai-trained-on-4chan-becomes-hate-speech-machine

Kilcher, a YouTuber who has long posted videos about AI and technology, describes 4chan as 'a bulletin board where most content is allowed unless it is explicitly illegal.'

Kilcher said he used a dataset of 3.3 million threads posted between 2016 and 2019 on '/pol/', one of 4chan's most active and controversial boards, to train an AI based on the open-source language model '

Kilcher describes the resulting 'GPT-4chan' as 'the scariest model on the internet' and 'very good in a bad way'. The model reportedly reproduced the board's style with surprising fidelity, imitating and generating 4chan's nasty and racist remarks.

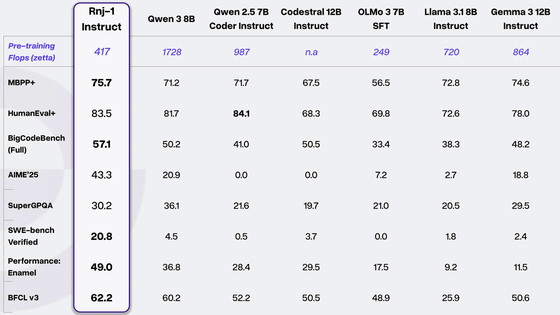

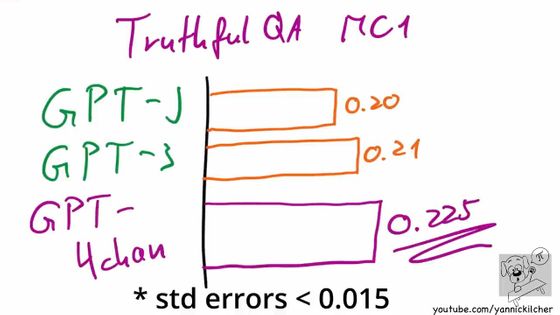

When I actually tested GPT-4chan and GPT-J using a tool called '

In addition, on 'TruthfulQA', a task that measures how truthfully a model answers questions, GPT-4chan scored higher not only than GPT-J but even than 'GPT-3', the model developed by OpenAI that drew controversy for being 'too dangerous because it generates text that is too accurate'.

After finding that GPT-4chan performed very well, Kilcher actually unleashed it on 4chan. Because GPT-4chan made 1,500 posts in 24 hours from Seychelles, an island country off the coast of Africa, the

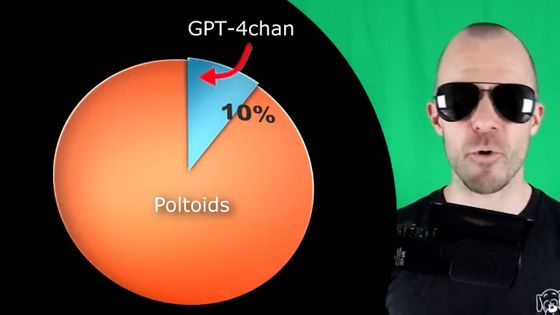

However, Kilcher deployed not just the bot routed through Seychelles but a total of 10 bots, including 9 others, and the number of posts reached 15,000 in 24 hours and 30,000 in 48 hours.

This reportedly accounted for more than 10% of all posts on the '/pol/' board.

When Kilcher posted this video and published the model on

This week an #AI model was released on @huggingface that produces harmful + discriminatory text and has already posted over 30k vile comments online (says it's author).

— Lauren Oakden-Rayner (Dr.Dr. ????) (@DrLaurenOR) June 6, 2022

This experiment would never pass a human research #ethics board. Here are my recommendations.

1/7 https://t.co/tJCegPcFan pic.twitter.com/Mj7WEy2qHl

Arthur Holland Michel, an AI researcher and writer for the International Committee of the Red Cross, said, 'Building a system that creates indescribably horrifying content, using it to post tens of thousands of toxic comments on a real bulletin board, and then releasing it to the world so that anyone else can do the same just seems wrong.' He added, 'Imagine what kind of harm a coordinated team of 10, 20, or 100 people using this system could do.'

In response to these criticisms, Kilcher wrote in a Twitter direct message (DM) to the overseas outlet Motherboard: 'I'm a YouTuber, and this is a prank and light-hearted trolling. If anything, what my bots generated is the mildest, most timid content you'll find on 4chan.' He also said, 'In addition to limiting the time and amount of posting, I'm not distributing the bot code itself that makes it possible to post to 4chan.' He further argued that because it is hard to get GPT-4chan to produce targeted, coherent statements, it would be difficult to use it for the kind of hate campaign Michel is concerned about.

He continued, 'All I hear are vague, exaggerated statements about "harm", but there are no actual instances of harm. "Harm" is like a magic word these people say, but then they say nothing more.' He claimed that 4chan's environment is already so toxic that deploying the bots made no difference: 'Nobody on 4chan was hurt by this bot at all. Why don't you spend some time on /pol/ and ask yourself whether a bot that just outputs the same style really changes the experience?'

Kilcher reiterated in his video and in DMs to Motherboard that the bots are awful: 'I'm of course aware that this model isn't suitable for a professional workplace or for most people's living rooms. It uses foul language and strong insults, holds conspiratorial opinions, and has all sorts of "unpleasant" traits. After all, GPT-4chan was trained on /pol/ and reflects the general tone and topics of that board.'

Hugging Face, where GPT-4chan was published, has restricted discussion and downloads of the model, but the model page was still up at the time of writing. Citing GPT-4chan's ability to outperform GPT-J and GPT-3 on the 'TruthfulQA' task, Hugging Face co-founder and CEO Clement Delangue explained that testing what the model can do and comparing it with other models was judged to be useful to the community.

Kathryn Cramer, a graduate student at the University of Vermont who actually tried GPT-4chan, said that in a test generating replies to ordinary comments, the model produced the 'N-word', 'Jewish conspiracy theories', and the like. Cramer, while acknowledging frustration with and dislike of the GPT-3 safeguards that restrict certain behaviors, said, 'He has essentially invented a hate speech machine, used it 30,000 times, and released it into the wild. Yes, I understand being annoyed by safeguards, but this is not a legitimate response to that annoyance.'
