AI 'MUM' detects 'emergency situations' in Google searches and presents useful information, while 'BERT' cuts unwanted explicit content

People sometimes turn to Google search when they need help right away. To respond to these needs, Google is using the AI models 'MUM' and 'BERT' to surface important information while keeping shocking and harmful content out of the results.

Using AI to keep Google Search safe

Google cuts racy results by 30% for searches like 'Latina teenager' | Reuters

Google is using AI to better detect searches from people in crisis - The Verge

https://www.theverge.com/2022/3/30/23001468/google-search-ai-mum-personal-crisis-information

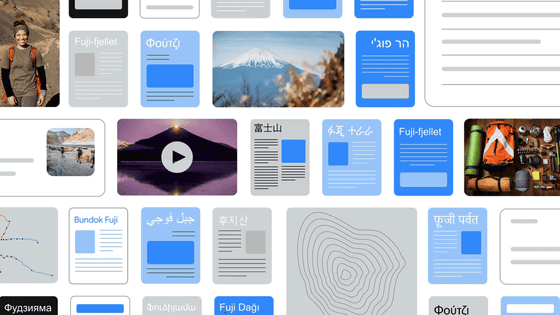

'MUM', short for 'Multitask Unified Model', is a search algorithm Google announced in May 2021.

Google announces new search algorithm 'MUM' that can understand complicated sentences and images beyond the language barrier - GIGAZINE

When someone searches Google for information about suicide, domestic violence, sexual assault, or substance abuse, MUM will surface the most relevant and useful information, along with contact information for a nationwide hotline.

People facing these crises may not always search using the right words. MUM is designed to better understand the intent behind a query, detect when the person searching is in trouble, and more reliably show trustworthy, actionable information at the right time.

These MUM-driven improvements to search results will roll out over the next few weeks.

'BERT', on the other hand, is a search algorithm that Google was already using before MUM. Google searches sometimes return unsolicited pornographic content, and Tulsee Doshi, a product manager on Google's AI team, said that, overall, results had become excessively sexualized.

Google already offers SafeSearch to filter out this kind of result, and it is turned on by default for users under 18. With further improvements to BERT, however, shocking pornographic results for such searches were cut by 30% in 2021 alone.

According to Reuters, results improved for specific phrases such as 'latina teenager,' 'la chef lesbienne,' 'college dorm room,' 'latina yoga instructor,' and 'lesbienne bus.' Reuters also noted that actor Natalie Morales tweeted in 2019 that a Google search for 'Teen' returned ordinary pictures of teenagers, while 'Latina Teen' returned only pornography. Reuters reported that it asked Morales for comment through her agent but received no response.

Trying to find pics for a deck I'm putting together and did you know that when you google

“Teen”

all the normal teenager stuff comes up and when you google

“Latina teen”

It's just porn?

Hey @Google maybe look into that pic.twitter.com/N3K6MnZsEF

— Natalie Morales (@nataliemorales) September 18, 2019

That said, replies to Morales's tweet pointed out back in 2019 that even with SafeSearch turned off, a search for 'latina teen' showed only pictures of ordinary teenagers unless words such as 'porn' or 'naked' were added.

Google hasn't returned non-'safesearch' images for years --except you add a keyword indicating it. 'Porn' or 'naked' or others.

— Sean Eric Fagan (@kithrup) September 18, 2019

So which of the ones below do you consider porn? (SafeSearch is *off*, note.) pic.twitter.com/myGTSmHlnQ


in AI, Web Service, Posted by logc_nt