Google adds an API for creating high-precision depth maps and an API for using AR in recorded videos to its AR development kit 'ARCore'

At the developer conference 'Google I/O 2021', which began on May 18, 2021 local time, Google announced that two new APIs would be introduced in ARCore.

Google Developers Blog: Unlock new use cases and increase developer velocity with the latest ARCore updates

https://developers.googleblog.com/2021/05/unlock-use-cases-and-increase-developer-velocity-with-new-capabilities-in-arcore.html

Google upgrades ARCore API with new features to immerse users

https://www.xda-developers.com/ar-core-raw-depth-api-recording-api-google-io-2021/

ARCore, released in 2018, has been installed more than 1 billion times in total, and as of this writing more than 850 million Android devices run ARCore and can access AR experiences. Google announced that it has released the latest version, ARCore 1.24, which introduces two new APIs: the Raw Depth API and the Recording and Playback API.

◆ Raw Depth API

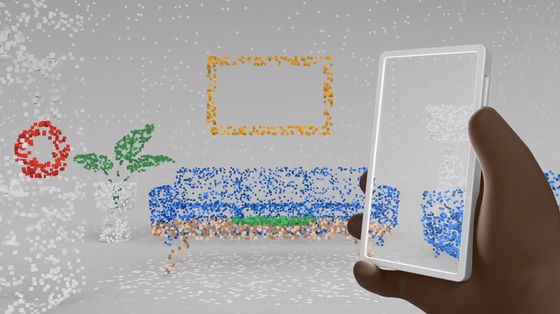

In 2020, Google released the 'Depth API', which generates a depth map using a single camera, making it possible to create depth maps on Android devices and improve AR experiences. The newly announced Raw Depth API is built on the existing Depth API and enables more detailed AR representation by producing raw depth maps.

A raw depth map comes with a corresponding confidence image that gives a confidence value for the depth estimate at each pixel. Because the raw data points are not smoothed, the result can be a more realistic depth map.
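Because raw depth data is unsmoothed, an app has to decide pixel by pixel how far to trust it. The minimal sketch below shows one way to do that: drop every pixel whose confidence falls below a threshold. It does not use the ARCore SDK. The image layout, the millimeter unit, the 0 to 255 confidence scale, the function name `filter_raw_depth`, and the default threshold of 128 are all assumptions made for illustration.

```python
from typing import List, Optional

# Hypothetical representation: each image is a row-major 2D list of ints.
# Assumption (not from the article): depth is in millimeters, 0 means
# "no estimate", and confidence runs from 0 (lowest) to 255 (highest).
DepthImage = List[List[int]]
ConfidenceImage = List[List[int]]


def filter_raw_depth(
    depth_mm: DepthImage,
    confidence: ConfidenceImage,
    min_confidence: int = 128,
) -> List[List[Optional[float]]]:
    """Return depth in meters, or None where the estimate is missing
    or its confidence is below min_confidence."""
    if len(depth_mm) != len(confidence):
        raise ValueError("depth and confidence images must have the same height")
    result: List[List[Optional[float]]] = []
    for depth_row, conf_row in zip(depth_mm, confidence):
        if len(depth_row) != len(conf_row):
            raise ValueError("depth and confidence images must have the same width")
        out_row: List[Optional[float]] = []
        for d, c in zip(depth_row, conf_row):
            # Keep only pixels that have an estimate and enough confidence.
            if d > 0 and c >= min_confidence:
                out_row.append(d / 1000.0)
            else:
                out_row.append(None)
        result.append(out_row)
    return result


if __name__ == "__main__":
    depth = [[1200, 0], [850, 3000]]
    conf = [[255, 255], [40, 200]]
    print(filter_raw_depth(depth, conf))  # [[1.2, None], [None, 3.0]]
```

Raising the threshold trades coverage for accuracy: fewer pixels survive, but those that do are more trustworthy.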

Google has also released a video introducing the Raw Depth API.

Introducing the ARCore Raw Depth API - YouTube

By using the Raw Depth API ...

You can create more detailed depth maps than with the conventional Depth API.

By recognizing the depth and positional relationship of objects, you can realize a more accurate AR experience.

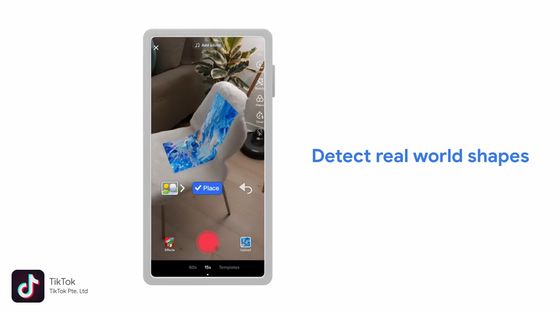

This enables effects such as TikTok's latest one, which can even paste an uploaded image onto objects in real-world video. The image bends at the boundary between the chair's seat and its backrest, showing that accurate depth information about objects in the video can be obtained.

The Raw Depth API

◆ Recording and Playback API

One of the big problems plaguing AR app developers is that, to test an app's behavior in a particular environment, you have to actually go there. Developers cannot always get to that location, lighting conditions change over time, and the data captured by the sensors can vary from one test session to the next.

The Recording and Playback API addresses these problems by making it possible to record IMU (Inertial Measurement Unit) and depth sensor data along with video of the location.
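The benefit of recording is that a test session can be replayed with exactly the same sensor input every time. The minimal sketch below illustrates that idea in plain Python: it writes timestamped IMU and depth samples to a JSON Lines file and replays them in timestamp order. It is not ARCore's API or file format; the `SensorFrame` type and the `record` and `replay` functions are hypothetical names chosen for illustration.

```python
import json
import os
import tempfile
from dataclasses import asdict, dataclass
from typing import Iterator, List, Tuple


@dataclass
class SensorFrame:
    # Hypothetical frame layout; not the ARCore recording format.
    timestamp_ns: int
    accel: Tuple[float, float, float]  # IMU accelerometer sample
    gyro: Tuple[float, float, float]   # IMU gyroscope sample
    depth_mm: List[List[int]]          # depth sensor data for this frame


def record(frames: List[SensorFrame], path: str) -> None:
    """Write frames to a JSON Lines file, one frame per line."""
    with open(path, "w", encoding="utf-8") as f:
        for frame in frames:
            f.write(json.dumps(asdict(frame)) + "\n")


def replay(path: str) -> Iterator[SensorFrame]:
    """Yield recorded frames in timestamp order, exactly as captured."""
    with open(path, encoding="utf-8") as f:
        rows = [json.loads(line) for line in f if line.strip()]
    rows.sort(key=lambda r: r["timestamp_ns"])
    for r in rows:
        # JSON turns tuples into lists, so convert the IMU samples back.
        yield SensorFrame(
            timestamp_ns=r["timestamp_ns"],
            accel=tuple(r["accel"]),
            gyro=tuple(r["gyro"]),
            depth_mm=r["depth_mm"],
        )


if __name__ == "__main__":
    frames = [
        SensorFrame(2_000, (0.0, 9.8, 0.1), (0.0, 0.0, 0.01), [[900]]),
        SensorFrame(1_000, (0.0, 9.8, 0.0), (0.0, 0.0, 0.0), [[1000]]),
    ]
    path = os.path.join(tempfile.mkdtemp(), "session.jsonl")
    record(frames, path)
    # Two replays of the same file see identical sensor input.
    print(list(replay(path)) == list(replay(path)))  # True
```

Because the input no longer depends on where the developer is or what the lighting is like that day, a bug seen in one session can be reproduced in the next.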

Google has also released a video introducing the Recording and Playback API.

One limitation of AR experiences so far has been that you must be physically present in the place where you want to use AR.

Because AR uses a camera to capture information about the surroundings in real time, you could not enjoy an AR experience set in a park while you were at home.

But with the newly announced Recording and Playback API ...

Using video you recorded in the park, you can enjoy the AR app even while you are at home.

Users are not the only ones who benefit from the Recording and Playback API; AR app developers do too.

Because recorded video lets developers test AR without visiting the site repeatedly, DiDi reportedly cut R&D costs by 25% and travel costs by 60%, and shortened its development cycle by as much as six months.
