How does the second-generation iPhone SE measure 'depth' with a single camera?

iPhone SE: The One-Eyed King? - Halide

https://blog.halide.cam/iphone-se-the-one-eyed-king-96713d65a3b1

iFixit, which has published teardowns of many devices, reported in its teardown of the second-generation iPhone SE, published on April 27, 2020, that the phone "seems to have the same camera sensor and display as the iPhone 8."


In other words, the second-generation iPhone SE uses the same camera module as the iPhone 8 released in 2017, yet it is the first iPhone to offer portrait mode using only a single camera, so its camera capabilities have improved. The iPhone XR can also shoot in portrait mode with a single camera, but it relies on a technology called "Focus Pixels," which measures depth using the image sensor's autofocus pixels. For that reason, the Halide development team does not regard the XR's portrait mode as achieved with "a single camera alone."

According to the Halide development team, the defining feature of the second-generation iPhone SE's camera is "depth measurement by software using machine learning." For example, the following photo is processed by software into a portrait photo with a blurred background.
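
Once software has a depth map, turning it into a blurred background is conceptually simple. The article does not describe Apple's rendering pipeline, so the following is only a toy sketch under stated assumptions: a grayscale image, a depth map of the same shape where larger values mean farther away, and a single box blur instead of a realistic lens-blur model. The functions `box_blur` and `portrait_blur` and the parameters `focus_depth` and `falloff` are hypothetical names chosen for illustration.

```python
import numpy as np


def box_blur(image: np.ndarray, radius: int) -> np.ndarray:
    """Average each pixel of a 2-D (grayscale) image over a (2r+1) x (2r+1) window.

    Borders are handled by repeating the edge pixels.
    """
    padded = np.pad(image.astype(float), radius, mode="edge")
    height, width = image.shape
    size = 2 * radius + 1
    out = np.zeros((height, width), dtype=float)
    for dy in range(size):
        for dx in range(size):
            out += padded[dy:dy + height, dx:dx + width]
    return out / (size * size)


def portrait_blur(image: np.ndarray, depth: np.ndarray, focus_depth: float,
                  falloff: float, radius: int = 3) -> np.ndarray:
    """Hypothetical depth-guided background blur (not Apple's actual method).

    A pixel whose depth equals focus_depth stays sharp; the further its depth
    is from focus_depth, the more it is replaced by the blurred copy, reaching
    full blur once the difference is at least falloff.
    """
    blurred = box_blur(image, radius)
    weight = np.clip(np.abs(depth - focus_depth) / falloff, 0.0, 1.0)
    return (1.0 - weight) * image + weight * blurred
```

The output can only be as good as the depth map: if the map wrongly assigns part of the background the same depth as the subject, the weight there stays near zero and that region is left sharp.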

According to the machine learning data Apple makes available to developers, the iPhone XR's software judges that "the central object is in front of its surroundings" and estimates depth as follows.

The second-generation iPhone SE's software, on the other hand, produces the following result. The Halide team calls it "still wrong," but the software does recognize that the nearer part of the background is close to the camera and the farther part is distant from it.

However, depth measurement on the second-generation iPhone SE has several problems. One is that "depth can only be measured roughly." The succulent below consists of many tightly packed clusters of leaves, which makes it difficult to measure accurate depth information.

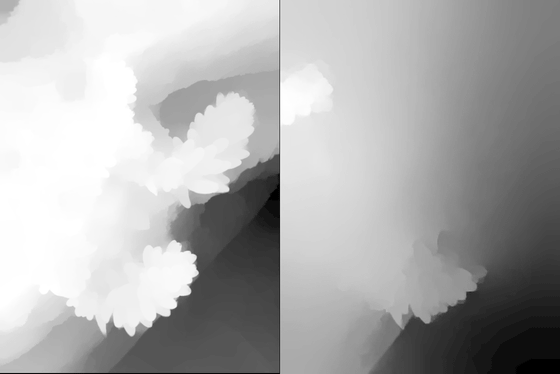

The image below compares the depth map of the iPhone 11 Pro, which has a triple camera (left), with that of the second-generation iPhone SE (right). By combining multiple cameras, the iPhone 11 Pro builds an accurate depth map that follows the outline of each tuft, whereas the second-generation iPhone SE can determine depth only roughly.
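
Depth from multiple cameras generally rests on triangulation: the same point appears shifted (the "disparity") between two viewpoints, and the shift shrinks as the point gets farther away. The sketch below shows the textbook relation for two parallel pinhole cameras, Z = f·B/d; it is not Apple's actual pipeline, and the function name `depth_from_disparity` and the example numbers are illustrative assumptions, not iPhone specifications.

```python
def depth_from_disparity(disparity_px: float, focal_length_px: float,
                         baseline_m: float) -> float:
    """Distance Z (metres) of a point seen by two parallel pinhole cameras.

    Z = f * B / d, where f is the focal length in pixels, B the distance
    between the two cameras in metres, and d the horizontal shift (disparity)
    in pixels of the same point between the two images.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_length_px * baseline_m / disparity_px


# Illustrative numbers only: f = 1000 px, B = 1 cm.
# A point shifted by 5 px lies about 2 m away; a 10 px shift means about 1 m.
print(depth_from_disparity(5, 1000, 0.01))
print(depth_from_disparity(10, 1000, 0.01))
```

Because Z is inversely proportional to d, matching each pixel between views yields a per-pixel depth map. A single camera has no second viewpoint to match against, so the SE must instead infer depth from the content of one image.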

The photos taken by each phone are shown below. Because the iPhone 11 Pro (left) can generate an accurate depth map, every tuft of the succulent is rendered in detail. The second-generation iPhone SE (right) could not measure depth accurately, so the tufts on the right side of the plant are blurred.

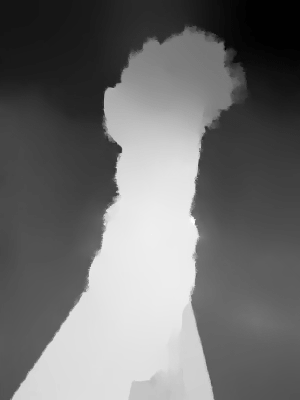

Another problem is that "depth measurement fails when the subject is not a person." Below is an example of such a failure: the trees inside the red frame, above the dog's head, are not blurred.

The software's depth map is shown below, and it clearly fails to distinguish the boundary between the dog and the trees.

To avoid such problems, Apple has configured the default Camera app so that portrait mode is available only when the subject is a person. The Halide development team commented: "If you use portrait mode on subjects other than people, you will be disappointed by the occasional mistake. We think it is a good decision that Apple limits portrait mode to people rather than disappointing users."

The Halide development team also noted, regarding the machine-learning depth estimation of the second-generation iPhone SE: "Just as humans can misjudge depth because of optical illusions and the like, a neural network can make mistakes when measuring depth." While stressing the advantage of shooting with multiple lenses, the team added: "If machine learning continues to evolve at its current pace, software that makes no noticeable depth errors may be ready within a few years."
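
The reason a single image leaves room for such "illusions" can be shown with the standard pinhole camera model: an object of height H at distance Z appears with image height h = f·H/Z. The toy sketch below (the function name `projected_height_px` and all numbers are illustrative assumptions) shows that a small, near object and a large, far object can produce exactly the same image, so a single-camera network has to rely on learned cues such as familiar object sizes rather than geometry alone.

```python
def projected_height_px(object_height_m: float, distance_m: float,
                        focal_length_px: float) -> float:
    """Pinhole projection: image height h = f * H / Z (in pixels)."""
    return focal_length_px * object_height_m / distance_m


# Toy numbers: a 0.5 m object at 1 m and a 1 m object at 2 m
# produce exactly the same image height, so one photo alone cannot tell them apart.
near_small = projected_height_px(0.5, 1.0, 1000)
far_large = projected_height_px(1.0, 2.0, 1000)
print(near_small, far_large)  # 500.0 500.0
```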


in Mobile, Posted by darkhorse_log