MIT develops a neural network chip that computes more than three times faster than conventional designs and cuts power consumption by 94%

MIT researchers have developed a chip for neural networks that performs computations more than three times faster than earlier designs while reducing power consumption by 94%.

Neural networks everywhere | MIT News

http://news.mit.edu/2018/chip-neural-networks-battery-powered-devices-0214

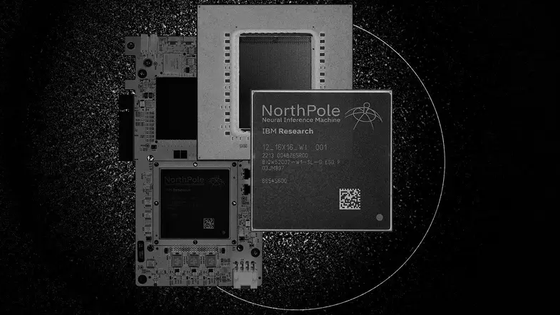

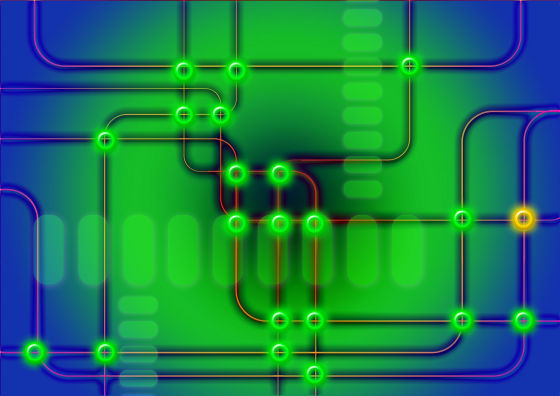

In a conventional processor design, the processor and the memory on the chip are separate, and data is shuttled back and forth between memory and the CPU whenever a computation is performed. "With machine learning algorithms, which perform massive numbers of computations, this data transfer is the dominant factor in energy consumption," said Avishek Biswas, an MIT graduate student in electrical engineering and computer science. The newly developed chip addresses this by reducing the work of a machine learning algorithm to one specific operation, the "dot product," and carrying it out inside the memory itself. Because data no longer has to travel back and forth between the CPU and memory, computation becomes faster and uses less power.
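To make the data-transfer argument concrete, here is a minimal Python sketch built on a toy cost model that is an assumption, not MIT's analysis: it counts only how many values cross the memory/processor boundary, assumes the shared inputs are fetched once and reused, and ignores caches, bus width and conversion costs. The function names are hypothetical.

```python
def conventional_transfers(n_dots: int, length: int) -> int:
    """Values moved when a separate processor computes n_dots dot products.

    Assumption: every weight is fetched from memory once, the shared inputs are
    fetched once and reused, and each result is written back to memory.
    """
    weights_fetched = n_dots * length
    inputs_fetched = length
    results_written = n_dots
    return weights_fetched + inputs_fetched + results_written


def in_memory_transfers(n_dots: int, length: int) -> int:
    """Values moved when the dot products are computed inside the memory.

    Assumption: the weights stay where they are stored; only the inputs go in
    and the finished results come out.
    """
    inputs_sent = length
    results_read = n_dots
    return inputs_sent + results_read


# Hypothetical workload: 16 dot products, each over 64 inputs.
print(conventional_transfers(16, 64), in_memory_transfers(16, 64))
```

For 16 dot products over 64 inputs, the toy conventional model moves 1,104 values versus 80 for the in-memory model, because the 1,024 weights never leave memory. This count only illustrates where the traffic comes from; it does not predict the chip's actual energy savings.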

Neural networks are typically arranged in layers. A single processing node in one layer generally receives data from several nodes in the layer below and passes data on to several nodes in the layer above. Each connection between nodes has its own "weight," which indicates how large a role the output of one node plays in the computation performed by the next, and "training" a neural network is the process of setting these weights.

A node that receives data from multiple nodes in the layer below multiplies each input by the weight of the corresponding connection and adds up the results; this sum of products is the "dot product." If the dot product exceeds a certain threshold, the node passes it on to nodes in the next layer through its own weighted connections.
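The node computation just described can be sketched in Python as follows. This is an illustrative sketch, not the chip's implementation: the function names are hypothetical, and returning 0.0 when the threshold is not exceeded is an assumption standing in for "the node passes nothing on."

```python
from typing import List, Sequence


def dot_product(inputs: Sequence[float], weights: Sequence[float]) -> float:
    """Multiply each input by the weight of its connection and sum the results."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one connection weight")
    return sum(x * w for x, w in zip(inputs, weights))


def node_output(inputs: Sequence[float], weights: Sequence[float], threshold: float) -> float:
    """Pass the dot product on only if it exceeds the threshold (0.0 otherwise, by assumption)."""
    d = dot_product(inputs, weights)
    return d if d > threshold else 0.0


def layer_outputs(inputs: Sequence[float], weight_rows: Sequence[Sequence[float]],
                  threshold: float) -> List[float]:
    """Each node in the next layer has its own row of connection weights."""
    return [node_output(inputs, row, threshold) for row in weight_rows]


# Three inputs from the layer below, two nodes in the next layer.
x = [1.0, 2.0, 3.0]
print(layer_outputs(x, [[0.5, -1.0, 1.0], [0.1, 0.1, 0.1]], threshold=1.0))
```

The first node's dot product (0.5 - 2.0 + 3.0 = 1.5) clears the threshold and is passed on; the second node's (about 0.6) does not.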

In the chip developed by Biswas, a node's input values are converted into voltages and then multiplied by the appropriate weights. Summing the products is simply a matter of combining the voltages, and only the combined voltage is converted to digital form and stored for later processing. As a result, the chip can calculate the dot products for multiple nodes at once: the prototype computed 16 dot products in a single step, without moving data between memory and a processor.
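A rough software model of this in-memory approach is shown below. It is a simplified simulation under stated assumptions, not a description of the actual circuit: inputs in [0, 1] are mapped linearly to voltages, each weight scales its voltage, and each row's combined value is digitized by a hypothetical signed analog-to-digital converter. The names `analog_dot_products`, `v_max` and `adc_bits` are invented for illustration. Each row of `weight_rows` plays the role of one node, so passing 16 rows mirrors the prototype's 16 dot products per step.

```python
from typing import List, Sequence


def analog_dot_products(inputs: Sequence[float],
                        weight_rows: Sequence[Sequence[float]],
                        v_max: float = 1.0,
                        adc_bits: int = 8) -> List[float]:
    """Toy model of computing several dot products inside memory (all parameters hypothetical)."""
    # 1. Encode each input value (assumed to lie in [0, 1]) as a voltage.
    voltages = [x * v_max for x in inputs]

    # Full-scale range of the hypothetical signed ADC: the largest magnitude any row can reach.
    full_scale = v_max * max((sum(abs(w) for w in row) for row in weight_rows), default=0.0)
    levels = 2 ** (adc_bits - 1) - 1
    step = full_scale / levels if full_scale > 0 else 1.0

    results = []
    for row in weight_rows:
        if len(row) != len(voltages):
            raise ValueError("each row needs one weight per input")
        # 2-3. Scale each voltage by its weight and combine them. On the chip this
        #      happens in the analog domain for all rows in one step; here it is a loop.
        combined = sum(w * v for w, v in zip(row, voltages))
        # 4. Only the combined value is digitized: round it to the nearest ADC level.
        results.append(round(combined / step) * step)
    return results


# 16 nodes (rows) sharing the same 64 inputs, echoing the prototype's 16 dot products per step.
x = [(i % 7) / 7 for i in range(64)]
W = [[((r * 64 + c) % 11 - 5) / 5 for c in range(64)] for r in range(16)]
print(analog_dot_products(x, W)[:4])
```

Because only the final sums are digitized, the model's only error is rounding each sum to the nearest ADC level (at most half a step), which illustrates why combining voltages before conversion is attractive.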

According to MIT, the new chip speeds up neural network computations by three to seven times compared with earlier designs and reduces power consumption by 94 to 95%. Until now, applications on devices such as smartphones have typically uploaded data to a server and received the results of the processing done there, but the new chip could make it possible to run neural networks locally on the device itself.
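The reported power figures can be turned into simple ratios. The sketch below is idealized arithmetic, not a measurement: it assumes the neural network workload is the only thing drawing power, so battery runtime scales inversely with power draw. The function names are hypothetical.

```python
def remaining_fraction(reduction_percent: float) -> float:
    """A reduction of p percent leaves (100 - p) percent of the original."""
    return 1.0 - reduction_percent / 100.0


def runtime_multiplier(reduction_percent: float) -> float:
    """Idealized: runtime on a fixed battery scales as 1 / power draw."""
    return 1.0 / remaining_fraction(reduction_percent)


for r in (94, 95):
    print(f"{r}% less power -> {remaining_fraction(r):.0%} of the original draw, "
          f"about {runtime_multiplier(r):.1f}x the runtime for that workload")
```

Under this idealization, a 94 to 95% cut corresponds to roughly 17 to 20 times longer runtime for the neural network workload alone; on a real phone other components also draw power, so the overall gain would be smaller.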


in Hardware, Posted by darkhorse_log