It has become clear that Google is developing "PlaNet," an image recognition AI with superhuman ability

By CHRISTOPHER DOMBRES

Google has been using machine learning and deep learning to build computers and artificial intelligence with human-level recognition capabilities, such as technology that automatically generates descriptive text for images. It has now become clear that Google has succeeded in developing an AI that can identify where a photograph was taken from the photo's pixel information alone, with accuracy exceeding that of humans.

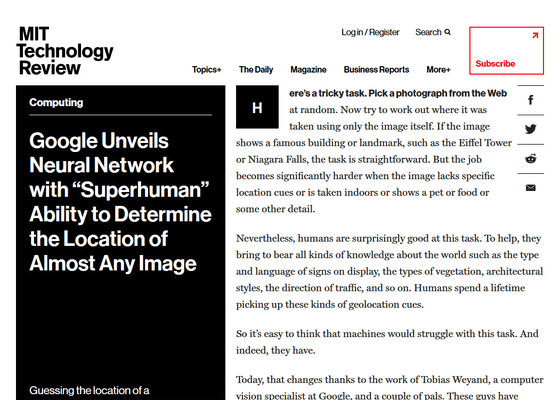

Google Unveils Neural Network with "Superhuman" Ability to Determine the Location of Almost Any Image

https://www.technologyreview.com/s/600889/google-unveils-neural-network-with-superhuman-ability-to-determine-the-location-of-almost/

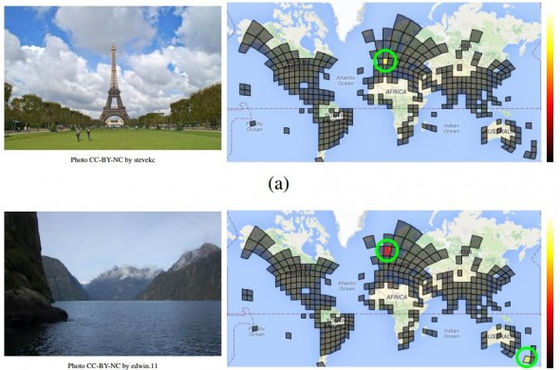

Picking a photo at random from the web and working out where it was taken from what appears in it seems difficult. However, if a photo shows a famous landmark or scenery such as the Eiffel Tower, identifying the location is relatively easy. In fact, there are techniques for pinpointing where a photo was taken from the landscape and other details in the image, and they work even when the photo does not show a tourist spot.

How to identify shooting locations from photos uploaded to Twitter or Instagram - GIGAZINE

However, when a photo contains no clue pointing to a specific position, as with photos taken indoors or close-ups of pets or food, identifying where it was taken becomes very difficult. Still, humans are surprisingly good at this task and can infer a location from all kinds of information, including lettering, plant species, architectural styles, and traffic flow.

Now, Google's Tobias Weyand and his colleagues have published a paper on research aimed at enabling computers to recognize any image. According to the paper, they appear to have developed an AI that can determine where a photo was taken "with accuracy exceeding that of humans" using only the image's pixel information. To let a computer identify the shooting location from a photo's pixels alone, Weyand, a computer vision specialist, turned to deep learning: the team built a data set of more than 126 million photos together with the location metadata in their Exif information, and trained a neural network on it. As a result, the AI can estimate where a photo was taken far more accurately than a human, and it apparently became able to locate even photos that at first glance contain no location information at all, such as indoor shots or pictures of pets and food.

By Kate Ter Haar

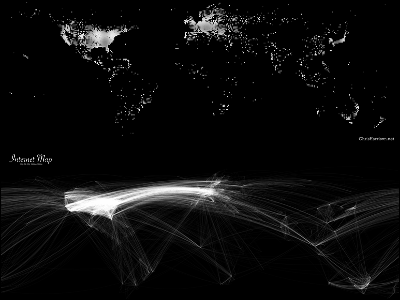

The method is reportedly quite simple, at least by machine-learning standards: draw gridlines over the world map and treat each photo's shooting location as a single grid cell. First, a large number of photos with location information are collected from the web to build a database. Next, the geotagged photos are used to lay out cells region by region. Each photo in the database falls into one cell; oceans and the Arctic and Antarctic get no cells, cities where many photos are taken get small cells, and sparsely photographed rural areas get large cells.
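The grid idea can be sketched as a simple adaptive quadtree over latitude and longitude. This is a minimal illustration under our own assumptions, not Google's implementation: the names `adaptive_partition`, `max_photos`, and `min_photos`, and the equal-angle four-way split, are hypothetical choices. A cell holding too many photos is split into four, and a cell holding too few (for example, open ocean) is dropped, so busy cities end up with small cells and sparse regions with large ones.

```python
from dataclasses import dataclass


@dataclass
class Cell:
    lat_min: float
    lat_max: float
    lon_min: float
    lon_max: float
    photos: list  # (lat, lon) tuples of the photos that fall inside this cell


def split(cell):
    """Split a cell into four equal-angle quadrants and distribute its photos."""
    lat_mid = (cell.lat_min + cell.lat_max) / 2
    lon_mid = (cell.lon_min + cell.lon_max) / 2
    quads = [
        Cell(cell.lat_min, lat_mid, cell.lon_min, lon_mid, []),  # 0: south-west
        Cell(cell.lat_min, lat_mid, lon_mid, cell.lon_max, []),  # 1: south-east
        Cell(lat_mid, cell.lat_max, cell.lon_min, lon_mid, []),  # 2: north-west
        Cell(lat_mid, cell.lat_max, lon_mid, cell.lon_max, []),  # 3: north-east
    ]
    for lat, lon in cell.photos:
        index = (1 if lon >= lon_mid else 0) + (2 if lat >= lat_mid else 0)
        quads[index].photos.append((lat, lon))
    return quads


def adaptive_partition(photos, max_photos, min_photos, max_depth=20):
    """Hypothetical adaptive grid: split dense cells, discard sparse ones."""
    root = Cell(-90.0, 90.0, -180.0, 180.0, list(photos))
    stack = [(root, 0)]
    kept = []
    while stack:
        cell, depth = stack.pop()
        if len(cell.photos) < min_photos:
            continue  # too few photos (e.g. ocean, polar regions): no cell here
        if len(cell.photos) > max_photos and depth < max_depth:
            stack.extend((quad, depth + 1) for quad in split(cell))
        else:
            kept.append(cell)  # this cell becomes one "class" for the network
    return kept
```

For example, with `max_photos=10` and `min_photos=5`, two tight clusters of eight photos near Paris each end up in their own cell about 0.7° tall, six scattered photos in Australia share one cell covering a quarter of the globe, and a lone mid-Pacific photo is discarded. Once the cells exist, locating a photo becomes a classification problem: the network only has to pick the right cell.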

The result is an AI called "PlaNet." To measure its accuracy, the research team collected 2.3 million geotagged images from Flickr and had PlaNet deduce where each one was taken. According to Weyand and his colleagues, "PlaNet localizes 3.6% of the images at street-level accuracy and 10.1% at city-level accuracy." Furthermore, it identifies the correct country for 28.4% of the photos and the correct continent for 48%, reports MIT Technology Review, published by the Massachusetts Institute of Technology.
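Accuracy "at street level" or "at country level" is typically measured as the fraction of photos whose predicted location lands within some distance of the true one. The sketch below assumes that convention; the distance thresholds in `THRESHOLDS_KM` are assumed values commonly used in geolocation benchmarks, not figures taken from this article, and the function names are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0

# Assumed distance thresholds (km) for each granularity level.
THRESHOLDS_KM = {
    "street": 1.0,
    "city": 25.0,
    "region": 200.0,
    "country": 750.0,
    "continent": 2500.0,
}


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two (lat, lon) points in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(min(1.0, a)))


def accuracy_by_level(predictions, truths):
    """Fraction of predictions within each level's distance threshold."""
    errors = [haversine_km(p[0], p[1], t[0], t[1]) for p, t in zip(predictions, truths)]
    return {
        level: sum(err <= km for err in errors) / len(errors)
        for level, km in THRESHOLDS_KM.items()
    }
```

With four photos whose predictions miss by 0 km, about 11 km, about 334 km, and about 5,560 km, the street-level score is 0.25, the city-level score 0.5, and the country-level score 0.75.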

To test PlaNet's ability further, Weyand and his team pitted it against ten well-traveled people. The test used "GeoGuessr," a game that shows players a randomly selected Google Street View image and asks them to mark on a map where the scene is. PlaNet overwhelmed the ten travel enthusiasts. According to Weyand, "PlaNet won 28 of the 50 rounds with a median localization error of 1131.7 km, while the median human localization error was 2320.75 km," so PlaNet's ability apparently clearly surpassed that of humans.
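A head-to-head comparison like this can be summarized by counting the rounds in which the model's error was smaller than the human's and by a typical error per side. This is a hypothetical sketch: it assumes a round is "won" by whichever guess lands closer, and the error values in the example are made up for illustration. It reports the median; swap in `statistics.mean` for an average.

```python
from statistics import median


def head_to_head(model_errors_km, human_errors_km):
    """Count rounds the model wins (smaller error) and return each side's median error.

    Assumption: a round is won by whichever guess is closer to the true location.
    """
    wins = sum(m < h for m, h in zip(model_errors_km, human_errors_km))
    return wins, median(model_errors_km), median(human_errors_km)


# Hypothetical errors (km) over five rounds, for illustration only.
model = [100, 2000, 500, 3000, 50]
human = [900, 1500, 1200, 2500, 4000]
print(head_to_head(model, human))
```

Using the median rather than the mean keeps one wildly wrong guess (say, the wrong continent) from dominating the summary.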

Anyone can try "GeoGuessr," the game PlaNet competed in, for free at the link below. It is a simple quiz that shows a randomly chosen Google Street View scene and asks where on the map it is, but playing it gives you a feel for just how good PlaNet's performance is.

GeoGuessr - Let's explore the world!

Even more interesting is that PlaNet does not rely on the same hints humans use, such as the plants and architectural styles that appear in a photo. "We think PlaNet has an advantage over humans because it has seen many more places than any human can ever visit," Weyand said, suggesting that PlaNet's victory is only natural. In addition, even for a photo that contains nothing that would reveal its location, PlaNet may be able to identify where it was taken from the other photos in the same album.
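One simple way to use an album as context is to combine the per-photo predictions over grid cells. The sketch below averages each photo's probability distribution over cells and picks the most likely cell for the whole album. Averaging is our own illustrative choice, not necessarily what the researchers did, and `album_location` and the probability values are hypothetical.

```python
def album_location(photo_probs):
    """Average per-photo probability vectors (one entry per grid cell).

    Returns the index of the most probable cell for the album and the averaged vector.
    Assumption: every photo's vector covers the same cells in the same order.
    """
    n_photos = len(photo_probs)
    n_cells = len(photo_probs[0])
    averaged = [sum(p[c] for p in photo_probs) / n_photos for c in range(n_cells)]
    best_cell = max(range(n_cells), key=averaged.__getitem__)
    return best_cell, averaged


# Hypothetical distributions over three cells.
pet_photo = [0.40, 0.35, 0.25]   # ambiguous on its own, slightly favours cell 0
street_photo = [0.10, 0.80, 0.10]  # clearly points to cell 1
food_photo = [0.30, 0.40, 0.30]
print(album_location([pet_photo])[0])                           # the pet photo alone
print(album_location([pet_photo, street_photo, food_photo])[0])  # the whole album
```

On its own the pet photo would be assigned to cell 0, but combined with a street scene from the same album the estimate moves to cell 1.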

Another notable point is that PlaNet needs very little storage. Weyand said, "Our model (PlaNet) uses only 377 MB, which even fits into the memory of a smartphone," so it may be only a matter of time before features built on powerful neural network technology appear on smartphones.


in Science, Posted by logu_ii