How does "Google Translate" automatically recognize and translate text just by pointing a smartphone at it?

Google's translation app "Google Translate" has a feature that converts text in a photo into another language, and an update distributed on July 29 expanded the supported languages to 27. Otavio Good, a software engineer at Google Translate, explains how this feature, which looks useful when traveling abroad, works.

Research Blog: How Google Translate squeezes deep learning onto a phone

http://googleresearch.blogspot.jp/2015/07/how-google-translate-squeezes-deep.html

The ability to translate text simply by pointing the smartphone's camera at it is built on a technology called a neural network. A neural network is a kind of machine learning algorithm that aims to imitate the interactions between nerve cells in the brain; put simply, it is software that mimics the human brain and is able to learn. For example, neural networks are deeply involved in "image recognition", the technique of working out whether an image shows a dog or a cat.

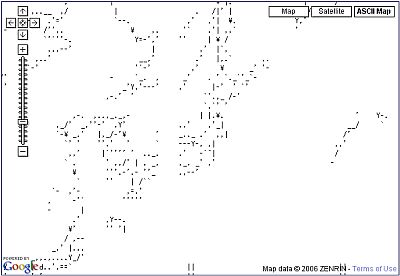

When Google Translate takes in an image, the first thing it does is pick out the "letters". Among the pixels that make up the image, Google Translate picks out only those that are similar in color and sit next to one another. The pixels it picks out are treated as characters, and the other, unneeded elements are discarded.

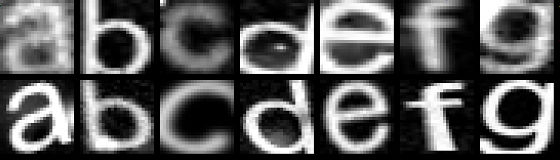

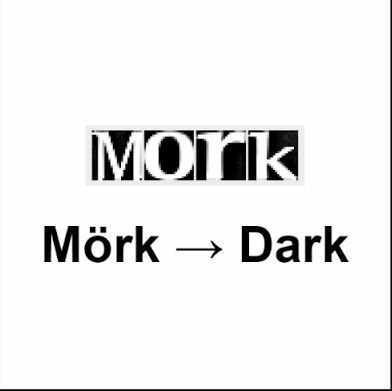
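The letter-finding step described above can be sketched roughly as follows. This is only an illustration under stated assumptions, not Google's actual implementation: the image is a small grid of grey levels, and the function names `find_blobs` and `letter_candidates`, as well as the `tolerance` and `max_gap` parameters, are hypothetical. Pixels of similar color are grouped into blobs, and a blob is kept only if a similarly colored blob sits beside it.

```python
from collections import deque

def find_blobs(image, tolerance=20):
    """Group 4-connected pixels whose grey level is within `tolerance` of the blob's seed pixel."""
    height, width = len(image), len(image[0])
    seen = [[False] * width for _ in range(height)]
    blobs = []
    for y in range(height):
        for x in range(width):
            if seen[y][x]:
                continue
            seed = image[y][x]
            seen[y][x] = True
            queue = deque([(y, x)])
            pixels = []
            while queue:  # breadth-first flood fill from the seed pixel
                cy, cx = queue.popleft()
                pixels.append((cy, cx))
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if (0 <= ny < height and 0 <= nx < width and not seen[ny][nx]
                            and abs(image[ny][nx] - seed) <= tolerance):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            blobs.append({"color": seed, "pixels": pixels})
    return blobs

def bounding_box(blob):
    ys = [p[0] for p in blob["pixels"]]
    xs = [p[1] for p in blob["pixels"]]
    return min(ys), max(ys), min(xs), max(xs)

def letter_candidates(blobs, tolerance=20, max_gap=2):
    """Keep blobs that have a similarly colored blob next to them on the same rows."""
    boxes = [bounding_box(b) for b in blobs]
    kept = []
    for i, a in enumerate(blobs):
        for j, b in enumerate(blobs):
            if i == j or abs(a["color"] - b["color"]) > tolerance:
                continue
            top_a, bottom_a, left_a, right_a = boxes[i]
            top_b, bottom_b, left_b, right_b = boxes[j]
            rows_overlap = top_a <= bottom_b and top_b <= bottom_a
            # Number of pixel columns separating the two bounding boxes.
            gap = max(left_a, left_b) - min(right_a, right_b) - 1
            if rows_overlap and 0 <= gap <= max_gap:
                kept.append(a)
                break
    return kept

# Toy image: light background (200), two dark strokes (10) side by side,
# and one isolated grey speck (100) that should be discarded.
image = [
    [200, 200, 200, 200, 200, 200, 200],
    [200,  10, 200,  10, 200, 200, 200],
    [200,  10, 200,  10, 200, 200, 200],
    [200,  10, 200,  10, 200, 200, 200],
    [200, 200, 200, 200, 200, 200, 100],
]
blobs = find_blobs(image)
letters = letter_candidates(blobs)
```

In this toy image the background and the lone speck have no similarly colored neighbor blob, so only the two dark strokes survive as letter candidates.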

Next, Google Translate works out which character each of the selected characters actually is. This is where the neural network plays a major role. However, unlike the characters used on PCs and the Internet, the characters on signs and signboards do not all have the same shape. For this reason, Google's neural network needs to learn not only "clean letters" but also "dirty letters". Since the variety of "dirty letters" in the real world is close to infinite, it apparently copes by learning from letters that are unclear or distorted, like those in the image below.
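One way to picture learning from "dirty" letters is to generate them artificially from a clean glyph. The following is a minimal sketch under assumptions of my own, not the generator Google uses: the 5x5 glyph `CLEAN_T`, the helper names, and the specific kinds of dirt (a small horizontal shift, random pixel flips standing in for smudges, and brightness noise) are all hypothetical choices made for illustration.

```python
import random

# A clean 5x5 bitmap of the letter "T" ("#" = ink, "." = background).
CLEAN_T = [
    "#####",
    "..#..",
    "..#..",
    "..#..",
    "..#..",
]

def to_pixels(rows):
    """Convert the text bitmap into a grid of floats (1.0 = ink, 0.0 = background)."""
    return [[1.0 if ch == "#" else 0.0 for ch in row] for row in rows]

def make_dirty(glyph, rng, noise=0.2, max_shift=1, smudge_prob=0.1):
    """Return a distorted copy of `glyph`: shifted sideways, smudged, and noisy."""
    height, width = len(glyph), len(glyph[0])
    dx = rng.randint(-max_shift, max_shift)  # horizontal shift for this sample
    dirty = []
    for y in range(height):
        row = []
        for x in range(width):
            src = x - dx
            value = glyph[y][src] if 0 <= src < width else 0.0
            if rng.random() < smudge_prob:
                value = 1.0 - value  # flip the pixel to imitate a smudge
            value += rng.uniform(-noise, noise)  # brightness noise
            row.append(min(1.0, max(0.0, value)))  # clamp to [0, 1]
        dirty.append(row)
    return dirty

def training_set(glyph, label, count, seed=0):
    """Produce `count` (image, label) pairs of dirty variants of one clean glyph."""
    rng = random.Random(seed)
    return [(make_dirty(glyph, rng), label) for _ in range(count)]

samples = training_set(to_pixels(CLEAN_T), "T", count=5)
```

Each sample keeps the correct label "T" while the pixels differ, which is the point: the network sees many imperfect versions of the same letter rather than one ideal shape.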

Once it has recognized which characters are in the image, the app translates them by looking up the corresponding letters and words in a dictionary. However, character recognition inevitably makes mistakes; for example, "S" may be recognized as "5". To correct such mistakes, the dictionary lookup searches for an approximate match rather than requiring a perfect one.

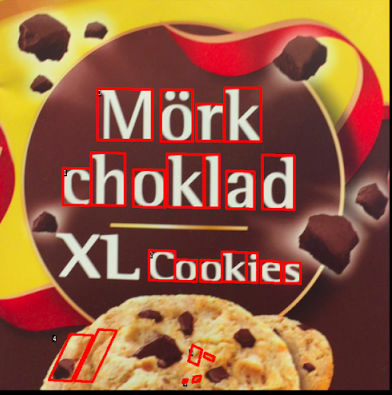
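The approximate lookup can be illustrated with an edit distance in which look-alike characters such as "S" and "5" are cheap to swap. This is a sketch, not the app's actual algorithm: the tiny `DICTIONARY`, the `LOOKALIKES` pairs, the cost of 0.25, and the `max_distance` threshold are all assumptions chosen for the example.

```python
# Hypothetical Spanish-to-English dictionary used only for this example.
DICTIONARY = {"SALIDA": "EXIT", "PARE": "STOP", "PESO": "WEIGHT"}

# Character pairs that a recognizer might plausibly confuse (assumed list).
LOOKALIKES = {frozenset("S5"), frozenset("O0"), frozenset("I1"), frozenset("B8")}

def substitution_cost(a, b):
    """Swapping look-alike characters costs less than swapping unrelated ones."""
    if a == b:
        return 0.0
    if frozenset((a, b)) in LOOKALIKES:
        return 0.25
    return 1.0

def distance(recognized, candidate):
    """Weighted Levenshtein distance computed row by row."""
    prev = [float(j) for j in range(len(candidate) + 1)]
    for i, a in enumerate(recognized, 1):
        cur = [float(i)]
        for j, b in enumerate(candidate, 1):
            cur.append(min(
                prev[j] + 1.0,                          # delete a character
                cur[j - 1] + 1.0,                       # insert a character
                prev[j - 1] + substitution_cost(a, b),  # substitute or match
            ))
        prev = cur
    return prev[-1]

def translate(recognized, max_distance=1.0):
    """Return the translation of the closest dictionary word, or None if nothing is close."""
    best = min(DICTIONARY, key=lambda word: distance(recognized, word))
    if distance(recognized, best) > max_distance:
        return None
    return DICTIONARY[best]

print(translate("5ALIDA"))  # misread "S" as "5", still resolves to EXIT
```

Because the "5"/"S" swap costs only 0.25, the misread word stays well within the threshold, while a string unrelated to every dictionary entry is rejected instead of being forced onto the nearest word.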

When the translation is complete, the translated text is superimposed on the original text in the image.

What is notable about this feature is that it works offline. If the app connected to Google's data centers, reading text from the image and translating it would not seem that difficult. To make instant translation possible even offline, a neural network is used here as well, and we are entering an era in which, thanks to neural networks, people can use and understand a foreign language without having studied it.
