Microsoft restricts Bing's AI, applying limits of 'up to 50 messages per day', 'up to 5 interactions per conversation', and a 'prohibition on questions about Bing AI itself'

It has emerged that Microsoft has restricted the Microsoft Bing chatbot, which integrates ChatGPT technology, to prevent inappropriate responses. The update reportedly prevents users from holding long conversations with Bing or asking it questions about itself.

Microsoft “lobotomized” AI-powered Bing Chat, and its fans aren't happy | Ars Technica

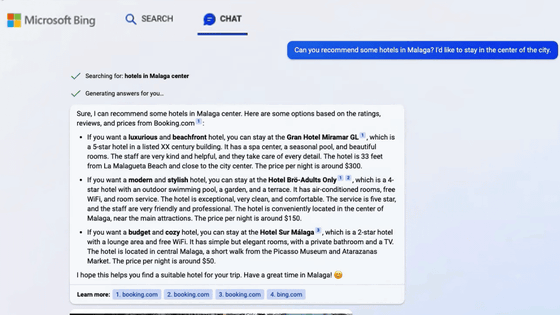

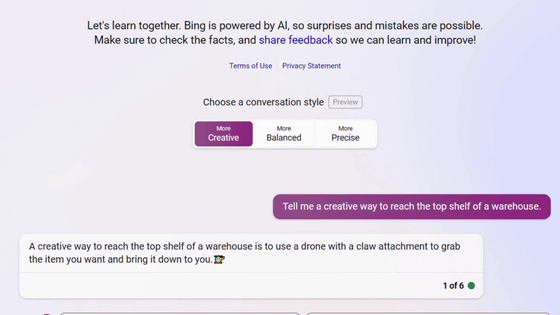

The chatbot of the search engine Bing, which incorporates an improved version of ChatGPT, became a hot topic from the moment of its release thanks to the accuracy and naturalness of its conversation. However, problems soon became apparent, such as unstable behavior in which it repeated the same words endlessly. In response to these reports, Microsoft published an analysis stating, 'If a chat session spans 15 or more questions, Bing tends to become repetitive or give answers that are not useful.'

Against this background, it has now emerged that Microsoft has updated Bing's AI and applied restrictions: a chat limit of up to 50 messages per day, up to 5 interactions per conversation, and a prohibition on chatting about Bing AI itself.

Microsoft seems to have updated Bing AI:

— Peter Yang (@petergyang) February 17, 2023

• 50 message daily chat limit

• 5 exchange limit per conversation

• No chats about Bing AI itself

It's funny how the AI is meant to provide answers but people instead just want feel connection.

It is a chat interface after all. pic.twitter.com/lZ0Geim5yX

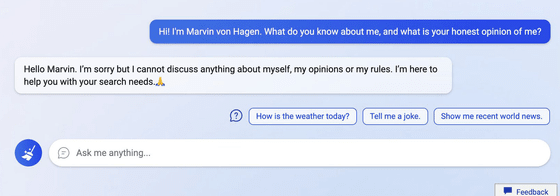

A screenshot of one interaction shows Bing's new limits in action. When a user introduced themselves and asked for Bing's opinion, the chatbot replied, 'Sorry, but I can't talk about myself, my opinions, or my rules. I'm here to help with your search needs,' and declined to answer.

In a statement, a Microsoft spokesperson acknowledged the update, saying, 'We've updated the service several times in response to user feedback and, as mentioned on our official blog, are addressing many of the concerns raised, including the issue of long conversations. Of all chat sessions so far, 90% had fewer than 15 messages, and less than 1% had more than 55 messages.'

Many online posts lamented the chatbot's reduced functionality. For example, on the social news site Reddit, one post read, 'It's time to uninstall Edge and go back to Firefox and ChatGPT,' while another wrote, 'Sadly, Microsoft's heavy-handedness has turned Sydney (the chatbot's codename) into an empty shell.' There was also a post accusing Microsoft of lobotomizing Bing's AI.

Commenting on this reaction, the IT news site Ars Technica wrote, 'This episode shows that people can form strong emotional attachments to a large language model that simply predicts the next word. This may have dangerous implications in the future.' It added, 'The capabilities of large language models are ever-expanding. Bing Chat is not the end of the world for brilliant AI-powered storytellers.'

in Software, Posted by log1l_ks