'Riffusion', an AI that automatically generates music from text prompts, has been released; it is based on the image generation AI 'Stable Diffusion' and anyone can use it freely

The AI 'Stable Diffusion', which generates images from input text (prompts), has been customized by many people because its model data is publicly available. 'Riffusion' is an AI developed by fine-tuning a Stable Diffusion model so that it generates music simply from entered text.

Riffusion

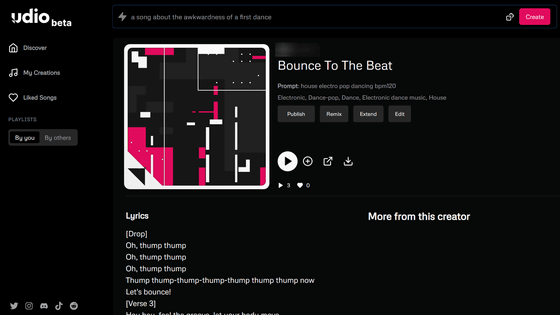

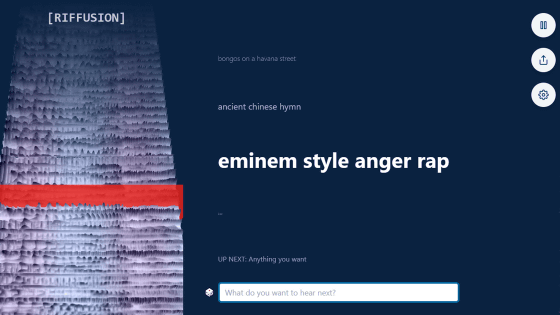

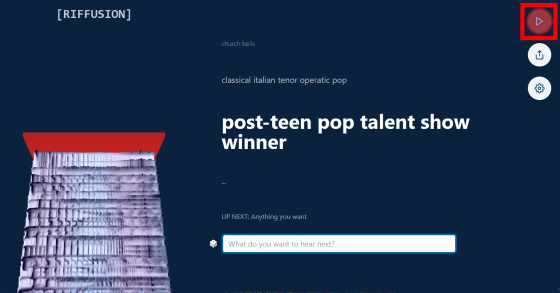

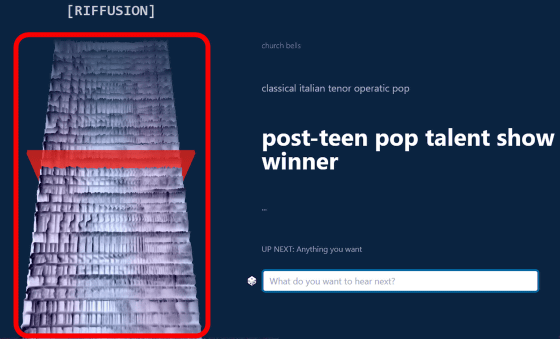

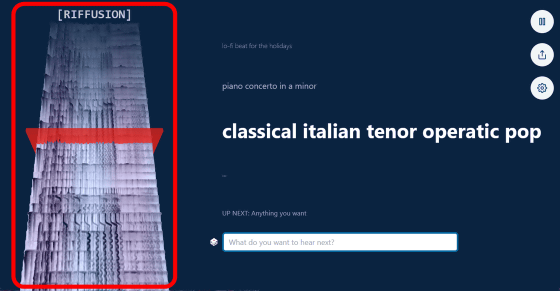

The Riffusion development team has released a web application that makes it easy for anyone to use Riffusion. When you access the web app, you will see the prompt 'post-teen pop talent show winner' on the right side of the screen. To play the song, click the play button at the top right of the screen.

When you click the play button, the figure on the left side of the screen starts scrolling upwards and the song starts playing. The song certainly does sound like something by a teenage artist.

A prompt input field is displayed at the bottom of the screen so that you can freely enter the prompt and generate music. As a test, I entered 'japanese pop (J-POP)' and pressed the Enter key.

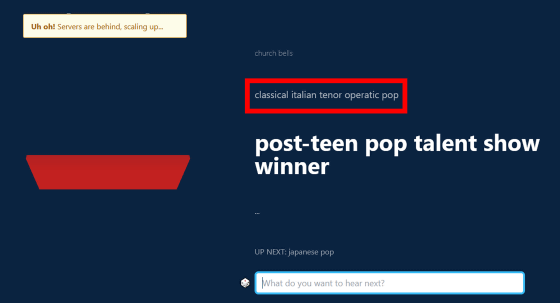

When you enter a prompt, it is displayed in the 'next song to play' field located above the input field. However, at the time of writing, the server did not seem able to handle the load: a notification saying that the load was too high appeared at the top left of the screen, and no music could be generated.

At the top of the screen is a series of pre-generated song prompts. As a test, I clicked on the prompt 'classical italian tenor operatic pop'.

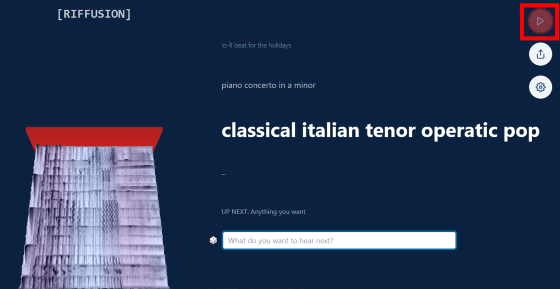

The screen then switches, so click the play button at the top right of the screen.

Music started playing. Now that the prompt mentions it, the tune does sound like operatic pop.

The prompts in the examples above were hard to interpret, which made it hard to appreciate what the AI can do. A song has been generated. It is impressive that the desired music can be generated from just a single word.

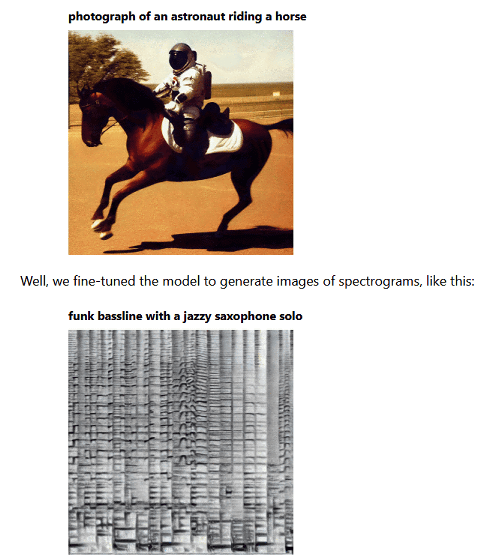

According to Riffusion's

Then, by converting the generated spectrogram into audio data, Riffusion achieves 'generating music according to the prompt'. For example, converting the spectrogram shown at the bottom of the image above into audio data gives the following.

・Song examples
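
To give a feel for what 'converting a spectrogram into audio data' involves, here is a minimal sketch in Python. A spectrogram stores only how loud each frequency is over time, not the phase, so the phase has to be estimated before a waveform can be rebuilt. The sketch uses the Griffin-Lim algorithm, a common phase-estimation technique; this article does not state which method Riffusion's own code uses, so treat that choice as an assumption. The window size, hop size, and the 500 Hz test tone are illustrative values, and the step of mapping image pixels to magnitudes is omitted.

```python
import numpy as np

N_FFT = 256  # FFT window size (assumed value for illustration)
HOP = 64     # samples between successive frames (assumed value)

def stft(signal, n_fft=N_FFT, hop=HOP):
    """Short-time Fourier transform with a Hann window; returns (frames, bins)."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(signal) - n_fft) // hop
    frames = np.stack([signal[i * hop:i * hop + n_fft] * window
                       for i in range(n_frames)])
    return np.fft.rfft(frames, axis=1)

def istft(spec, n_fft=N_FFT, hop=HOP):
    """Inverse STFT by windowed overlap-add."""
    window = np.hanning(n_fft)
    frames = np.fft.irfft(spec, n=n_fft, axis=1) * window
    length = n_fft + hop * (len(frames) - 1)
    signal = np.zeros(length)
    norm = np.zeros(length)
    for i, frame in enumerate(frames):
        signal[i * hop:i * hop + n_fft] += frame
        norm[i * hop:i * hop + n_fft] += window ** 2
    # Floor the normaliser so the barely-covered edge samples do not blow up.
    return signal / np.maximum(norm, 1e-3)

def griffin_lim(magnitude, n_iter=32, seed=0):
    """Estimate a waveform whose STFT magnitude approximates `magnitude`."""
    rng = np.random.default_rng(seed)
    # Start from random phase, then alternate between time and frequency domains.
    phase = np.exp(2j * np.pi * rng.random(magnitude.shape))
    for _ in range(n_iter):
        audio = istft(magnitude * phase)
        phase = np.exp(1j * np.angle(stft(audio)))  # keep phase, discard magnitude
    return istft(magnitude * phase)

# Demo: build a magnitude-only spectrogram of a 500 Hz tone, then rebuild audio.
sample_rate = 8000
t = np.arange(sample_rate) / sample_rate
tone = np.sin(2 * np.pi * 500 * t)
magnitude = np.abs(stft(tone))   # the phase information is thrown away here
audio = griffin_lim(magnitude)   # waveform recovered from magnitudes alone
```

The recovered `audio` array could then be written to a WAV file for playback. Real spectrogram images would first need their pixel values mapped back to magnitudes, which depends on how the images were produced.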

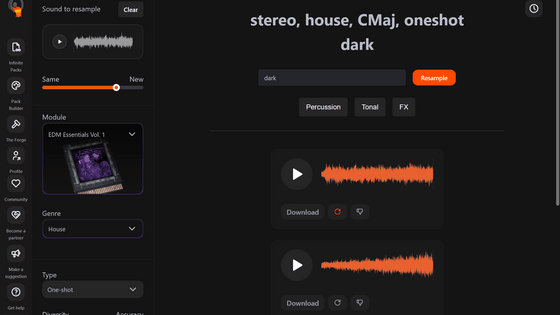

Stable Diffusion has a function called 'img2img' that takes an image as input and generates an image similar to it. Riffusion can use an img2img-like function as well, making it possible to input a song and output a similar song. The following audio samples illustrate this 'output similar music' function.

・Original song

・Original song converted into a piano style

Riffusion also has an interpolation function that connects multiple short songs to create a long song. With this interpolation function, it is possible to connect not only similar songs but also songs with very different moods without the result sounding unnatural. For example, you can hear the smooth transition from typing sounds to jazz in the example below.

・Smooth transition from typing sounds to jazz
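
This article does not explain how the interpolation is implemented, but a common way to blend two outputs of a diffusion model is to interpolate between their starting noise (latent) tensors, often with spherical linear interpolation (slerp). The sketch below is an illustration under that assumption, not Riffusion's actual code; the `slerp` helper, the latent shape, and the number of steps are all hypothetical.

```python
import numpy as np

def slerp(t, v0, v1, eps=1e-7):
    """Spherical linear interpolation between two arrays of the same shape."""
    v0 = np.asarray(v0, dtype=float)
    v1 = np.asarray(v1, dtype=float)
    a, b = v0.ravel(), v1.ravel()
    # Angle between the two vectors, clipped to guard against rounding error.
    cos_omega = np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b))
    omega = np.arccos(np.clip(cos_omega, -1.0, 1.0))
    if np.sin(omega) < eps:
        # Nearly parallel vectors: fall back to plain linear interpolation.
        return (1.0 - t) * v0 + t * v1
    return (np.sin((1.0 - t) * omega) * v0 + np.sin(t * omega) * v1) / np.sin(omega)

# Hypothetical latents standing in for "typing sounds" and "jazz".
rng = np.random.default_rng(0)
latent_typing = rng.standard_normal((4, 64, 64))
latent_jazz = rng.standard_normal((4, 64, 64))

# Five evenly spaced steps from the first latent to the second.
steps = [slerp(t, latent_typing, latent_jazz) for t in np.linspace(0.0, 1.0, 5)]
```

Each intermediate latent would be decoded into its own short clip, and playing the clips in order would give the gradual transition. Slerp is often preferred over a straight linear blend here because it keeps the norm of the interpolated noise close to that of the endpoints.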

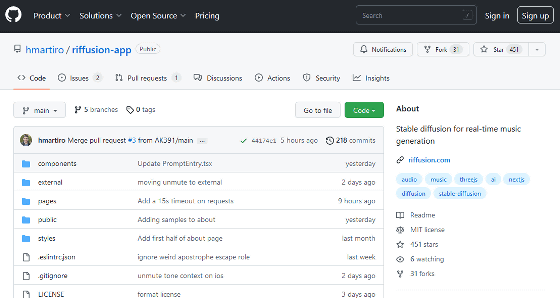

The source code and model data for Riffusion are available from the following link.

GitHub - hmartiro/riffusion-app: Stable diffusion for real-time music generation

https://github.com/hmartiro/riffusion-app


in Review, Software, Web Application, Posted by log1o_hf