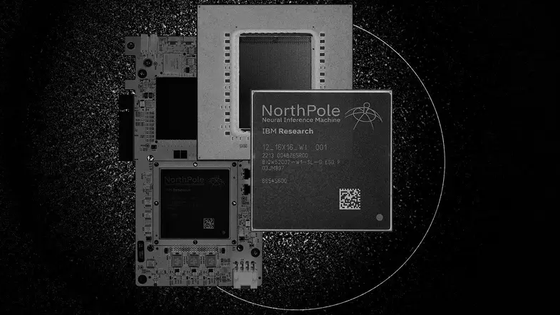

Announcing a new chip 'NeuRRAM' that can run AI more efficiently, allowing large-scale AI algorithms to run with improved accuracy on smaller devices

Artificial intelligence algorithms have made rapid progress in recent years, but technical limits and environmental concerns make it difficult to sustain the current pace of growth. Therefore, in August 2022,

A compute-in-memory chip based on resistive random-access memory | Nature

https://doi.org/10.1038/s41586-022-04992-8

A Brain-Inspired Chip Can Run AI With Far Less Energy | Quanta Magazine

https://www.quantamagazine.org/a-brain-inspired-chip-can-run-ai-with-far-less-energy-20221110/

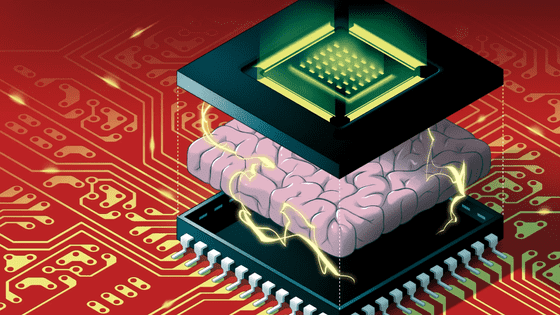

The newly developed chip is a highly energy-efficient design based on 'neuromorphic computing', an approach inspired by how the nervous system processes information. Conventional chips of this kind lacked the computational power needed for large-scale deep learning, and NeuRRAM is intended to solve that problem.

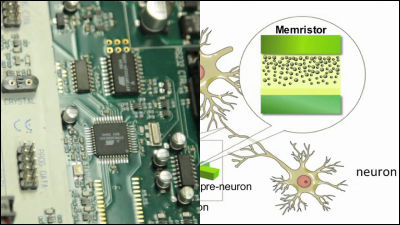

Conventional chips process information in binary, but NeuRRAM uses a new type of memory called 'resistive random-access memory (RRAM)' that operates in an analog manner, so each cell can hold multiple values along a continuous range rather than just 0 or 1. This allows more information to be stored in the same space. Wan and colleagues claim that the result is up to 1,000 times more energy efficient than conventional chips. In addition, smaller chips will allow complex algorithms to run on small devices that were previously unsuitable for AI, such as smartwatches and smartphones.
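As a rough illustration of why a multi-level cell can store more than a binary one, the sketch below computes the bits held by a cell with a given number of distinguishable levels and snaps a weight to the nearest level. The function names, the level counts and the weight range are assumptions chosen for illustration; they are not taken from the NeuRRAM paper.

```python
import math


def bits_per_cell(levels: int) -> float:
    """Bits stored by one cell that can reliably hold `levels` distinct states."""
    if levels < 2:
        raise ValueError("a cell needs at least two distinguishable states")
    return math.log2(levels)


def quantize(weight: float, levels: int, w_min: float = -1.0, w_max: float = 1.0) -> float:
    """Snap a weight to the nearest of `levels` evenly spaced values in [w_min, w_max]."""
    clipped = min(max(weight, w_min), w_max)
    step = (w_max - w_min) / (levels - 1)
    index = round((clipped - w_min) / step)
    return w_min + index * step


print(bits_per_cell(2))    # binary cell: 1.0 bit
print(bits_per_cell(16))   # hypothetical 16-level cell: 4.0 bits
print(quantize(0.3, 2))    # binary storage keeps only the sign: 1.0
print(quantize(0.3, 5))    # five levels land closer to the original: 0.5
```

With only two levels the weight 0.3 is stored as 1.0, while five levels store it as 0.5, which is the sense in which more levels per cell preserve more information.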

In a traditional computer, the computer's memory is located on a '

Around 2015, computer researchers recognized that devices using RRAM might be used to run large-scale AI, and development moved forward. That same year, a team of scientists at the University of California, Santa Barbara reported that RRAM devices can do more than store data. The result was groundbreaking because it showed that basic computational tasks, such as simple matrix multiplication, could be performed inside the memory itself.

Because NeuRRAM processes data in analog rather than digital form, the chip is said to be able to perform many matrix calculations in parallel, resulting in higher energy efficiency than digital RRAM memory.
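The idea of computing a matrix product inside memory can be sketched with the common textbook picture of an analog crossbar: weights are stored as conductances, inputs are applied as row voltages, and each column wire sums the resulting currents (Ohm's law plus Kirchhoff's current law). The code below is a minimal numerical model of that picture, not a description of NeuRRAM's actual circuit; the function name and the values are hypothetical.

```python
def crossbar_matvec(conductances, voltages):
    """Return the column currents of an idealized crossbar.

    conductances[i][j] is the conductance of the cell at row i, column j.
    voltages[i] is the voltage applied to row i.
    Column j collects I_j = sum_i voltages[i] * conductances[i][j].
    """
    if len(voltages) != len(conductances):
        raise ValueError("need one input voltage per crossbar row")
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for v, row in zip(voltages, conductances):
        for j in range(n_cols):
            # In hardware every cell contributes at once; the loop only models the sum.
            currents[j] += v * row[j]
    return currents


G = [[1.0, 2.0],
     [3.0, 4.0]]          # illustrative conductances (arbitrary units)
V = [0.5, 1.0]            # illustrative input voltages
print(crossbar_matvec(G, V))  # [3.5, 5.0]
```

In software this is an ordinary vector-matrix product computed step by step. In an analog array all the multiplications and additions happen simultaneously in the physics of the wires, which is where the parallelism described above comes from.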

However, the analog processing that NeuRRAM performs has two problems. The first is accuracy: analog RRAM is subject to variation and noise, which makes it difficult to run AI algorithms reliably. Wan and colleagues report, however, that if the AI algorithm is trained to tolerate this noise, it can reach accuracy similar to that of conventional computers.
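One common way to make a model tolerate noise is to inject random perturbations into the weights during training, so the learned weights still work when the hardware disturbs them. The toy sketch below trains a tiny logistic classifier this way. It is an illustration under assumed settings (Gaussian noise, a six-point dataset, hypothetical function names), not the training procedure used for NeuRRAM.

```python
import math
import random

# Toy, linearly separable data: label 1 when the coordinates are positive.
POINTS = [(1, 1), (2, 1), (1, 2), (-1, -1), (-2, -1), (-1, -2)]
LABELS = [1, 1, 1, 0, 0, 0]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def add_noise(weights, sigma, rng):
    """Model analog variation as additive Gaussian noise on each weight (an assumption)."""
    return [w + rng.gauss(0.0, sigma) for w in weights]


def train_with_noise(points, labels, sigma, steps=200, lr=0.5, seed=0):
    rng = random.Random(seed)
    weights = [0.0] * len(points[0])
    for _ in range(steps):
        noisy = add_noise(weights, sigma, rng)  # the forward pass sees perturbed weights
        grad = [0.0] * len(weights)
        for x, y in zip(points, labels):
            p = sigmoid(sum(w * xi for w, xi in zip(noisy, x)))
            for k, xi in enumerate(x):
                grad[k] += (p - y) * xi / len(points)
        weights = [w - lr * g for w, g in zip(weights, grad)]  # update the clean weights
    return weights


def accuracy_under_noise(weights, points, labels, sigma, trials=100, seed=1):
    """Fraction of correct predictions when every evaluation uses freshly perturbed weights."""
    rng = random.Random(seed)
    correct = 0
    for _ in range(trials):
        noisy = add_noise(weights, sigma, rng)
        for x, y in zip(points, labels):
            score = sum(w * xi for w, xi in zip(noisy, x))
            correct += int((score > 0) == (y == 1))
    return correct / (trials * len(points))


trained = train_with_noise(POINTS, LABELS, sigma=0.1)
print(accuracy_under_noise(trained, POINTS, LABELS, sigma=0.1))
```

The dataset is deliberately easy, so the point is the mechanism rather than the score: the gradient is computed from the noisy forward pass but applied to the clean weights, which pushes training toward solutions that keep a margin against perturbation.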

The second problem is analog RRAM's lack of flexibility. Running large-scale AI on analog RRAM required many wires and circuits, which consumed both energy and space. Wan and colleagues addressed this by designing a new chip that saves space and energy.

In addition, Wan and his colleagues aim to scale up by stacking multiple NeuRRAM chips, each about the size of a fingernail, and to improve energy efficiency further.

in Hardware, Posted by log1r_ut