How to draw the picture you want with 'Stable Diffusion', starting from a simple sketch

The image generation AI '

4.2 Gigabytes, or: How to Draw Anything

https://andys.page/posts/how-to-draw/

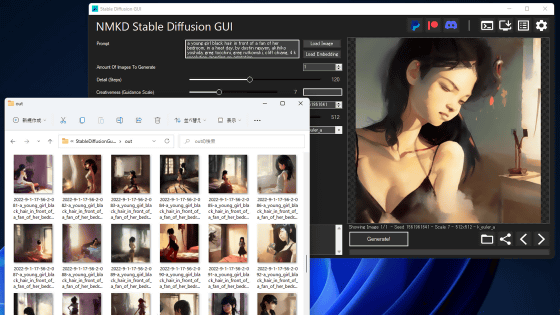

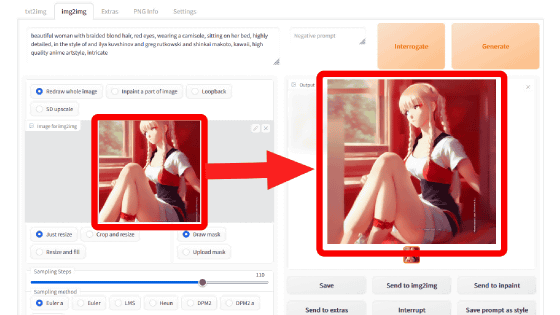

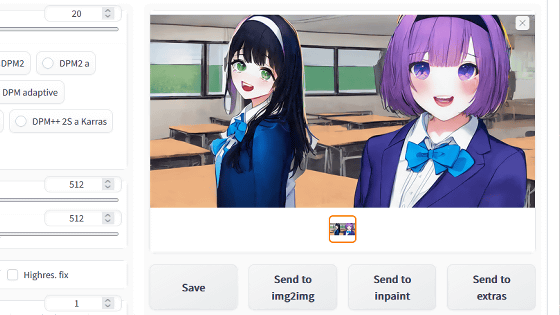

If you give Stable Diffusion an instruction such as 'A bear playing in the forest,' it often outputs an image that differs from what you had in mind: 'the composition is not what I expected,' or 'I wanted a winter forest, not a summer one.' To bring the output closer to what you imagined, you can give more detailed instructions, but because the recipient is an AI rather than a human, the instructions need to be phrased in a way that suits the AI. Stable Diffusion has a function called 'img2img', which lets you generate the desired image even from fairly rough instructions by supplying a reference image. Images actually generated with 'img2img' can be seen in the article below.

A site where anyone can try out the 'img2img' mode, which automatically generates the photos and illustrations you want with just simple drawings and keywords, using 'Stable Diffusion' - GIGAZINE

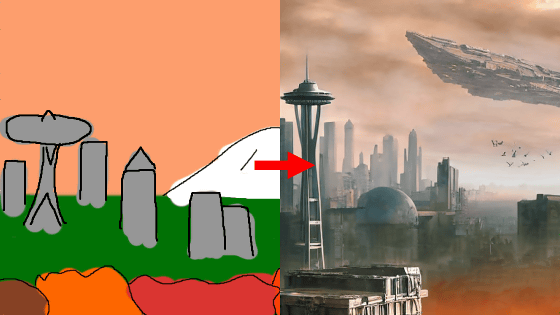

Mr. Salerno explains the steps for creating the following 'illustration of a spaceship flying over the ruins of Seattle' from a simple rough sketch using Stable Diffusion's 'img2img'.

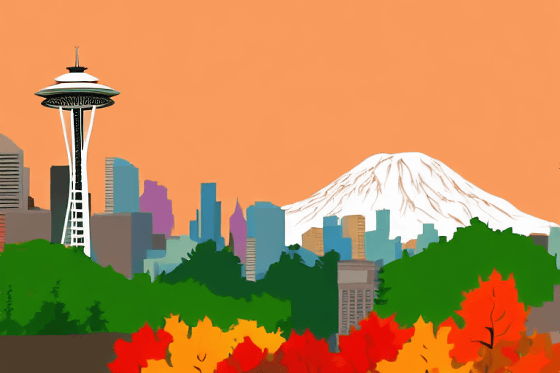

First, draw the background.

Then rough in the foreground, ground, cityscape, and mountains by hand.

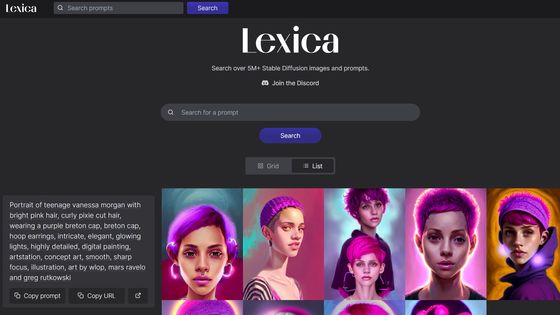

With the hand-drawn rough sketch specified as the reference image, the prompt 'Digital fantasy painting of the Seattle city skyline. Vibrant fall trees in the foreground. Space Needle visible. Mount Rainier in background. Highly detailed.' produced the result below. At this stage, the trees and cityscape are already solidly painted in. The 'strength' value, which specifies how much the output may change from the reference image, was set to 0.8.
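To make the effect of 'strength' more concrete, the sketch below follows the convention used by common img2img implementations, where the reference image is noised part of the way and only that fraction of the sampling steps is then run. The function name `denoising_steps` and the default of 50 sampling steps are assumptions for illustration; the article does not state how many steps Mr. Salerno used.

```python
def denoising_steps(strength: float, num_steps: int = 50) -> int:
    """Return how many sampling steps actually run for a given strength.

    Assumption: strength in [0, 1] is the fraction of the sampling schedule
    that is re-run on the noised reference image.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    return min(int(num_steps * strength), num_steps)


# Strength values that appear in the article.
for s in (0.8, 0.75, 0.2):
    print(f"strength={s}: {denoising_steps(s)} of 50 steps")
```

Under this convention, a high value such as 0.8 lets the model repaint most of the sketch, while a low value such as 0.2 only touches up the input.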

Next, the image generated above was specified as the reference image and fed back into Stable Diffusion with the prompt 'Digital Matte painting. Hyper detailed. City in ruins. Post-apocalyptic, crumbling buildings. Science fiction. Seattle skyline. Golden hour, dusk. Beautiful sky at sunset. High quality digital art. Hyper realistic.' The output is shown below. The trees in the foreground are gone, and the image now depicts a ruined Seattle cityscape. The 'strength' was again 0.8.

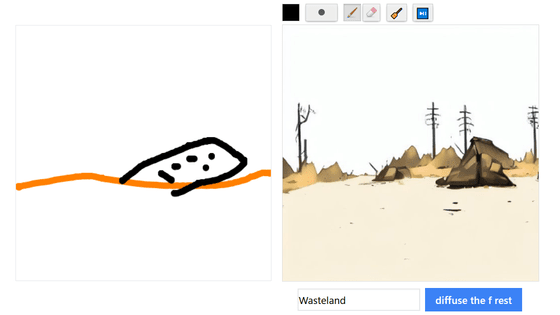

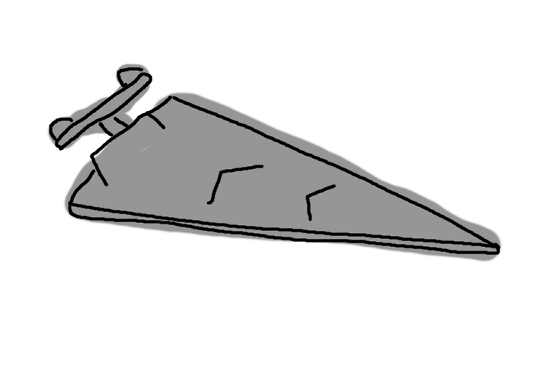
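Feeding the output of one pass into the next can be sketched as a small loop. This is a minimal sketch, not Mr. Salerno's actual tooling: `pipe` is assumed to be any callable that behaves like an img2img pipeline (for example, Hugging Face diffusers' `StableDiffusionImg2ImgPipeline`, which accepts `prompt`, `image`, and `strength` and returns an object with an `images` list), and `run_passes` is a hypothetical helper name.

```python
from typing import Any, Callable, Iterable, Tuple


def run_passes(pipe: Callable[..., Any], sketch: Any,
               passes: Iterable[Tuple[str, float]]) -> Any:
    """Run img2img repeatedly, using each output as the next reference image."""
    image = sketch
    for prompt, strength in passes:
        # Assumed call shape: pipe(prompt=..., image=..., strength=...).images[0]
        image = pipe(prompt=prompt, image=image, strength=strength).images[0]
    return image


# The two Seattle passes described in the article, both at strength 0.8.
SEATTLE_PASSES = [
    ("Digital fantasy painting of the Seattle city skyline. "
     "Vibrant fall trees in the foreground. Space Needle visible. "
     "Mount Rainier in background. Highly detailed.", 0.8),
    ("Digital Matte painting. Hyper detailed. City in ruins. "
     "Post-apocalyptic, crumbling buildings. Science fiction. "
     "Seattle skyline. Golden hour, dusk. Beautiful sky at sunset. "
     "High quality digital art. Hyper realistic.", 0.8),
]
```

With a real pipeline, `sketch` would be the hand-drawn image loaded in whatever image type that pipeline expects, and the call would be `run_passes(pipe, sketch, SEATTLE_PASSES)`.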

Next, draw a rough sketch of the spaceship floating in the sky.

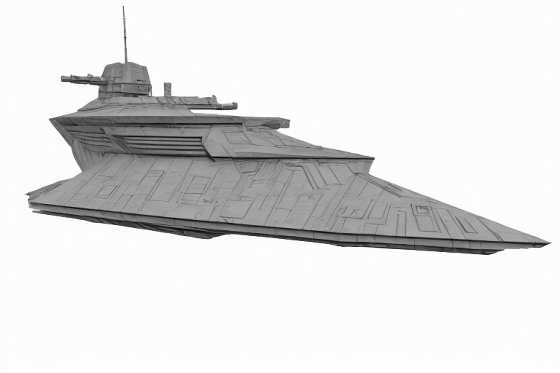

Below is the result of giving the prompt 'Digital fantasy science fiction painting of a Star Wars Imperial Class Star Destroyer. Highly detailed, white background.' with a 'strength' of 0.8. The output is a spaceship that looks much like a Star Destroyer.

The image below shows the generated spaceship placed directly on top of the Seattle image. The spaceship alone is drawn in a different style, which spoils the overall atmosphere.

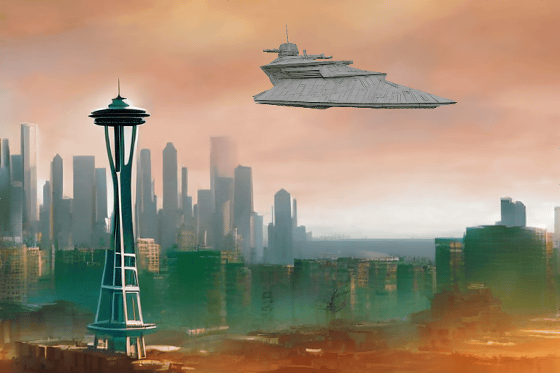
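The article does not say how the spaceship was placed onto the Seattle image, so the following is only one possible approach: because the spaceship was generated on a white background, near-white pixels can be treated as transparent when pasting. The sketch uses plain nested lists of RGB tuples to stay dependency-free; `paste_without_white` and the threshold of 240 are hypothetical choices.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]


def paste_without_white(background: Image, overlay: Image,
                        top: int, left: int, threshold: int = 240) -> Image:
    """Paste overlay onto a copy of background, skipping near-white pixels."""
    result = [row[:] for row in background]
    for y, row in enumerate(overlay):
        for x, pixel in enumerate(row):
            by, bx = top + y, left + x
            inside = 0 <= by < len(result) and 0 <= bx < len(result[by])
            is_white = all(channel >= threshold for channel in pixel)
            if inside and not is_white:
                result[by][bx] = pixel
    return result


sky = [[(250, 140, 60)] * 4 for _ in range(3)]  # 4x3 sunset-colored background
ship = [[(255, 255, 255), (90, 90, 100)],       # white pixels are dropped
        [(90, 90, 100), (255, 255, 255)]]
combined = paste_without_white(sky, ship, top=1, left=1)
```

A hard cut like this does nothing to reconcile the two drawing styles, which is consistent with the pasted spaceship looking out of place.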

Mr. Salerno therefore regenerated the 'Seattle image' and the 'spaceship image' repeatedly until the atmosphere of the two matched. The following is a composite of the best-matching images obtained after multiple trials.

Next, draw a rough sketch of a bird like the one below...

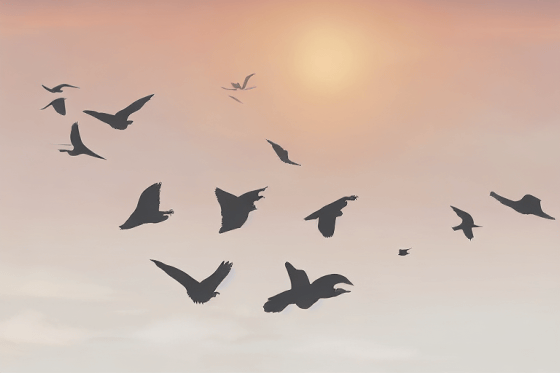

With the rough bird sketch specified as the reference image, the prompt 'Digital Matte painting. Hyper detailed. Brds fly into the horizon. Golden hour, dusk. Beautiful sky at sunset. High quality digital art. Hyper realistic.' was run with a 'strength' of 0.75, generating the image of birds in flight shown below.

The image below shows the birds composited into the scene. It is now quite close to the ideal.

Finally, to blend everything together, the image above was specified as the reference image and run with the prompt 'Digital Matte painting. Hyper detailed. City in ruins. Post-apocalyptic, crumbling buildings. Science fiction. Seattle skyline. Star Wars Imperial Star Destroyer hovers. Birds fly in the distance. Golden hour, dusk. Beautiful sky at sunset. High quality digital art. Hyper realistic.' at a 'strength' of 0.2. The result is below: a high-quality illustration of a spaceship flying over a devastated city.

As for the text given to Stable Diffusion, there is a widely used technique that says 'adding the name of a real artist produces a higher-quality image.' Mr. Salerno, however, generates his images without artist names, because he considers it undesirable for AI-made images to show up in the results when someone searches the web for an artist's name.
