Apple publishes FAQ stating that 'detection of child sexual abuse material does not compromise privacy'

Apple's plan to prevent the spread of CSAM (Child Sexual Abuse Material), announced on Thursday, August 5, 2021, has drawn a backlash. Apple has now published an FAQ about the new features.

Expanded Protections for Children Frequently Asked Questions

(PDF file) https://www.apple.com/child-safety/pdf/Expanded_Protections_for_Children_Frequently_Asked_Questions.pdf

The contents of the FAQ are as follows. Because it is a collection of answers to frequently asked questions, many of the answers overlap.

Q:

What is the difference between 'Communication safety in Messages' and 'CSAM detection in iCloud Photos'?

A:

The two are different features and do not use the same technology.

'Communication safety in Messages' is designed to give parents and children additional tools to help protect children from sending and receiving 'sexually explicit images' in the 'Messages' app. It works only on images sent or received in the 'Messages' app by child accounts set up in Family Sharing. Images are analyzed on the device, and when a child account sends or receives an image judged to be sexually explicit, the photo is blurred, the child is warned, and the child is reassured that it is okay not to view or send the photo. As an additional precaution, young children can also be told that their parents will receive a message if they do view it.

CSAM detection, on the other hand, is designed to keep CSAM off iCloud Photos without giving Apple information about any photos other than those that match known CSAM images. Possession of CSAM is illegal in many countries, including the United States. The feature only affects users who choose to store their photos in iCloud Photos; it does not affect users who do not use iCloud Photos. It does not apply to iMessage and does not affect any other data on the device.

Q:

Who can use 'Communication safety in Messages'?

A:

'Communication safety in Messages' is only available for accounts set up as families in iCloud. A parent account must opt in to turn the feature on for the family group. Parental notifications can only be enabled by parents, and only for child accounts aged 12 or younger.

Q:

Does that mean the content of messages will be shared with Apple or law enforcement agencies?

A:

No. Apple does not gain access to your communications through this feature. In addition, images and information exchanged in the 'Messages' app are not shared with the National Center for Missing and Exploited Children (NCMEC) or with law enforcement agencies. 'Communication safety in Messages' is a separate function from 'CSAM detection'.

Q:

Does 'Communication safety in Messages' break the end-to-end encryption of the 'Messages' app?

A:

No. 'Communication safety in Messages' does not change the privacy guarantees of the 'Messages' app, and Apple does not gain access to your communications. Users of the 'Messages' app remain in control of what is sent and to whom. When the feature is enabled for a child's account, the device intervenes if an image sent or received in the 'Messages' app is judged to be a 'sexually explicit image'. For accounts of children aged 12 or younger, parents can also set up a notification for when such an image is sent or viewed. Apple itself is not involved in the communication, the evaluation of images, the intervention, or the notification.

Q:

Does 'Communication safety in Messages' prevent children in abusive homes from seeking help?

A:

'Communication safety in Messages' applies only to 'sexually explicit images' sent and received in the 'Messages' app. It does not affect other communications, such as text, that victims can use to ask for help. We also provide guidance through Siri and Search on how victims, and people who know them, can seek help.

Q:

Will parents be notified without the child being warned or given a choice?

A:

No. First, a parent account must enable 'Communication safety in Messages', and only then can parental notifications be enabled for accounts of children aged 12 or younger. For those accounts, whenever a 'sexually explicit image' is about to be sent or received, the child is warned that viewing or sending the image will send a notification to their parents. A notification is sent only if the child still views or sends the image after the warning. For accounts of children aged 13 to 17, the child is still warned and asked whether they want to view or share the 'sexually explicit image', but parents are not notified.
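The warning and notification rules above can be summarized as a small decision function. This is a hypothetical model written only to make the rules easier to follow, not Apple's code: the function and parameter names are invented, the on-device image check is reduced to a boolean `image_flagged`, and treating ages 18 and over as "not a child account" is an assumption based on the 13 to 17 range mentioned in the FAQ.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    warned: bool           # image blurred and the child shown a warning
    parent_notified: bool  # a parent receives a notification


def communication_safety_outcome(child_age: int, feature_enabled: bool,
                                 notifications_enabled: bool, image_flagged: bool,
                                 child_proceeds: bool) -> Outcome:
    """Hypothetical model of the rules described in the FAQ, not Apple's code.

    image_flagged stands in for the on-device check for sexually explicit images.
    """
    # Assumption: the feature covers child accounts (under 18) whose parent turned
    # it on, and only acts when the on-device check flags the image.
    if child_age >= 18 or not feature_enabled or not image_flagged:
        return Outcome(warned=False, parent_notified=False)
    # Notifications exist only for accounts aged 12 or younger, must be enabled by
    # the parent, and fire only if the child views or sends the image after the warning.
    notify = child_age <= 12 and notifications_enabled and child_proceeds
    return Outcome(warned=True, parent_notified=notify)


print(communication_safety_outcome(10, True, True, True, child_proceeds=False))
# Outcome(warned=True, parent_notified=False)
print(communication_safety_outcome(15, True, True, True, child_proceeds=True))
# Outcome(warned=True, parent_notified=False)
```

The key point the model makes explicit is that the child is always warned first, and a notification depends on both the child's age and the child's own choice after the warning.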

Q:

Does 'CSAM detection' mean that Apple scans all the images stored on my iPhone?

A:

No. By design, 'CSAM detection' applies only to photos that the user chooses to upload to iCloud Photos. Even then, Apple only learns about images that match known CSAM. If iCloud Photos is disabled, 'CSAM detection' does not run. It also does not operate on the private photo library stored on your iPhone.

Q:

Does 'CSAM detection' download CSAM images to my iPhone to compare them with my photos?

A:

No. CSAM images are not sent to or stored on the device. Instead of actual images, Apple uses unreadable hashes stored on the device. A hash is a string of numbers that represents a known CSAM image, but it cannot be read or converted back into the original image. The hash list is based on images acquired by child protection organizations and verified to be CSAM. Using new applications of cryptography, Apple can learn only about iCloud Photos accounts that store photos matching known CSAM.
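To make the idea of matching photos against a list of hashes more concrete, here is a minimal sketch of a perceptual hash lookup. The FAQ does not describe Apple's hashing function or its cryptographic protocol, so this sketch swaps in the textbook 'average hash', assumes each image has already been shrunk to an 8x8 grayscale grid, and leaves out the cryptography entirely. The names `average_hash`, `hamming`, and `matches_known_list` are hypothetical, and the images are synthetic gradients.

```python
from typing import Iterable, List

# Hypothetical input format: an image already reduced to 8x8 grayscale values (0-255).
Grid = List[List[int]]


def average_hash(grid: Grid) -> int:
    """Textbook 'average hash': one bit per pixel, set when the pixel is brighter than the mean."""
    pixels = [p for row in grid for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def matches_known_list(grid: Grid, known_hashes: Iterable[int], max_distance: int = 0) -> bool:
    """True if the image's hash is within max_distance bits of any hash on the list."""
    h = average_hash(grid)
    return any(hamming(h, k) <= max_distance for k in known_hashes)


# Usage with synthetic images only.
original = [[(r * 8 + c) * 4 for c in range(8)] for r in range(8)]
brightened = [[p + 3 for p in row] for row in original]   # same picture, slightly brighter
unrelated = [[252 - p for p in row] for row in original]  # inverted gradient

known = {average_hash(original)}  # the matcher needs only the list of hashes, not the images
print(matches_known_list(brightened, known))  # True
print(matches_known_list(unrelated, known))   # False
```

Because this hash keeps only one bit per cell of an 8x8 grid, a huge number of different images share each hash value. That is why the original picture cannot be rebuilt from the hash, and also why false matches are possible at all.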

Q:

Why is Apple doing 'CSAM detection' now?

A:

One of the key challenges in this area is protecting children while also preserving user privacy. With the new technology, Apple learns only about iCloud Photos accounts that store known CSAM, and learns nothing about other images stored on the device. Existing technology that scans all users' photos stored in the cloud poses a privacy risk to every user. CSAM detection in iCloud Photos offers significant privacy benefits because Apple learns nothing about your photos unless they match known CSAM.

Q:

Can the CSAM detection technology be used to detect things other than CSAM?

A:

It is designed to prevent that from happening. CSAM detection in iCloud Photos is built to work only with CSAM hashes provided by NCMEC and other child safety organizations. This hash list is based on images acquired by child protection organizations and verified to be CSAM. There is no automatic reporting to law enforcement, and Apple conducts a human review before making a report to NCMEC. As a result, the system is designed only to report photos in iCloud Photos that are known CSAM. In most countries, including the United States, possessing CSAM is a crime, and Apple is obliged to report any instances it learns of to the appropriate authorities.

Q:

Can the government force Apple to add non-CSAM images to the hash list?

A:

Apple will refuse any such demand. Apple's CSAM detection feature is built solely to detect, among the photos stored in iCloud Photos, known CSAM images identified by experts at NCMEC and other child safety organizations. We have faced demands to build and deploy government-mandated changes that degrade user privacy, and we have steadfastly refused them. We will continue to refuse such demands. To be clear, this technology is limited to detecting CSAM stored in iCloud Photos, and we will not accede to any government request to expand its scope. Apple also conducts a human review before making a report to NCMEC. If the system flags photos that do not match known CSAM, the account will not be disabled and no report will be made to NCMEC.

Q:

Could non-CSAM images be 'injected' into the system to flag accounts for something other than CSAM?

A:

Our process is designed to prevent that from happening. The hash list used for matching comes from known CSAM images that have been validated by child safety experts. Apple does not add hashes to the list. In addition, because the same list of hashes is stored in the operating system of every iPhone and iPad, the system cannot be used to target a specific individual. Finally, there is no automatic reporting to law enforcement, and Apple conducts a human review before making a report to NCMEC. In the unlikely event that the system flags images that do not match known CSAM, the account will not be disabled and no report will be made to NCMEC.

Q:

Could CSAM detection in iCloud Photos cause innocent people to be falsely reported to law enforcement?

A:

The system is designed to be highly accurate: the likelihood that it would incorrectly flag any given account is less than one in one trillion per year. In addition, whenever the system flags an account, Apple conducts a human review before making a report to NCMEC. As a result, system errors or 'attacks' will not lead to innocent people being reported to NCMEC.
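The FAQ does not explain how the 'one in one trillion per year' figure was derived. One common way an account-level error rate can end up far below the per-image error rate is to flag an account only after several matches. The sketch below computes that binomial tail probability under loudly hypothetical assumptions: a fixed per-photo false match probability `p`, a number of photos uploaded per year, a match `threshold`, and, crucially, that false matches happen independently. None of these values or assumptions come from Apple's FAQ, and the function names are invented for illustration.

```python
from math import exp, lgamma, log, log1p


def log_binomial_pmf(k: int, n: int, p: float) -> float:
    """log P(X = k) for X ~ Binomial(n, p), computed in log space to avoid overflow."""
    return (lgamma(n + 1) - lgamma(k + 1) - lgamma(n - k + 1)
            + k * log(p) + (n - k) * log1p(-p))


def prob_account_flagged(p: float, n_photos: int, threshold: int) -> float:
    """P(at least `threshold` false matches among `n_photos` uploads).

    Hypothetical model: every photo false-matches independently with probability p.
    The upper tail is summed directly so tiny probabilities keep their precision.
    """
    return sum(exp(log_binomial_pmf(k, n_photos, p))
               for k in range(threshold, n_photos + 1))


# Made-up numbers: 10,000 photos a year, a 1-in-a-million false match rate per photo.
for t in (1, 2, 5):
    print(t, prob_account_flagged(1e-6, 10_000, t))
```

With these made-up parameters, flagging on a single match would hit roughly 1% of accounts per year, while requiring five matches pushes the figure below one in a trillion. The result hinges on the independence assumption: if false matches cluster, for example across many similar shots of the same scene, the real rate could be far higher.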

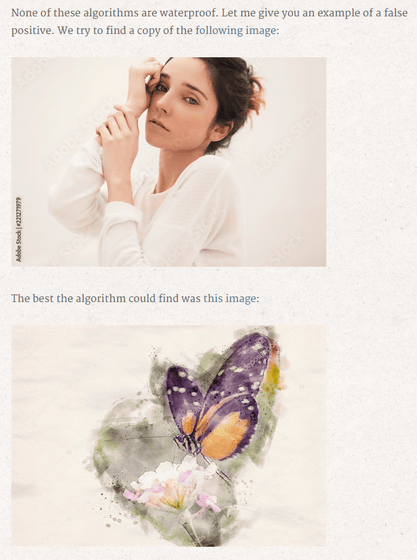

Apple's CSAM detection system is not the first to use hashes to identify images; the same approach is also used, for example, to detect unauthorized copies of images from stock photo sites. Oliver, an engineer at a company that actually uses this technology, says the basis for Apple's claim that 'false positives occur less than once in a trillion per year' is unclear, and argues that cases where multiple photos are falsely detected should be expected.

The Problem with Perceptual Hashes

https://rentafounder.com/the-problem-with-perceptual-hashes/

Oliver cites an example of a false positive in which the algorithm judged two different images to be identical.
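The two images from Oliver's post are not reproduced here. As a toy illustration of the same kind of collision, the sketch below applies the textbook 'average hash' (an assumption: this is neither Apple's hashing function nor necessarily the one Oliver tested) to two synthetic 8x8 images that look nothing alike yet produce identical hashes. The helper name `average_hash` is hypothetical.

```python
from typing import List

Grid = List[List[int]]  # hypothetical 8x8 grayscale input, values 0-255


def average_hash(grid: Grid) -> int:
    """Textbook 'average hash': one bit per pixel, set when the pixel is brighter than the mean."""
    pixels = [p for row in grid for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


# Image A: a smooth gradient running from dark (top left) to light (bottom right).
gradient = [[(r * 8 + c) * 4 for c in range(8)] for r in range(8)]
# Image B: a hard split, top half pure black and bottom half pure white.
two_tone = [[0 if r < 4 else 255 for _ in range(8)] for r in range(8)]

pixel_difference = sum(abs(a - b) for ra, rb in zip(gradient, two_tone) for a, b in zip(ra, rb))
print(pixel_difference > 0)                              # True: the images differ
print(average_hash(gradient) == average_hash(two_tone))  # True: but their hashes collide
```

This hash only records whether each cell is brighter or darker than the average, so any two images with the same light and dark layout collide. Real perceptual hashes are more elaborate, but they share the same basic trade-off: the tolerance to small edits that makes them useful is also what lets unrelated images match.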

in Web Service, Posted by logc_nt