Apple announces that it will scan iPhone photos and messages to prevent the sexual exploitation of children; the Electronic Frontier Foundation and others protest that the plan 'damages user security and privacy'

On August 5, 2021 local time, Apple published a page titled 'Expanded Protections for Children' and announced that it will introduce new safety features to limit the spread of Child Sexual Abuse Material (CSAM). Specifically, Apple will 'display a warning about content exchanged in the Messages app that appears to be CSAM,' 'inspect data stored in iCloud Photos so that CSAM can be detected,' and 'allow users to report CSAM through Siri and Search.' Users, however, have criticized the plan, saying that 'checking the contents of iCloud Photos is a privacy breach.'

Child Safety-Apple

Apple plans to scan US iPhones for child abuse imagery | Ars Technica

https://arstechnica.com/information-technology/2021/08/apple-plans-to-scan-us-iphones-for-child-abuse-imagery/

Apple announces new protections for child safety: iMessage features, iCloud Photo scanning, more ―― 9to5Mac

https://9to5mac.com/2021/08/05/apple-announces-new-protections-for-child-safety-imessage-safety-icloud-photo-scanning-more/

On August 4, 2021 local time, before Apple announced its CSAM efforts, Johns Hopkins University cryptographer Matthew Green said that he had received independent confirmation from multiple people that Apple would release a client-side tool for CSAM scanning the next day, and called it 'a really bad idea.'

I've had independent confirmation from multiple people that Apple is releasing a client-side tool for CSAM scanning tomorrow. This is a really bad idea.

— Matthew Green (@matthew_d_green) August 4, 2021

After that, Apple officially announced its efforts on CSAM. Apple says it will limit the spread of CSAM in three areas: the 'Messages app,' 'iCloud Photos,' and 'Siri and Search.'

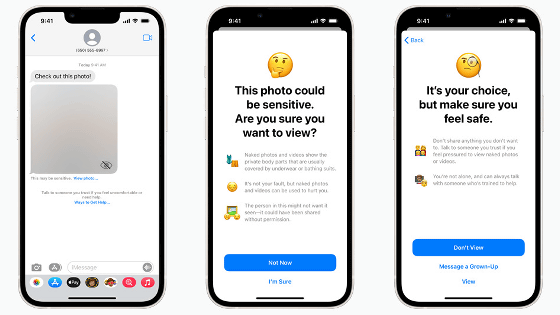

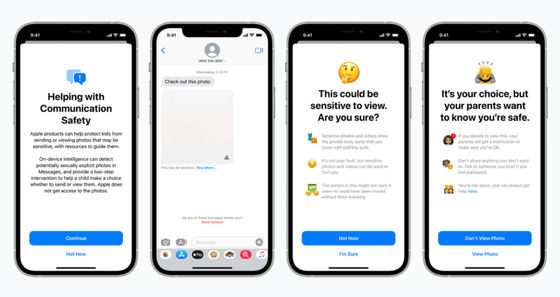

◆ Messages app

A new tool will be introduced to warn children and their parents when sexually explicit photos are sent or received in the Messages app. When such a photo is received, it will be blurred, the child will be warned, and information will be provided to help report the problem. In addition, if the child views the photo or tries to send one themselves, the parents can be notified by message.

It is not a human moderator that determines whether content exchanged in the Messages app is sexually explicit, but a scanning system that uses machine learning on the device. In other words, the system is designed so that Apple does not have direct access to the message content, so users' privacy is preserved. Scanning in the Messages app will arrive with iOS 15 / iPadOS 15 / macOS Monterey and will be available to users who have set up Family Sharing in iCloud.

◆ iCloud Photos

In addition, to prevent CSAM from spreading on the Internet, Apple has announced that it will 'use new technology in iOS and iPadOS to detect known CSAM images stored in iCloud Photos.' If Apple detects CSAM, it will report it to the National Center for Missing & Exploited Children (NCMEC).

Regarding the iCloud Photos scanning function, Apple said that it was 'designed with user privacy in mind.' Instead of scanning images in the cloud, the system checks for problematic content on each user's device, using a database of hashes of known CSAM provided by NCMEC and other child safety organizations. The matching process uses a cryptographic technique called 'Private Set Intersection (PSI)' that determines whether there is a match without revealing the image itself. The result of the on-device check is then uploaded to iCloud in encrypted form as a 'safety certificate' (Apple's own term is 'safety voucher').
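The on-device matching step described above can be sketched roughly as follows. This is a simplified illustration, not Apple's implementation: it uses SHA-256 of the raw bytes as a stand-in for a perceptual image hash, and plain set membership instead of Private Set Intersection, so unlike the real protocol the device here learns the result directly. The function names `image_hash`, `build_known_hashes`, and `count_matches` are hypothetical.

```python
import hashlib


def image_hash(data: bytes) -> str:
    """Stand-in hash (assumption): SHA-256 of the raw bytes.

    A real system would use a perceptual hash that survives resizing
    and re-encoding; SHA-256 only matches byte-identical files.
    """
    return hashlib.sha256(data).hexdigest()


def build_known_hashes(known_images: list[bytes]) -> set[str]:
    """Build the database of known hashes (supplied by a third party)."""
    return {image_hash(img) for img in known_images}


def count_matches(photos: list[bytes], known_hashes: set[str]) -> int:
    """Count how many photos hash to an entry in the known-hash database."""
    return sum(1 for photo in photos if image_hash(photo) in known_hashes)


# Hypothetical sample data, used only to exercise the functions.
known = build_known_hashes([b"known-image-1", b"known-image-2"])
library = [b"known-image-1", b"holiday-photo", b"known-image-2"]
matches = count_matches(library, known)
```

The point of the sketch is only that the comparison is made against hashes of already-known images, not by classifying the content of each photo.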

In addition, by using another technology called 'threshold secret sharing,' the system is designed so that Apple can inspect the safety certificates only when more than a certain number of known CSAM items are detected in an account that uses iCloud Photos. Apple emphasized the accuracy of the system, saying that the threshold is set to provide an extremely high level of accuracy and that the chance of the system falsely flagging an account as 'having CSAM content' is less than one in one trillion per year.
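To illustrate the idea of threshold secret sharing in general, here is a minimal sketch of Shamir's scheme, in which a secret can be recovered only when at least `threshold` shares are combined. The article does not describe how Apple's system actually distributes shares, so treat this purely as an illustration of the technique; the field modulus `PRIME` and the function names `make_shares` and `recover_secret` are assumptions made for this example.

```python
import secrets

# Field modulus (illustrative choice): the Mersenne prime 2^127 - 1.
PRIME = 2**127 - 1


def make_shares(secret: int, threshold: int, n_shares: int) -> list[tuple[int, int]]:
    """Split `secret` into `n_shares` points on a random polynomial
    of degree `threshold - 1` whose constant term is the secret."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret must be in [0, PRIME)")
    if not 1 <= threshold <= n_shares:
        raise ValueError("need 1 <= threshold <= n_shares")
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def poly(x: int) -> int:
        # Horner evaluation of the polynomial modulo PRIME.
        acc = 0
        for c in reversed(coeffs):
            acc = (acc * x + c) % PRIME
        return acc

    return [(x, poly(x)) for x in range(1, n_shares + 1)]


def recover_secret(shares: list[tuple[int, int]]) -> int:
    """Recover the constant term by Lagrange interpolation at x = 0."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


shares = make_shares(secret=123456789, threshold=3, n_shares=5)
recovered = recover_secret(shares[:3])  # any 3 of the 5 shares suffice
```

With fewer than `threshold` shares, interpolation yields an unrelated value, which is the property the article describes: below the threshold, the protected data cannot be read.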

If the threshold is exceeded, Apple will inspect the contents of the safety certificates and manually review whether the content on iCloud is CSAM. If a problem is confirmed, Apple will disable the account and send a report to NCMEC. Users who believe their account was incorrectly flagged can file an appeal to have it restored.

◆ Siri and Search

In addition, Siri and Search will be updated so that, when users perform a CSAM-related search, they are told that interest in the topic is harmful and problematic and are given resources to help with the problem. The Siri and Search updates will be available from iOS 15 / iPadOS 15 / watchOS 8 / macOS Monterey, due out this fall.

In response to Apple's announcement, the Electronic Frontier Foundation criticized Apple's CSAM efforts in a post titled 'Apple's Plan to "Think Different" About Encryption Opens a Backdoor to Your Private Life.' Specifically, it argued that 'Apple currently holds the keys to view the photos stored in iCloud Photos, but does not scan them. Once the CSAM scanning feature is introduced, it is plain to see that users' privacy will be reduced,' that 'CSAM detection is known to be very difficult to do with machine learning, and it is quite possible that Apple's system will misclassify content,' and that 'with the addition of the scanning capability, Apple's messaging app, iMessage, can no longer be said to be end-to-end encrypted.'

Apple's Plan to 'Think Different' About Encryption Opens a Backdoor to Your Private Life | Electronic Frontier Foundation

https://www.eff.org/deeplinks/2021/08/apples-plan-think-different-about-encryption-opens-backdoor-your-private-life

The Center for Democracy & Technology also criticized the plan, saying that 'Apple's changes to messaging apps and photo services threaten user security and privacy.'

CDT: Apple's Changes to Messaging and Photo Services Threaten Users' Security and Privacy --Center for Democracy and Technology

https://cdt.org/press/cdt-apples-changes-to-messaging-and-photo-services-threaten-users-security-and-privacy/
